📆 Review period: May 24-June 7

If you're passionate about making interpretability useful and want to help shape the conversation, we'd love your input.

💡🔍 Self-nominate here:

docs.google.com/forms/d/e/1F...

github.com/ARBORproject...

Or propose your own idea! There are many ways to contribute, and we welcome all of them.

See the ARBOR discussion board for a thread for each project underway.

github.com/ArborProjec...

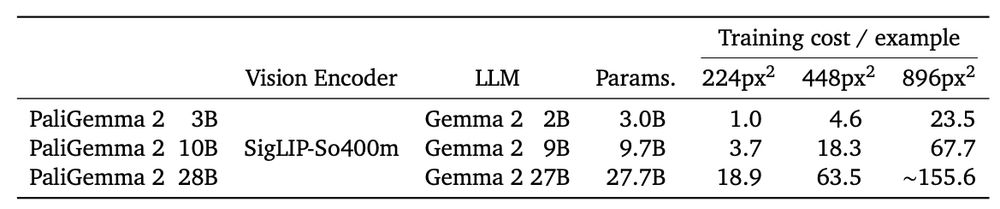

Not sure yet if you want to invest the time 🪄finetuning🪄 on your data? Give it a try with our ready-to-use "mix" checkpoints:

🤗 huggingface.co/blog/paligem...

🎤 developers.googleblog.com/en/introduci...

Here's a talk about it, now on YouTube, given by my awesome colleague John Schultz!

www.youtube.com/watch?v=JyxE...

research.google/programs-and...

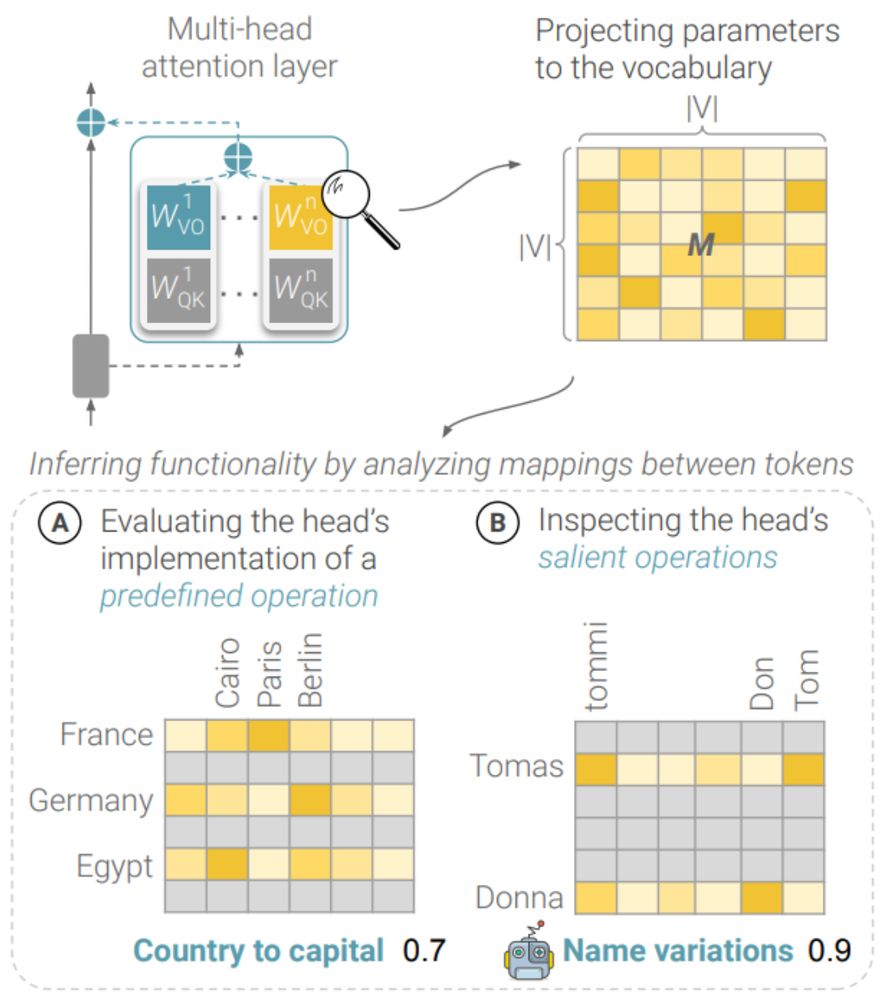

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)

medium.com/people-ai-re...

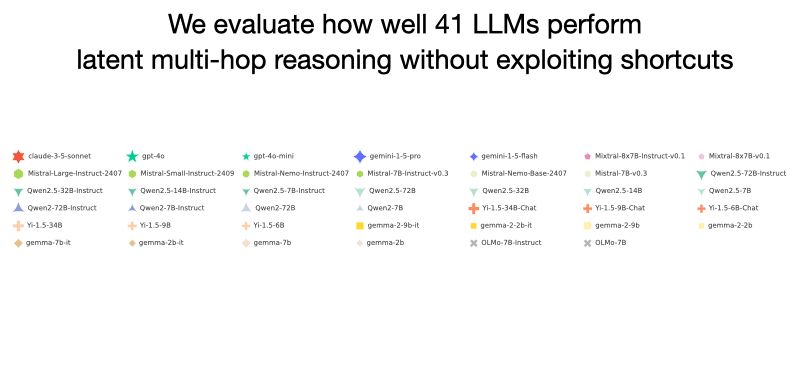

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training, without guessing the answer, but success depends heavily on the type of bridge entity (80% for country, 6% for year)! 1/N

dl.heeere.com/conditional-...

I wanted to try this idea myself, but with animation in a JavaScript context!

www.bewitched.com/demo/gini

www.bewitched.com/demo/jupiter/

www.google.com/about/career...
