www.heleenslagter.com

With @suryagayet.bsky.social and @peelen.bsky.social, in two fMRI studies we investigate mental object rotations that are driven by the scene context, rather than purely by cognitive operations. 🧵 www.science.org/doi/10.1126/...

journals.plos.org/plosbiology/...

Reaction times across three distinct perceptual tasks (total N = 90) varied with the electrical rhythm of the stomach.

#neuroskyence

Targeted to laypeople, they explore how LLMs work, what they can do, and what impacts they have on learning, well-being, disinformation, the workplace, the economy, and the environment.

Can our thoughts and feelings directly affect our physical well-being? Our pre-registered, double-blind RCT investigated this by testing if modulating the brain's reward system could enhance immune responses to vaccination.

www.thetransmitter.org/brain-imagin...

List of summer schools & short courses in (computational) neuroscience or data analysis of EEG / MEG / LFP: 🔗 docs.google.com/spreadsheets...

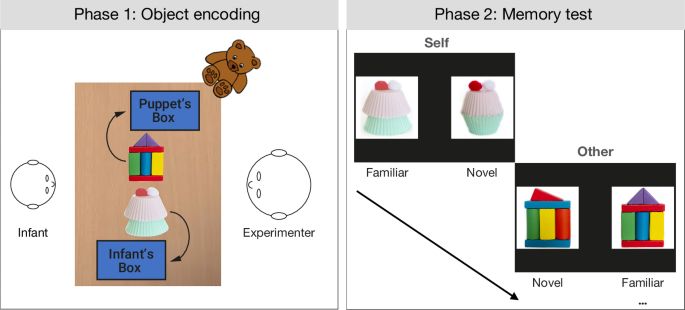

Huge thanks to @bayslab.org, Julie de Falco, Zahara, @cjungerius.bsky.social, @ivntmc.bsky.social, Adam, and Xiaolu for the lovely collaboration.

doi.org/10.31234/osf...

Please see the attached link for more details and share widely! We are excited to hear from you!

massgeneralbrigham.wd1.myworkdayjobs.com/MGBExternal/...

Please consider watching as a group, followed by a discussion of next steps for psychiatry-related research with your community. Discussions like these are crucial for progress.

mediacentral.princeton.edu/media/Nicole...

osf.io/preprints/ps...

We will soon open a **postdoc position** in my lab to address this question. If you are interested, please write to moritz.wurm@unitn.it.
