(& AIME is an older LLM)

Other key enhancements:

🔸 Best model that fits in a single consumer GPU or TPU host

🔸 KV-cache memory reduction with 5-to-1 interleaved attention

🔸 And more!

Read the blog for the full details on Gemma 3.
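
A rough sketch of why 5-to-1 interleaving shrinks the KV cache, assuming each "local" layer caches only a fixed sliding window while every sixth layer is "global" and caches the full context. The layer count, context length, and window size below are illustrative assumptions, not Gemma 3's published configuration, and `cached_tokens` is a hypothetical helper.

```python
def cached_tokens(num_layers, context_len, local_per_global=5, window=1024):
    """Token positions held in the KV cache, summed over all layers.

    Assumed layout: `local_per_global` sliding-window layers followed by
    one full-context (global) layer, repeated through the stack.
    """
    total = 0
    for layer in range(num_layers):
        # Every (local_per_global + 1)-th layer is global.
        is_global = (layer + 1) % (local_per_global + 1) == 0
        total += context_len if is_global else min(window, context_len)
    return total


# Illustrative numbers only: 12 layers, 8192-token context.
full = cached_tokens(12, 8192, local_per_global=0)  # every layer global
interleaved = cached_tokens(12, 8192)               # 5 local : 1 global
ratio = interleaved / full
print(f"interleaved cache is {ratio:.1%} of the all-global cache")
```

Under these assumptions the interleaved stack caches about 27% of what an all-global stack would, and the gap widens as the context grows because only the global layers scale with context length.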

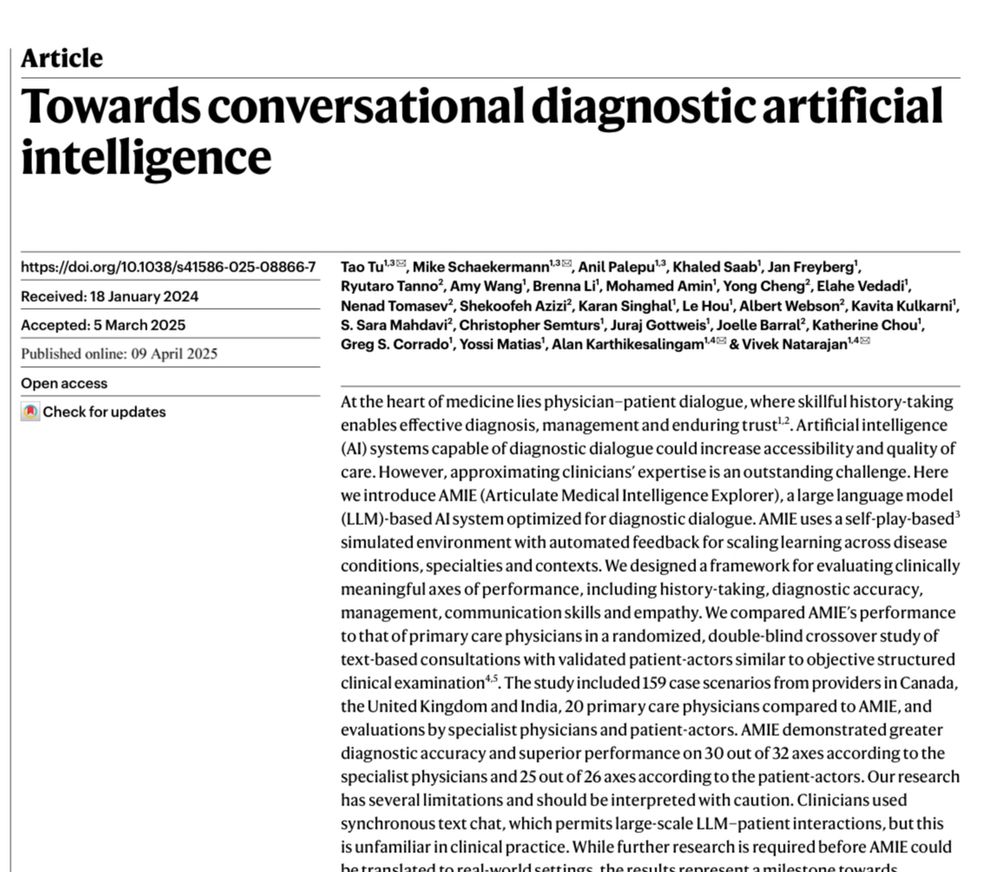

medium.com/people-ai-re...

🎤 developers.googleblog.com/en/introduci...

🤗 huggingface.co/blog/paligem...

💾 kaggle.com/models/googl...

🔧 github.com/google-resea...

7/7

github.com/varungodbole...

ALTA is A Language for Transformer Analysis.

Because ALTA programs can be compiled to transformer weights, it provides constructive proofs of transformer expressivity. It also offers new analytic tools for *learnability*.

arxiv.org/abs/2410.18077
