Postdoc at FLAIR Oxford. PhD from Safe and Trusted AI CDT @ KCL/Imperial. Previously visiting researcher at CHAI U.C. Berkeley.

dylancope.com

he/him

London 🇬🇧

youtu.be/NuIMZBseAOM?...

I think it's good to be considerate and express gratitude if a reviewer has put in time. But you also have to make actual arguments.

Attached meme summarises the main pitfall to be wary of!

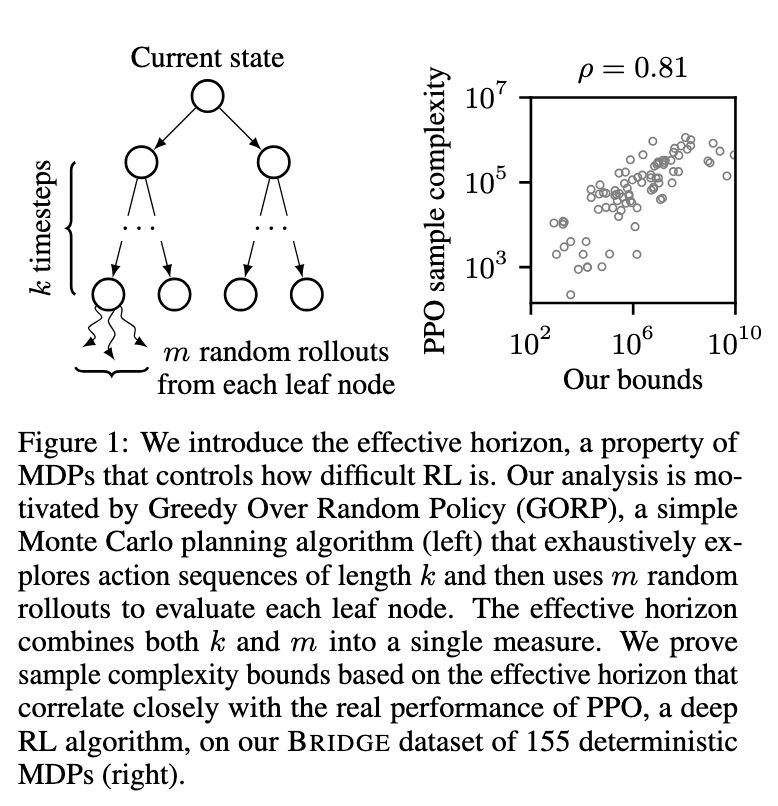

is totally fascinating in that it postulates two underlying, measurable structures that you can use to assess if RL will be easy or hard in an environment

Or you could try aggressively prompting the LLMs 😂

Writing custom environments in JAX can be a bit of a pain though.

- Custom gymnax env

- PureJAXRL

- PPO

- GRU RNNs

- wandb

- praying that my choice of hyperparameters is fine

But it also wouldn't remotely surprise me if Musk is suppressing mentions of bluesky over there.

Imo the problem is that IL is notoriously bad OOD. Not yet convinced "just scale" fixes the fundamental issue of biased demo data/compounding errors.
