📍Chicago, IL

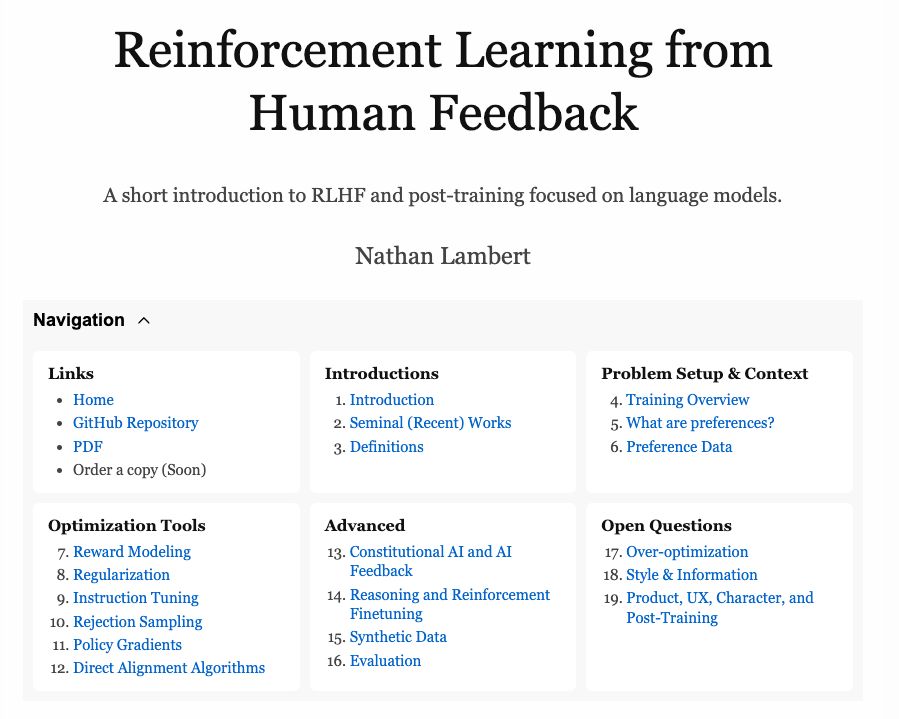

rlhfbook.com

- openreview.net/forum?id=AOl...

Incidentally, if you'd like to hear from them, we know a place where they've given (or are giving) keynotes.

New paper: Self-Improvement in Language Models: The Sharpening Mechanism

arxiv.org/abs/2412.01951

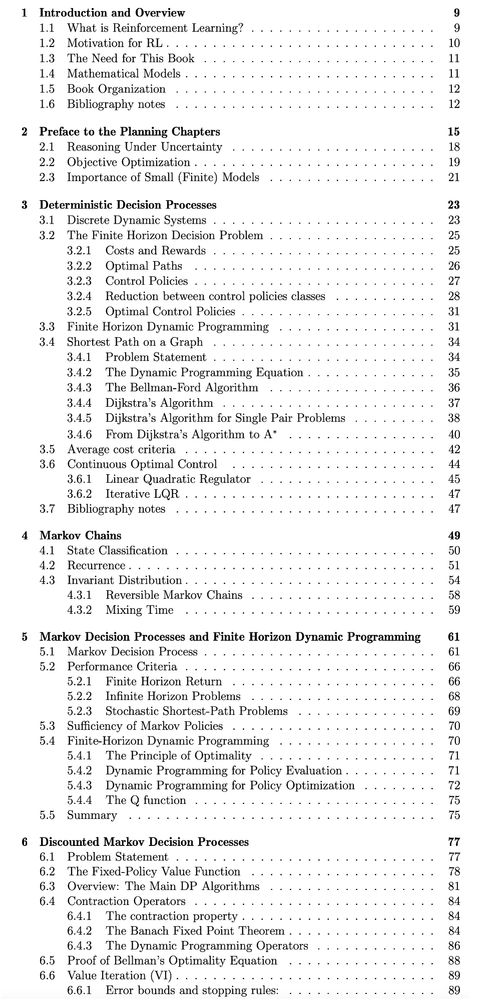

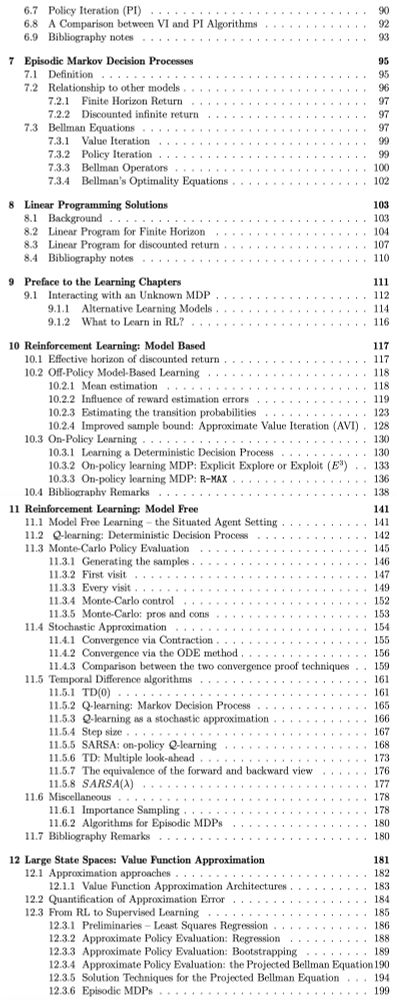

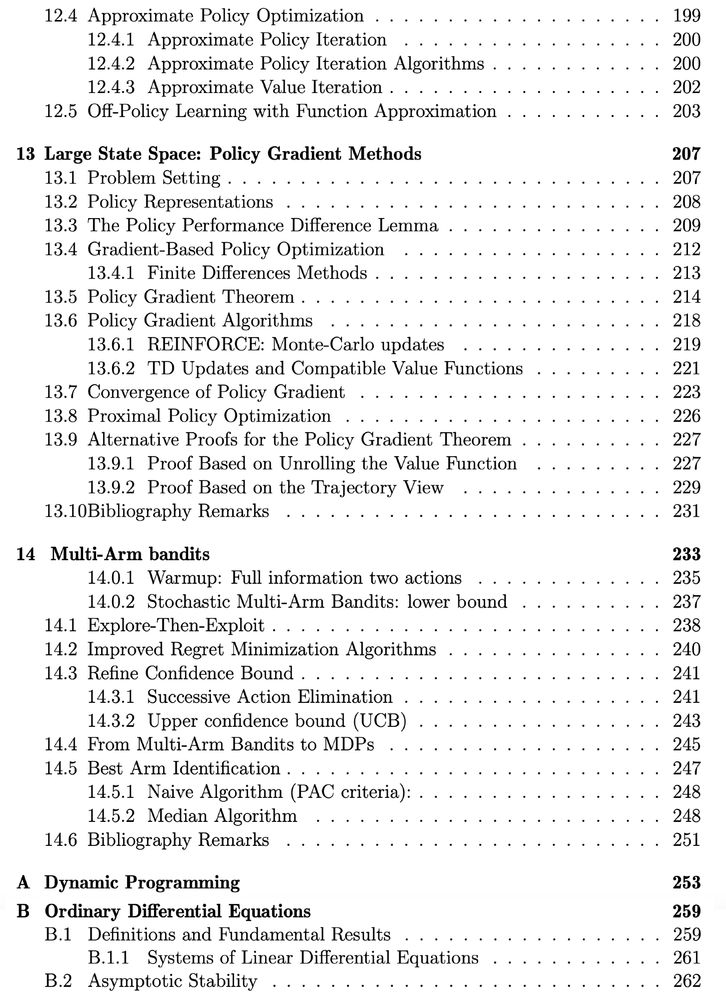

Check out new book draft:

Reinforcement Learning - Foundations sites.google.com/view/rlfound...

W/ Shie Mannor & Yishay Mansour

This is a rigorous first course in RL, based on our teaching at TAU CS and Technion ECE.

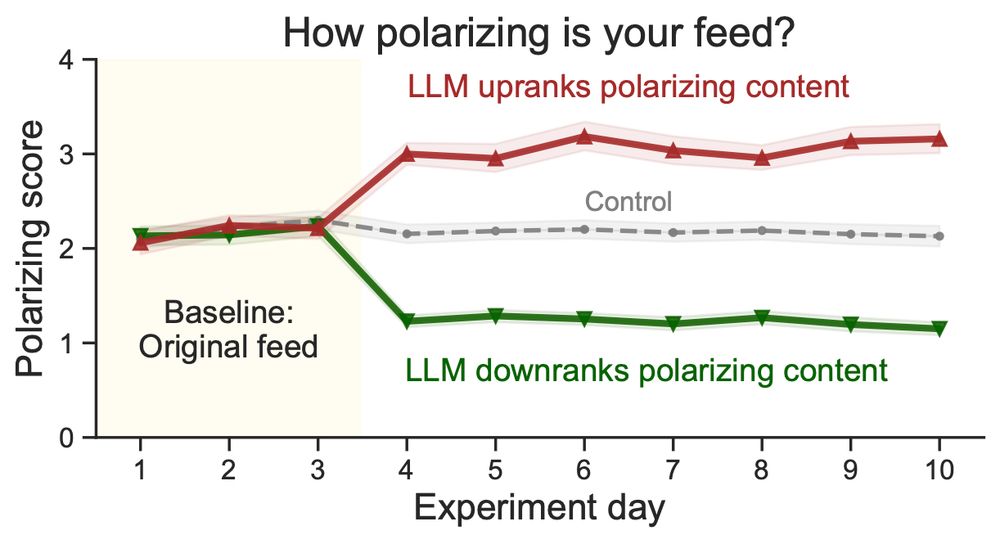

We ran a field experiment on X/Twitter (N=1,256) using LLMs to rerank content in real-time, adjusting exposure to polarizing posts. Result: Algorithmic ranking impacts feelings toward the political outgroup! 🧵⬇️

go.bsky.app/3WPHcHg