Utrecht University

Follow all or make a selection, up to you! Will update this list whenever more lab members join Bluesky 🙂

go.bsky.app/4yHiToK

doi.org/10.1017/S014...

We argue that effort must be considered when aiming to quantify capacity limits or a task's complexity.

www.nature.com/articles/s42...

Preprint: osf.io/preprints/ps...

In a new paper @jocn.bsky.social, we show that pupil size independently tracks breadth and load.

doi.org/10.1162/JOCN...

Using RIFT we reveal how the competition between top-down goals and bottom-up saliency unfolds within visual cortex.

say hi and show your colleagues that you're one of the dedicated ones by getting up early on the last day!

I'll show data demonstrating that synesthetic perception is perceptual, automatic, and effortless.

Join my talk (Thursday, early morning, Color II) to learn how the qualia of synesthesia can be inferred from pupil size.

Join and (or) say hi!

In this work, we investigate the dynamic competition between bottom-up saliency and top-down goals in the early visual cortex using rapid invisible frequency tagging (RIFT).

📄 Check it out on bioRxiv: www.biorxiv.org/cgi/content/...

🧠✨ Our work focuses on the dynamic competition between bottom-up saliency and top-down goals in early visual cortex, using Rapid Invisible Frequency Tagging.

@attentionlab.bsky.social @ecvp.bsky.social

@attentionlab.bsky.social @ecvp.bsky.social

Preprint for more details: www.biorxiv.org/content/10.1...

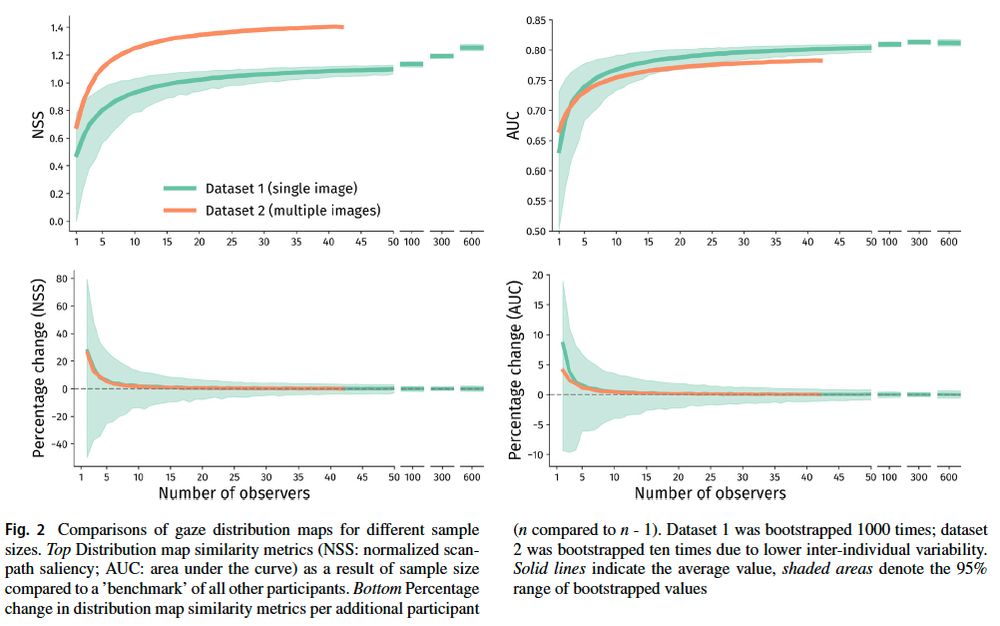

It depends, of course, but our guidelines help you navigate this in an informed way.

Out now in BRM (free) doi.org/10.3758/s134...

@psychonomicsociety.bsky.social

The dissertation is available here: doi.org/10.33540/2960

Open Access link: doi.org/10.3758/s134...

presentations today!

R2, 15:00

@chrispaffen.bsky.social:

Functional processing asymmetries between nasal and temporal hemifields during interocular conflict

R1, 17:15

@dkoevoet.bsky.social:

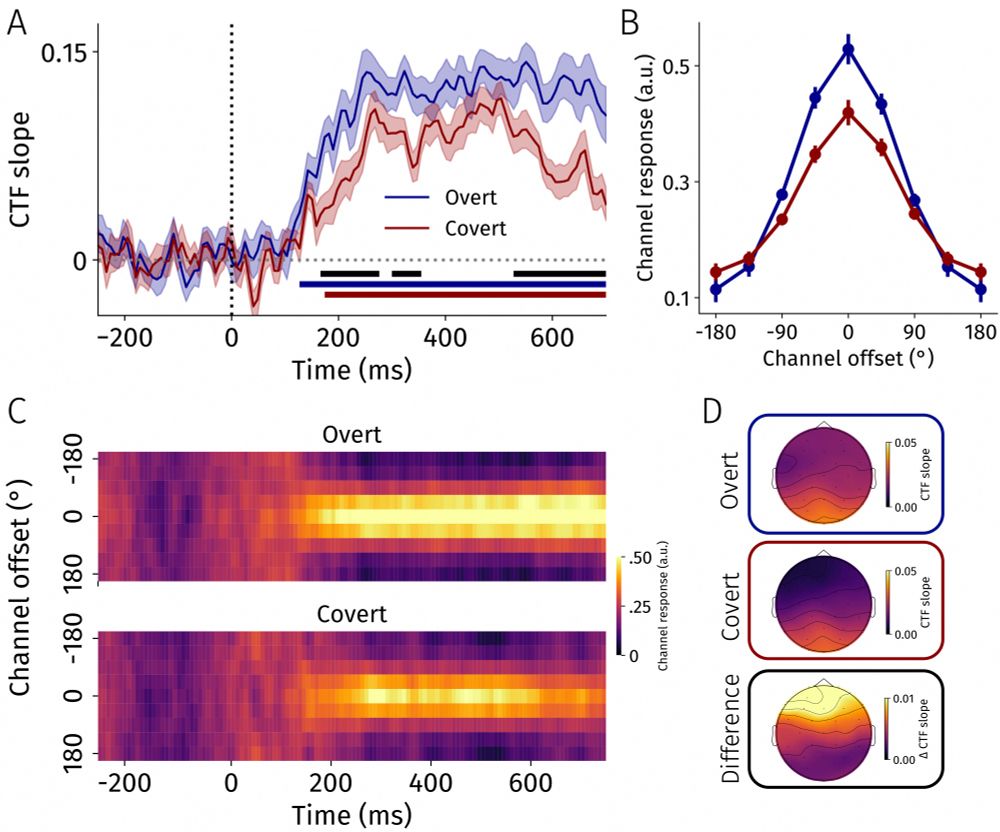

Sharper Spatially-Tuned Neural Activity in Preparatory Overt than in Covert Attention

In our new paper, we show that even when salience attracts gaze, costs remain a driver of saccade selection.

OA paper here:

doi.org/10.3758/s134...

We show: EEG decoding dissociates preparatory overt from covert attention at the population level:

doi.org/10.1101/2025...

eLife's digest:

elifesciences.org/digests/9776...

The paper:

elifesciences.org/articles/97760

#VisionScience

We present two very large eye tracking datasets of museum visitors (4-81 y.o.!) who freeviewed (n=1248) or searched for a +/x (n=2827) in a single feature-rich image.

We invite you to (re)use the dataset and provide suggestions for future versions 📋

osf.io/preprints/os...

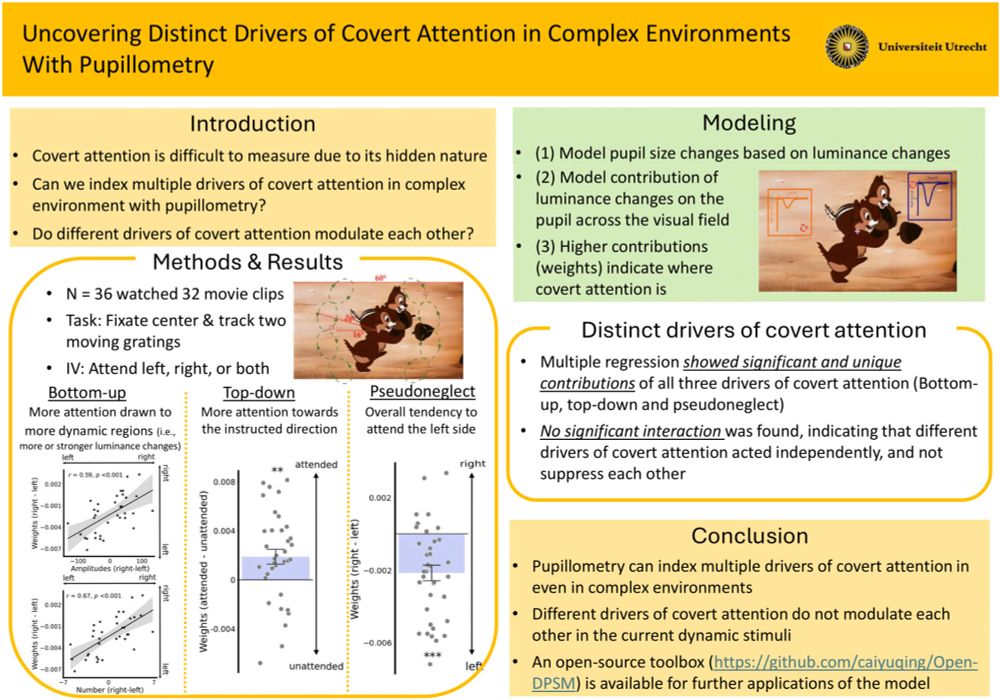

Typically, pupillometry struggles with complex stimuli. We introduced a method to study covert attention allocation in complex video stimuli: effects of top-down attention, bottom-up attention, and pseudoneglect could all be recovered.

doi.org/10.1111/psyp.70036

Using the pupil light response, our paper shows that presaccadic attention moves upward and downward.

doi.org/10.1111/psyp.70047
