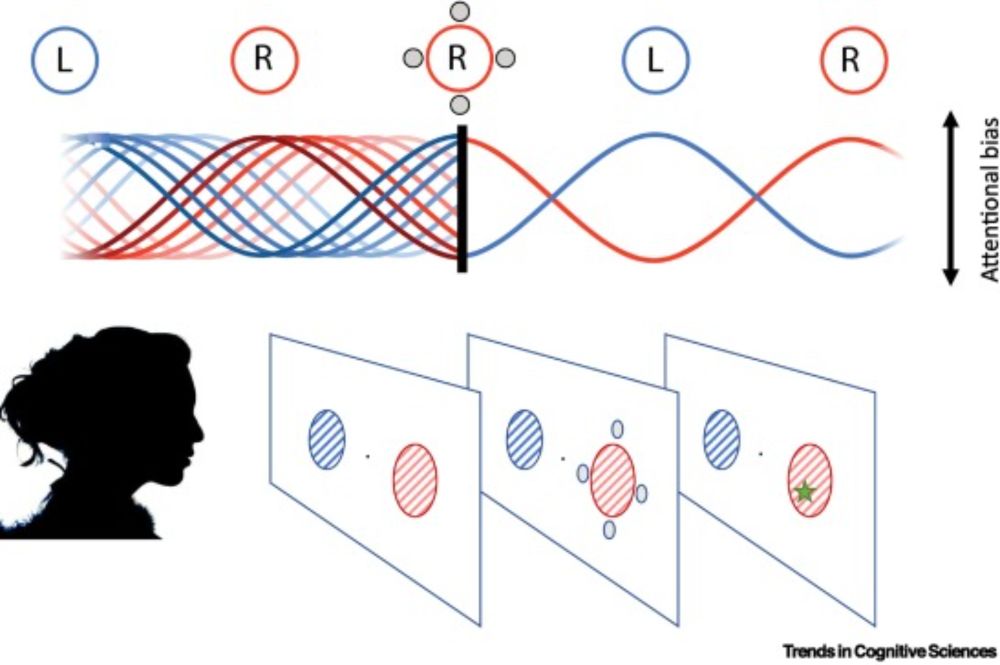

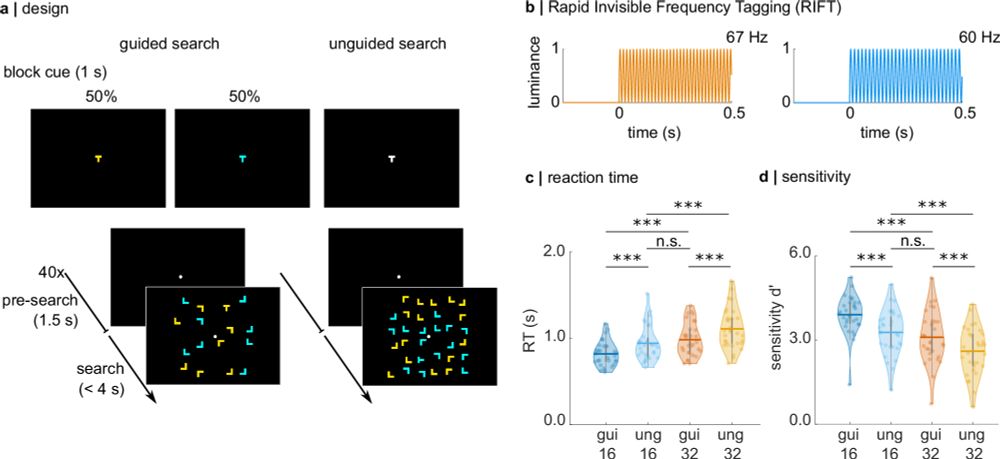

In this work, we investigate the dynamic competition between bottom-up saliency and top-down goals in the early visual cortex using rapid invisible frequency tagging (RIFT).

📄 Check it out on bioRxiv: www.biorxiv.org/cgi/content/...

👉 www.biorxiv.org/cgi/content/...

RIFT uses high-frequency flicker to probe attention in M/EEG with minimal stimulus visibility and little distraction. Until now, it required a costly high-speed projector.

@attentionlab.bsky.social @ecvp.bsky.social

Preprint for more details: www.biorxiv.org/content/10.1...

🧠✨ Our work examines the dynamic competition between bottom-up saliency and top-down goals in early visual cortex using Rapid Invisible Frequency Tagging (RIFT).

@attentionlab.bsky.social @ecvp.bsky.social

Thanks everyone for your contributions 💜

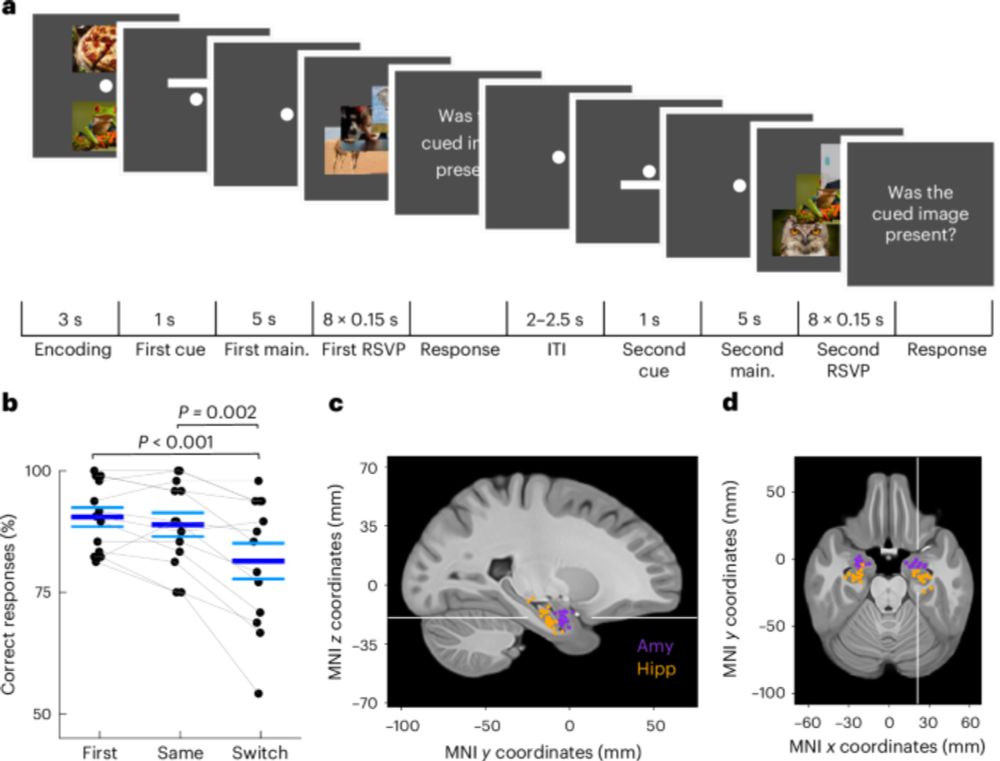

www.nature.com/articles/s41...

www.cell.com/trends/cogni...

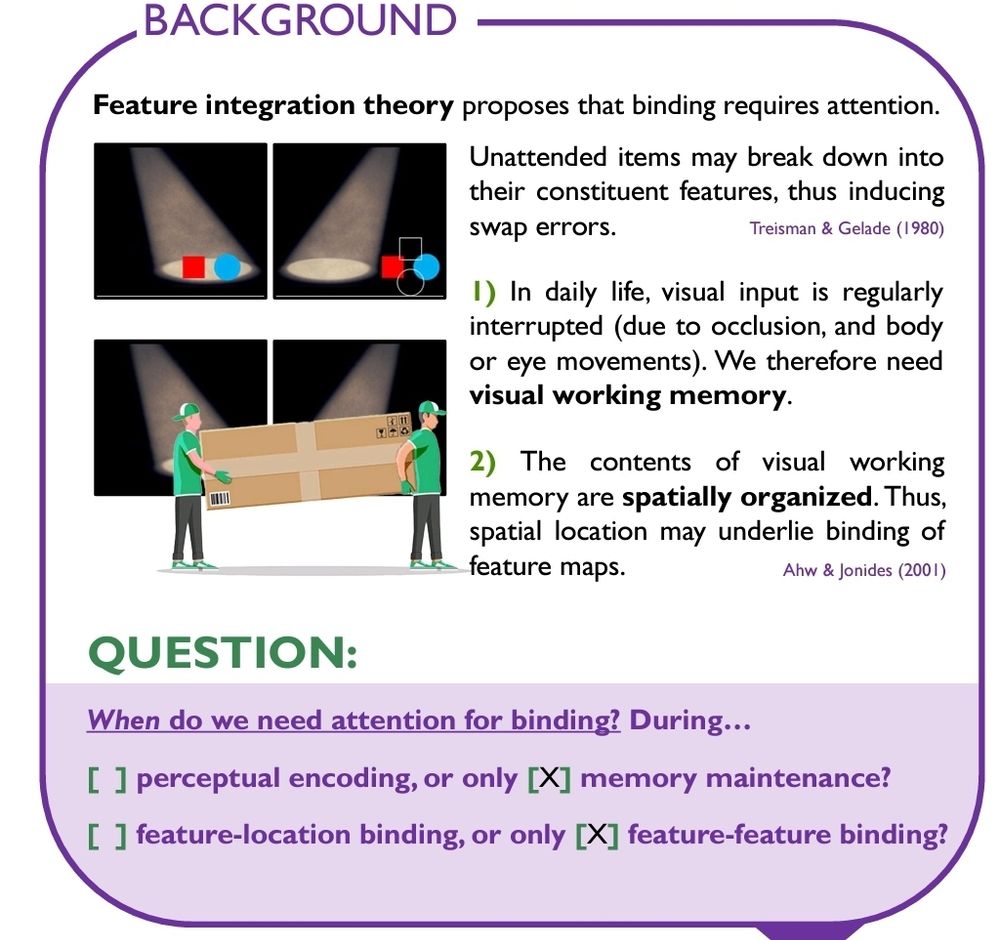

Open Access link: doi.org/10.3758/s134...

@katduecker.bsky.social, we show that feature guidance in visual search alters neuronal excitability in early visual cortex, supporting a priority-map-based attentional mechanism.

rdcu.be/eqFX7

📅 Date: June 25th & 26th

📍 Location: Trippenhuis, Amsterdam

www.jneurosci.org/content/jneu...
