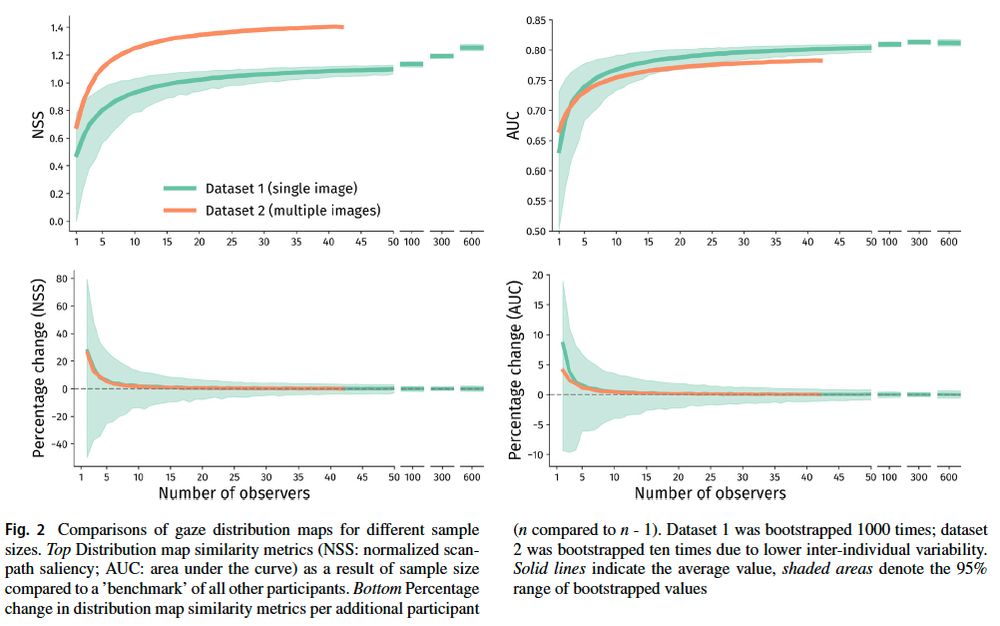

It depends, of course, but our guidelines help you navigate this in an informed way.

Out now in BRM (free) doi.org/10.3758/s134...

@psychonomicsociety.bsky.social

www.rug.nl/about-ug/wor...

Updated preprint: osf.io/preprints/os...

Data: osf.io/kf4sb/

The dissertation is available here: doi.org/10.33540/2960

Open Access link: doi.org/10.3758/s134...

Tomorrow in the Attention: Neural Mechanisms session at 17:15. You can check out the preprint in the meantime:

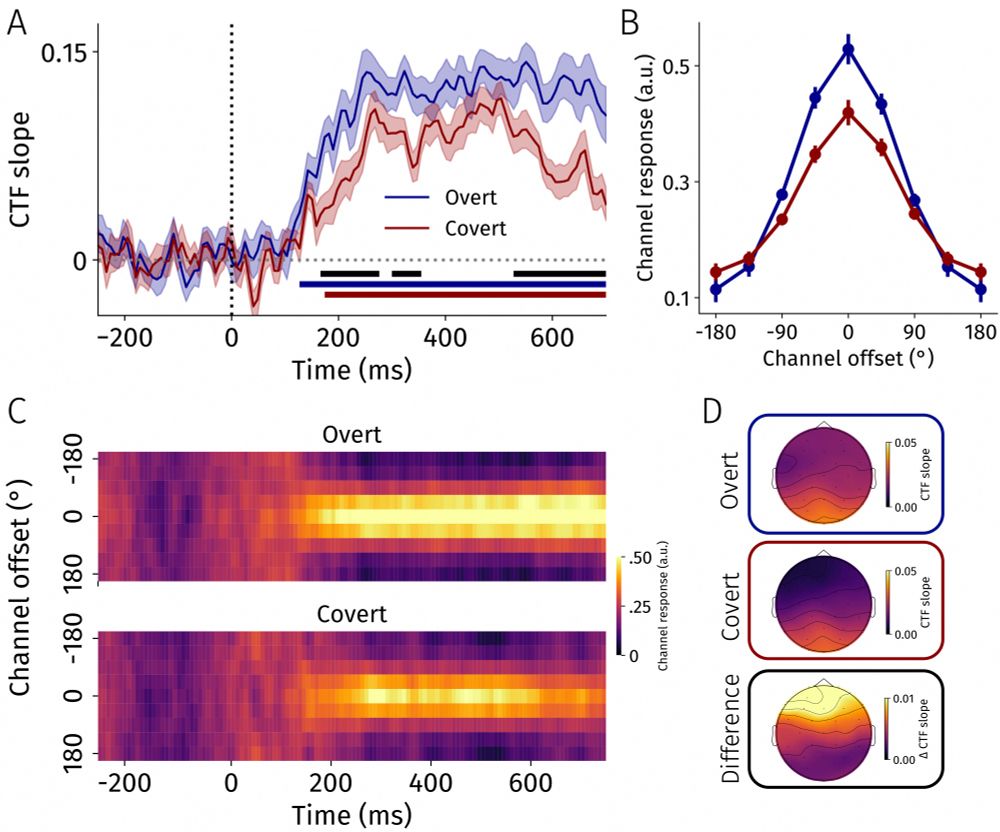

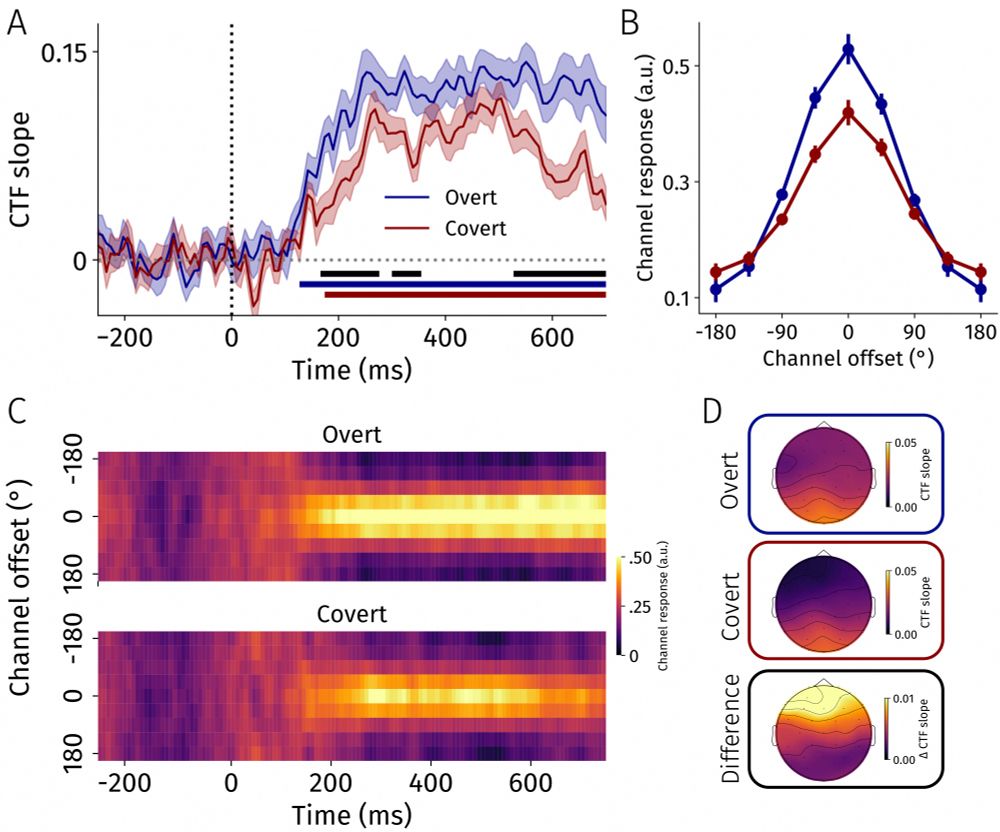

We show: EEG decoding dissociates preparatory overt from covert attention at the population level:

doi.org/10.1101/2025...

In our new paper, we show that even when salience attracts gaze, costs remain a driver of saccade selection.

OA paper here:

doi.org/10.3758/s134...

How do self-paced encoding and retention relate to performance in (working) memory-guided actions?

Find out now in Memory and Cognition: doi.org/10.3758/s134...

(or check the short version below)🧵

I will investigate looked-but-failed-to-see (LBFTS) errors in visual search, under the expert guidance of Johan Hulleman and Jeremy Wolfe. Watch this space!

eLife's digest:

elifesciences.org/digests/9776...

The paper:

elifesciences.org/articles/97760

#VisionScience

eLife's digest:

elifesciences.org/digests/9776...

& the 'convincing & important' paper:

elifesciences.org/articles/97760

I consider this my coolest ever project!

#VisionScience #Neuroscience

We present two very large eye-tracking datasets of museum visitors (4-81 y.o.!) who free-viewed (n=1248) or searched for a +/x (n=2827) in a single feature-rich image.

We invite you to (re)use the dataset and provide suggestions for future versions 📋

osf.io/preprints/os...

Great science, even better people :)

#MultisensoryPerception #MotionTracking #MotionPrediction #WorkingMemory

Included: an assignment that lets you measure pupil size. In my classes, this replicates Hess & Polt's 1964 effort finding without an eyetracker. Feel free to use it!

#VisionScience #neuroscience #psychology 🧪

www.biorxiv.org/content/10.1...

@tianyingq.bsky.social's new PBR meta-analysis across 28 experiments shows: increases in access cost reliably push toward internal WM use.

doi.org/10.3758/s134...

#VisionScience 🧪

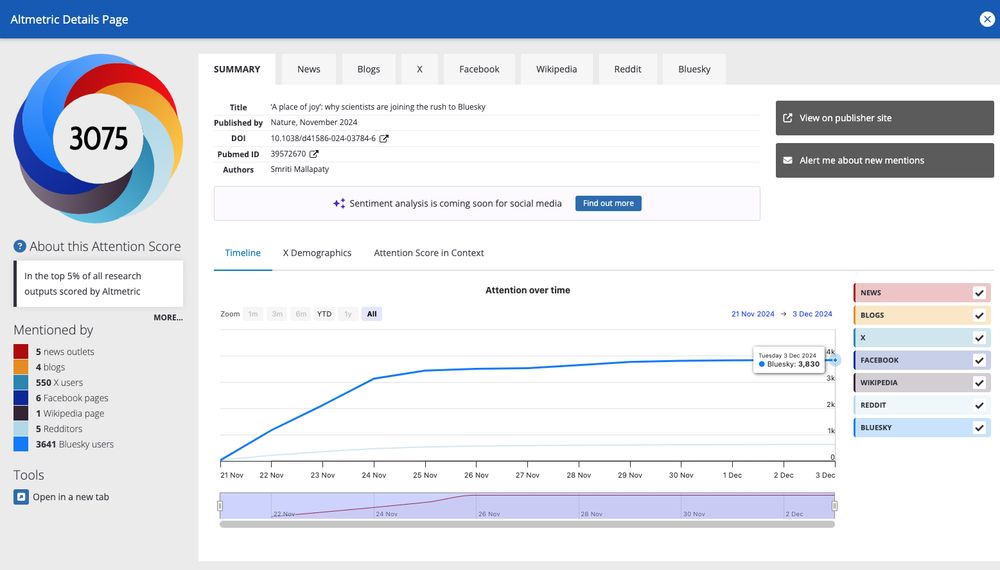

A Place of Joy.