Member of the INRIA-Valeo ASTRA team.

Website: boulch.eu

📝 Paper: bmva-archive.org.uk/bmvc/2025/a...

💻 Code: github.com/valeoai/muddos

This is joint work by my great co-authors @alexandreboulch.bsky.social, @gillespuy.bsky.social, @tuanhungvu.bsky.social, Renaud Marlet, @ncourty.bsky.social, and myself.

🚀 Introducing NAF: a universal, zero-shot feature upsampler.

It turns low-res ViT features into pixel-perfect maps.

- ⚡ Model-agnostic

- 🥇 SoTA results

- 🚀 4× faster than SoTA

- 📈 Scales up to 2K res

I'll be presenting this at GRETSI at the end of August: hal.science/hal-05142942 👀

Check out DIP, an effective post-training strategy by @ssirko.bsky.social, @spyrosgidaris.bsky.social,

@vobeckya.bsky.social @abursuc.bsky.social and Nicolas Thome 👇

#iccv2025

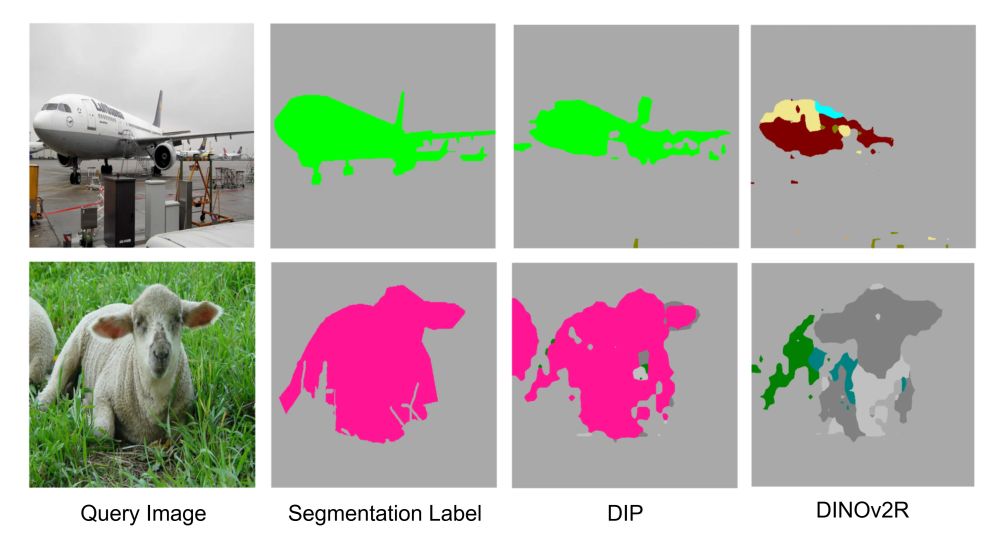

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

Training and inference code is available, along with the model checkpoint.

GitHub repo: github.com/astra-vision...

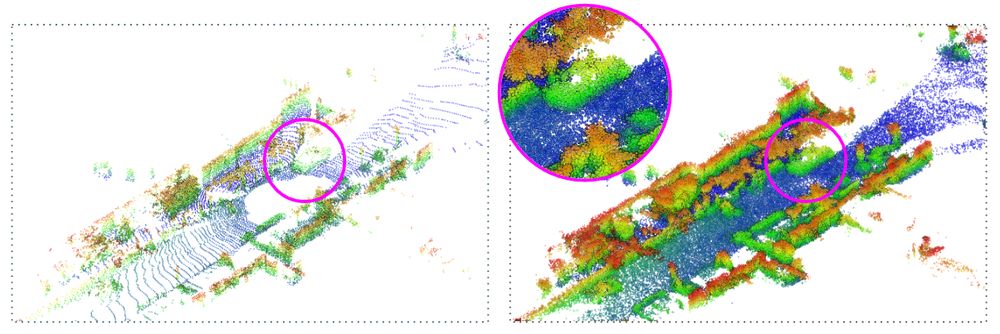

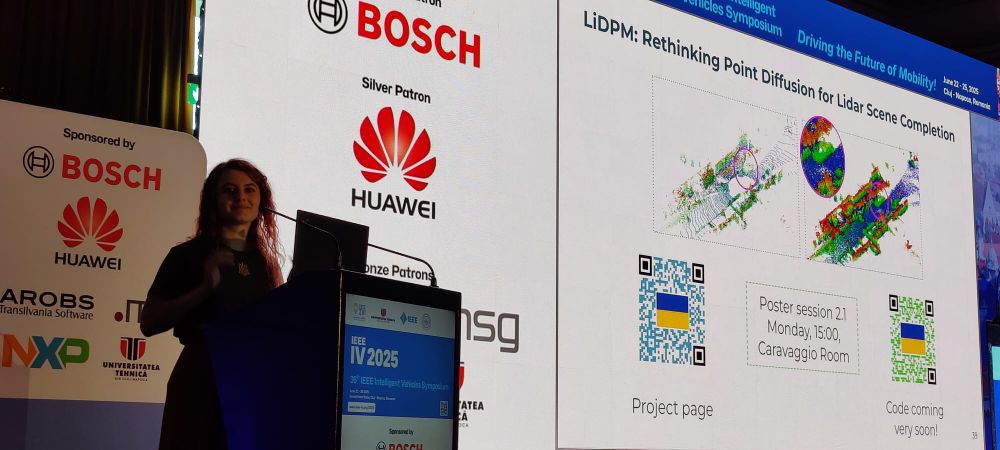

#IV2025

Project page: astra-vision.github.io/LiDPM/

w/ @gillespuy.bsky.social, @alexandreboulch.bsky.social, Renaud Marlet, Raoul de Charette

Also, come see our poster at 3pm in the Caravaggio room and ask me anything 😉

Paper: arxiv.org/abs/2506.11136

Project Page: jafar-upsampler.github.io

GitHub: github.com/PaulCouairon...

We trained a 1.2B parameter model on 1,800+ hours of raw driving videos.

No labels. No maps. Just pure observation.

And it works! 🤯

🧵👇 [1/10]

This is also an excellent occasion to fit all team members in a photo 📸

Introducing AnySat: one model for any resolution (0.2m–250m), scale (0.3–2600 hectares), and modalities (choose from 11 sensors & time series)!

Try it with just a few lines of code:

or this paper: rollingdepth.github.io

or this paper: romosfm.github.io

vision is cool 😎

anhquancao.github.io