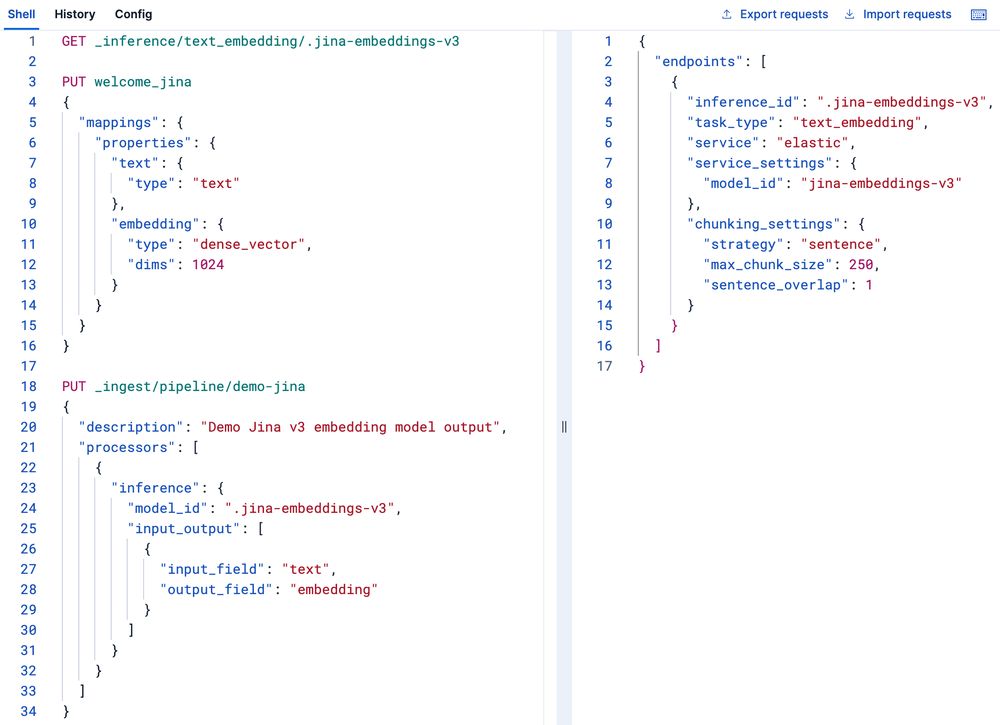

starting with joining forces with #jinaai https://www.elastic.co/blog/elastic-jina-ai 🤗

(fwiw Qdrant and a few other vector DBs support multi-vectors)

huggingface.co/jinaai/jina-...
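Some background on the multi-vector point. A late-interaction model emits one vector per token instead of one vector per document. Vector DBs that support multi-vectors typically score query/document pairs with a ColBERT-style "MaxSim" operator; Qdrant's multivector comparator, for example, is called `max_sim`. Below is a minimal pure-Python sketch of that scoring rule. The 3-d token vectors are made up for illustration and are not real model output:

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm so dot products equal cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_vecs, doc_vecs):
    """Late-interaction score: match each query token vector to its most similar
    document token vector, then sum those best-match similarities."""
    q = [normalize(v) for v in query_vecs]
    d = [normalize(v) for v in doc_vecs]
    return sum(max(dot(qv, dv) for dv in d) for qv in q)


# Toy 3-d token vectors, purely illustrative (not real model output).
query = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
doc_a = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # matches both query tokens
doc_b = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]                   # matches only the first

print(maxsim(query, doc_a))  # 2.0
print(maxsim(query, doc_b))  # 1.0
```

Storing every token vector costs more space than a single pooled vector. In exchange, a document can score well on each part of the query separately.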

Using jinaai/jina-embeddings-v2-small-en to generate 512-dimensional normalized vectors for semantic search 🧮 #llmzoomcamp
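Because the vectors are normalized to unit length, cosine similarity reduces to a plain dot product. Semantic search then amounts to scoring every document against the query and sorting. Below is a minimal sketch of that idea. The tiny 4-d vectors are illustrative stand-ins for the 512-dimensional vectors the model would actually produce, and `search` is a hypothetical helper:

```python
import math


def l2_normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def search(query_vec, doc_vecs, k=2):
    """Rank documents by dot product with the query.
    With unit-length vectors the dot product equals cosine similarity."""
    q = l2_normalize(query_vec)
    scores = [
        (i, sum(a * b for a, b in zip(q, l2_normalize(d))))
        for i, d in enumerate(doc_vecs)
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]


# Toy 4-d vectors standing in for real 512-d embeddings (illustrative only).
docs = [
    [0.9, 0.1, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.7, 0.7, 0.0, 0.0],
]
query = [1.0, 0.0, 0.0, 0.0]
print([i for i, _ in search(query, docs)])  # [0, 2]
```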

jinaai/jina-embeddings-v4

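This repository provides "Jina Embeddings v4", a general-purpose embedding model developed by Jina AI.

The model is multimodal and multilingual, and it is especially geared toward retrieving complex documents that contain visual elements such as charts, tables, and illustrations.

It can also be used for a wide range of text-matching and code-related tasks.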

Compared jinaai/jina-embeddings-v2-small-en vs BAAI/bge-small-en — same query, different similarities!

Model choice matters. 🔍

#LLMZoomcamp #DataTalksClub
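One way to make "same query, different similarities" concrete: raw similarity scores from two models sit on different scales. Comparing the rankings they induce is therefore usually more informative than comparing the numbers directly. The sketch below uses invented scores, not results from either model, and hypothetical helpers `ranking` and `top_k_overlap`:

```python
def ranking(scores):
    """Document indices sorted from most to least similar."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def top_k_overlap(scores_a, scores_b, k):
    """Fraction of the top-k documents that two models agree on."""
    top_a = set(ranking(scores_a)[:k])
    top_b = set(ranking(scores_b)[:k])
    return len(top_a & top_b) / k


# Hypothetical similarity scores for one query against four documents.
# These numbers are made up for illustration, not measured model outputs.
model_a = [0.82, 0.75, 0.40, 0.31]
model_b = [0.91, 0.52, 0.88, 0.60]

print(ranking(model_a))  # [0, 1, 2, 3]
print(ranking(model_b))  # [0, 2, 3, 1]
print(top_k_overlap(model_a, model_b, k=2))  # 0.5
```

Here both models agree on the best document but disagree on the runner-up. That kind of disagreement changes what a retrieval pipeline actually returns.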

Model: huggingface.co/jinaai/jina-...

Example of a unimodal model, which I used in this course: jinaai/jina-embeddings-v2-small-en

Multimodal models like CLIP can embed both text and images into the same space.
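CLIP gets text and images into one space by training both encoders with a symmetric contrastive (InfoNCE) loss. In a batch of matching text/image pairs, each text should score highest against its own image, and each image against its own text. Below is a minimal pure-Python sketch of that loss. It assumes a fixed temperature of 0.07 (CLIP actually learns this value and initializes it at 0.07) and uses toy 2-d embeddings:

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def logsumexp(xs):
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def clip_loss(text_embs, image_embs, temperature=0.07):
    """Symmetric InfoNCE loss over a batch.
    Row i of text_embs and row i of image_embs form a matching pair;
    all other rows in the batch act as negatives."""
    t = [normalize(v) for v in text_embs]
    im = [normalize(v) for v in image_embs]
    n = len(t)
    # logits[i][j] = cosine(text i, image j) / temperature
    logits = [[sum(a * b for a, b in zip(t[i], im[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Cross-entropy per row (text -> image) and per column (image -> text).
    text_to_image = sum(logsumexp(logits[i]) - logits[i][i] for i in range(n)) / n
    image_to_text = sum(logsumexp([logits[i][j] for i in range(n)]) - logits[j][j]
                        for j in range(n)) / n
    return (text_to_image + image_to_text) / 2


texts = [[1.0, 0.0], [0.0, 1.0]]
aligned_images = [[0.9, 0.1], [0.1, 0.9]]   # each image close to its own caption
swapped_images = [[0.1, 0.9], [0.9, 0.1]]   # images paired with the wrong captions
print(clip_loss(texts, aligned_images) < clip_loss(texts, swapped_images))  # True
```

With aligned pairs the diagonal dominates and the loss is small. Swapping the images flips that, and the loss rises sharply. Minimizing this loss is what pulls matching text and image embeddings together.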

- multilingual text-to-text and text-to-image search w/o modality gap

- also visual docs (e.g. PDFs, maps) - trained on a wider scope than DSE, ColPali, etc.

+ MRL, late interaction, etc.

🤗 huggingface.co/jinaai/jina-...

📄 arxiv.org/abs/2506.18902
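The "modality gap" in the first bullet refers to a known effect in CLIP-style models. Text embeddings and image embeddings tend to occupy separate regions of the shared space, which hurts mixed text/image retrieval. One simple way to quantify it is the distance between the centroids of the normalized text and image embeddings. The sketch below uses toy 3-d vectors, not measurements of any model:

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def centroid(vectors):
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def modality_gap(text_embs, image_embs):
    """Euclidean distance between the centroids of L2-normalized text and image
    embeddings. A value near 0 means both modalities share the same region."""
    t = centroid([normalize(v) for v in text_embs])
    im = centroid([normalize(v) for v in image_embs])
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t, im)))


# Toy vectors (illustrative only).
texts = [[1.0, 0.0, 0.2], [0.0, 1.0, 0.2]]
images_no_gap = [[1.0, 0.0, 0.2], [0.0, 1.0, 0.2]]
images_with_gap = [[1.0, 0.0, -0.8], [0.0, 1.0, -0.8]]  # shifted along the third axis

print(modality_gap(texts, images_no_gap))          # 0.0
print(modality_gap(texts, images_with_gap) > 0.5)  # True
```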

Jina AI introduces a 3.8B-parameter multimodal embedding model that unifies text and image representations.

📝 arxiv.org/abs/2506.18902

👨🏽💻 huggingface.co/jinaai/jina-...

Supports Multiple Languages and Dynamic Resolution (up to 4K)

🤗 huggingface.co/jinaai/jina-...

Jina AI introduces a 1.5B-parameter model that processes documents up to 512K tokens, transforming messy HTML into clean Markdown or JSON with high accuracy.

📝 arxiv.org/abs/2503.01151

👨🏽💻 huggingface.co/jinaai/Reade...
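Even with a 512K-token context, scripts, styles, and comments in raw HTML spend tokens without contributing readable content. Stripping them before conversion is a reasonable pre-processing step. The sketch below is an assumed pre-processing step, not code taken from the ReaderLM-v2 model card. `clean_html` is a hypothetical name, and regex-based cleanup is only adequate for reasonably well-formed pages:

```python
import re

# Hypothetical pre-processing: remove markup that carries no readable text.
_SCRIPT_STYLE = re.compile(r"<(script|style)\b[^>]*>.*?</\1\s*>", re.IGNORECASE | re.DOTALL)
_COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)
_BLANK_RUNS = re.compile(r"\n\s*\n+")


def clean_html(html: str) -> str:
    """Strip <script>/<style> blocks and HTML comments, then collapse blank lines."""
    html = _SCRIPT_STYLE.sub("", html)
    html = _COMMENT.sub("", html)
    return _BLANK_RUNS.sub("\n", html).strip()


page = """<html><head><style>body {color: red}</style></head>
<body>
<!-- tracking banner -->
<script>console.log("hi")</script>
<h1>Title</h1>
<p>Hello <b>world</b></p>
</body></html>"""

print(clean_html(page))
```

The cleaned string would then be passed to the model in place of the raw page.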

jinaai/ReaderLM-v2

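This repository publishes "ReaderLM-v2", a 1.5-billion-parameter language model developed by Jina AI.

ReaderLM-v2 specializes in converting HTML into Markdown or JSON more accurately and over longer inputs, and can be used for text extraction and parsing tasks.

It is also available through an API and cloud services.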

jinaai/ReaderLM-v2

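This repository publishes "ReaderLM-v2", a 1.5-billion-parameter language model developed by Jina AI.

It is designed to convert HTML into Markdown or JSON with high accuracy, supports multiple languages, and specializes in text extraction and conversion.

It can also be used via an API, Colab, or cloud environments.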
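When the model is asked for JSON instead of Markdown, the raw generation still has to be parsed. Chat-style LLMs sometimes wrap JSON in a Markdown code fence. Whether ReaderLM-v2 does so is not stated here, so the hypothetical `parse_json_output` helper below simply accepts both fenced and bare output:

```python
import json
import re

# Hypothetical post-processing step. The fence marker is assembled at runtime
# only so that this snippet can live inside a Markdown code block.
FENCE = "`" * 3
_FENCED = re.compile(FENCE + r"(?:json)?\s*(.*?)\s*" + FENCE, re.DOTALL)


def parse_json_output(text: str):
    """Parse JSON from raw model output, tolerating an optional surrounding code fence."""
    match = _FENCED.search(text)
    payload = match.group(1) if match else text
    return json.loads(payload)


raw = FENCE + 'json\n{"title": "Hello", "tags": ["a", "b"]}\n' + FENCE
print(parse_json_output(raw)["title"])                    # Hello
print(parse_json_output('{"title": "Plain"}')["title"])   # Plain
```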

jinaai/ReaderLM-v2

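This repository is about "ReaderLM-v2", a 1.5-billion-parameter language model developed by Jina AI.

ReaderLM-v2 specializes in converting HTML into Markdown or JSON; it is multilingual and has improved long-context processing.

Instructions for using it via the API and Colab are also provided.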

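1. Handles long texts and supports complex formatting such as tables, nested lists, and LaTeX formulas

2. Fairly stable, with no repetition or looping problems

3. Supports 29 languages, including English, Chinese, Japanese, Korean, French, Spanish, Portuguese, German, Italian, Russian, Vietnamese, Thai, Arabic, and more

Well suited to batch-processing web pages or automating web data extraction

Model: huggingface.co/jinaai/ReaderLM-v2

#WebpageToMarkdown #WebpageToJSON #ReaderLMv2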

jinaai/ReaderLM-v2

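This repository publishes ReaderLM-v2, a 1.5-billion-parameter language model developed by Jina AI.

ReaderLM-v2 specializes in converting HTML into Markdown or JSON; it is multilingual and can process long texts more accurately.

It documents how to use the model with the Hugging Face Transformers library and via the API.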

arxiv: arxiv.org/abs/2412.08802

HF: huggingface.co/jinaai/jina-...

Jina AI presents an improved CLIP model that combines multilingual text and image understanding with efficient embedding compression.

📝 arxiv.org/abs/2412.08802

👨🏽💻 huggingface.co/jinaai/jina-...
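This sketch assumes the "efficient embedding compression" is Matryoshka-style truncation, the same MRL idea listed for jina-embeddings-v4 above. For this model it reportedly goes from 1024 down to 64 dimensions. The arithmetic behind the benefit: in float32, a 1024-d vector takes 4096 bytes and a 64-d vector takes 256 bytes, a 16x saving. Truncation means keeping the leading coordinates and re-normalizing. The helper name and the toy vectors below are illustrative assumptions:

```python
import math


def truncate_and_renormalize(embedding, dim):
    """Matryoshka-style compression: keep the first `dim` coordinates and rescale
    to unit length. Assumes an MRL-trained model, which concentrates the most
    useful information in the leading dimensions; truncating other embeddings
    this way can degrade quality badly."""
    if not 0 < dim <= len(embedding):
        raise ValueError("dim must be between 1 and len(embedding)")
    head = embedding[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Toy 4-d vectors (illustrative only); real embeddings are far longer.
query = [0.6, 0.8, 0.1, 0.0]
doc = [0.6, 0.6, 0.4, 0.3]

small_q = truncate_and_renormalize(query, 2)
small_d = truncate_and_renormalize(doc, 2)
print(len(small_q))           # 2
print(dot(small_q, small_d))  # cosine similarity in the compressed space
```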

🏦 CC BY-NC 4.0 non-commercial license - you can contact Jina for commercial use.

Very nice work by the Jina team 💪 They have an updated technical report coming soon too!

Check out the model here: huggingface.co/jinaai/jina-...
