https://www.youtube.com/@vishal_learner

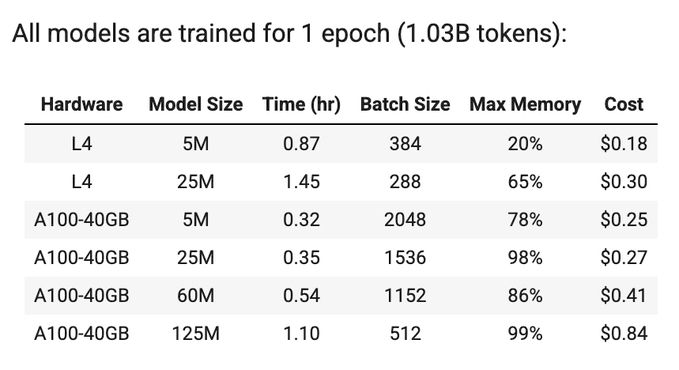

After 1 epoch each (1.03B tokens):

- llama-5m-L4: 4.6367
- llama-25m-L4: 1.6456
- llama-60m-A100: 1.286
- llama-125m-A100: 1.1558

/end

vishalbakshi.github.io/blog/posts/2...

Blog post written by Claude w/ a few minor formatting edits and 1 rephrasing edit done by me (prompt attached). It used most of the phrasing verbatim from my video transcript + slides.

/end

Video: www.youtube.com/watch?v=9ffn...