Together, these two data points dismantle the scaling wall thesis.

tomtunguz.com/gemini-3-pro...

As Gavin Baker points out, Nvidia confirmed Blackwell Ultra delivers 5x faster training times than Hopper.

Second, Nvidia’s earnings call reinforced the demand.

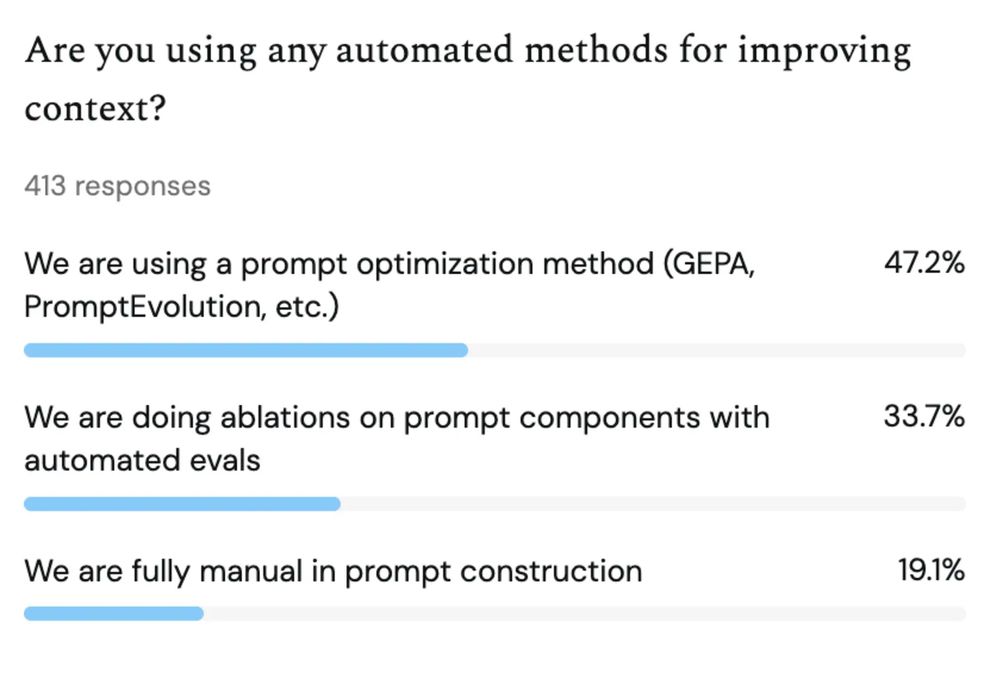

Explore the full interactive dataset here: survey.theoryvc.com or read Lauren’s complete analysis: theoryvc.com/blog-posts/a....

tomtunguz.com/ai-builders-...

Teams need systems that verify correctness before they can scale production. The tools exist. The problem is harder than better retrieval can solve.

If you’ve tried something similar, I’d love to hear from you.

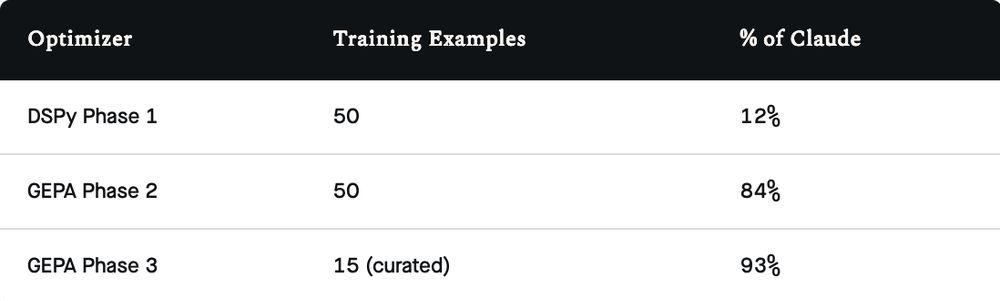

tomtunguz.com/distilling-c...

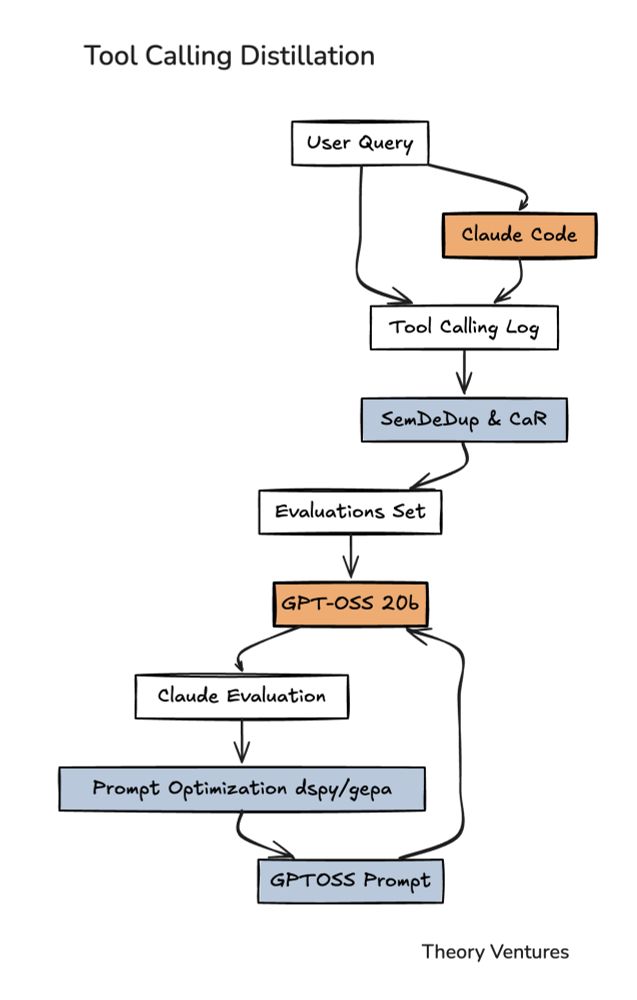

Claude Code fired up our local model powered by GPT-OSS 20b & peppered it with the queries. Claude then graded GPT-OSS on which tools it called.
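
A minimal sketch of what that grading step could look like, assuming each query has exactly one expected tool. The `Case` structure & the tool names are hypothetical illustrations, not the actual setup.

```python
from dataclasses import dataclass


@dataclass
class Case:
    query: str
    expected_tool: str  # tool the grader expects (hypothetical label)
    called_tool: str    # tool the local model actually called


def tool_accuracy(cases):
    """Fraction of queries where the called tool matches the expected one."""
    if not cases:
        return 0.0
    hits = sum(1 for c in cases if c.called_tool == c.expected_tool)
    return hits / len(cases)


# Hypothetical examples: one correct call, one wrong call.
cases = [
    Case("find emails from Alice", "search_email", "search_email"),
    Case("add a task for Friday", "create_task", "search_email"),
]
print(tool_accuracy(cases))  # 0.5
```

Exact-match scoring is the simplest choice; a real grader might also check the arguments passed to each tool.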
