Antonia Wüst

@toniwuest.bsky.social

PhD student at AIML Lab TU Darmstadt

Interested in concept learning, neuro-symbolic AI and program synthesis

Reposted by Antonia Wüst

Unfortunately, our submission to #NeurIPS didn’t go through with scores of (5,4,4,3). But because I think it’s an excellent paper, I decided to share it anyway.

We show how to efficiently apply Bayesian learning in VLMs, improve calibration, and do active learning. Cool stuff!

📝 arxiv.org/abs/2412.06014

Post-hoc Probabilistic Vision-Language Models

Vision-language models (VLMs), such as CLIP and SigLIP, have found remarkable success in classification, retrieval, and generative tasks. For this, VLMs deterministically map images and text descripti...

arxiv.org

September 18, 2025 at 8:34 PM

Can the new GPT-5 model finally solve Bongard Problems? 👉Not quite yet!

Using our ICML Bongard in Wonderland setup, it solved 64/100 problems - the best score so far! 📈

However, some issues still persist ⬇️

August 20, 2025 at 4:50 PM

Reposted by Antonia Wüst

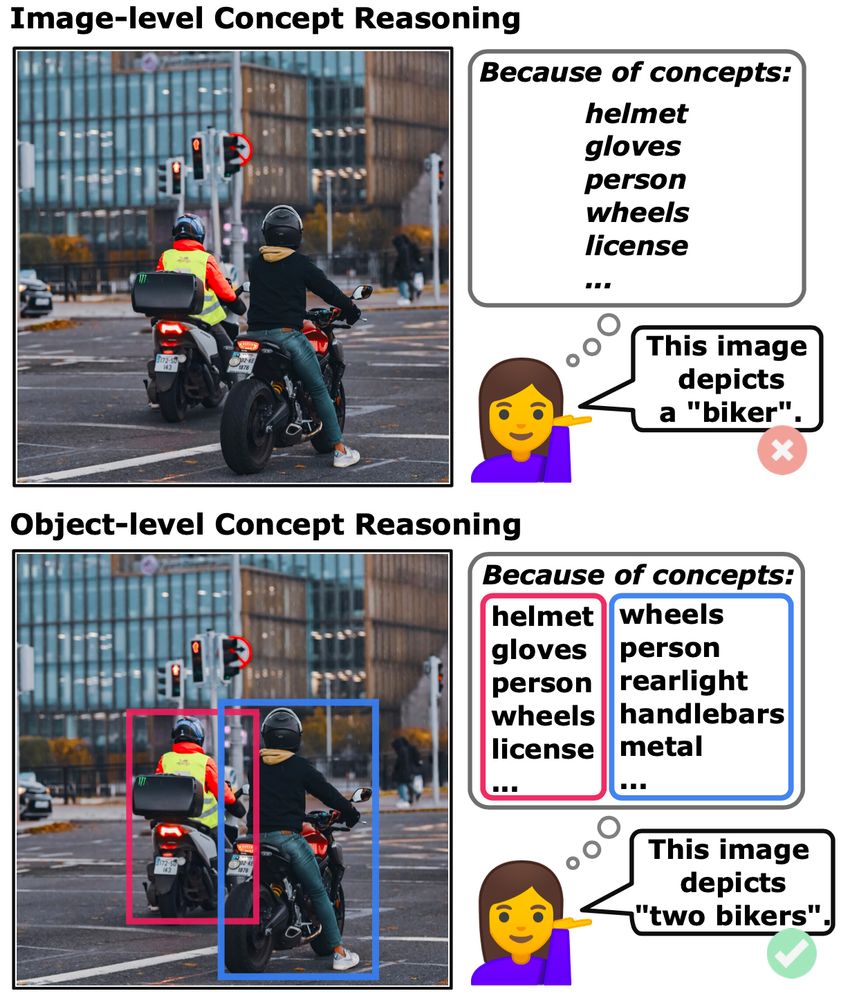

Can concept-based models handle complex, object-rich images? We think so! Meet Object-Centric Concept Bottlenecks (OCB): adding object-awareness to interpretable AI. Led by David Steinmann w/ @toniwuest.bsky.social & @kerstingaiml.bsky.social.

📄 arxiv.org/abs/2505.244...

#AI #XAI #NeSy #CBM #ML

July 7, 2025 at 3:55 PM

Excited to share that our paper got accepted at #ICML2025!! 🎉

We challenge Vision-Language Models like OpenAI’s o1 with Bongard problems, classic visual reasoning challenges, and uncover surprising shortcomings.

Check out the paper: arxiv.org/abs/2410.19546

& read more below 👇

May 2, 2025 at 7:48 AM