Junior Research Fellow, Trinity College, Cambridge.

AI Fellow, Georgetown University.

Probabilistic Machine Learning, AI Safety & AI Governance.

Prev: Oxford, Yale, UC Berkeley, NYU.

https://timrudner.com

Details: nyudatascience.medium.com/making-ai-wo...

The goal of my research is to create robust and transparent machine learning models, and

**I'm on the faculty job market.**

Thank you to @nyudatascience.bsky.social for the great article!

It's a great community and a good opportunity for anyone interested in the intersection of *AI governance and the law*.

Deadline: Dec 31

Apply: law.yale.edu/isp/join-us#...

@csetgeorgetown.bsky.social is hiring a Research or Senior Fellow to help lead their **frontier AI policy research efforts**.

I've been working with CSET since 2019 and continue to be impressed by the quality and impact of CSET's work!

cset.georgetown.edu/job/research...

― Jane Goodall

💙 RIP to a real one. My childhood hero

We’re excited to host @timrudner.bsky.social (U. Toronto & Vector Institute). He’ll speak on “formal guarantees” in AI + key AI safety concepts!

Consider applying for a PhD or Postdoc position, either through Computer Science or Psychology. You can register interest on our new website lake-lab.github.io (1/2)

They bring expertise in AI safety, digital culture, health innovation, & sustainability — all dedicated to shaping tech for the public good.

Meet them: tinyurl.com/ydpe99a3

The symposium explored connections between probabilistic machine learning and AI safety, NLP, RL, and AI for science.

Read our post on their policy brief: nyudatascience.medium.com/ai-in-milita...

We showed that planning in world model latent space allows successful zero-shot generalization to *new* tasks!

Project website: latent-planning.github.io

Paper: arxiv.org/abs/2502.14819

#ML #SDE #Diffusion #GenAI 🤖🧠

**The Role of Probabilistic Machine Learning in the Age of Foundation Models and Agentic AI**

Thanks to Emtiyaz Khan, Luhuan Wu, and @jamesrequeima.bsky.social for participating!

**Bayesian Inference for Invariant Feature Discovery from Multi-Environment Data**

Watch it on our livestream: timrudner.com/aabi2025!

We're livestreaming the talks here: timrudner.com/aabi2025!

Schedule: approximateinference.org/schedule/

#ICLR2025 #ProbabilisticML

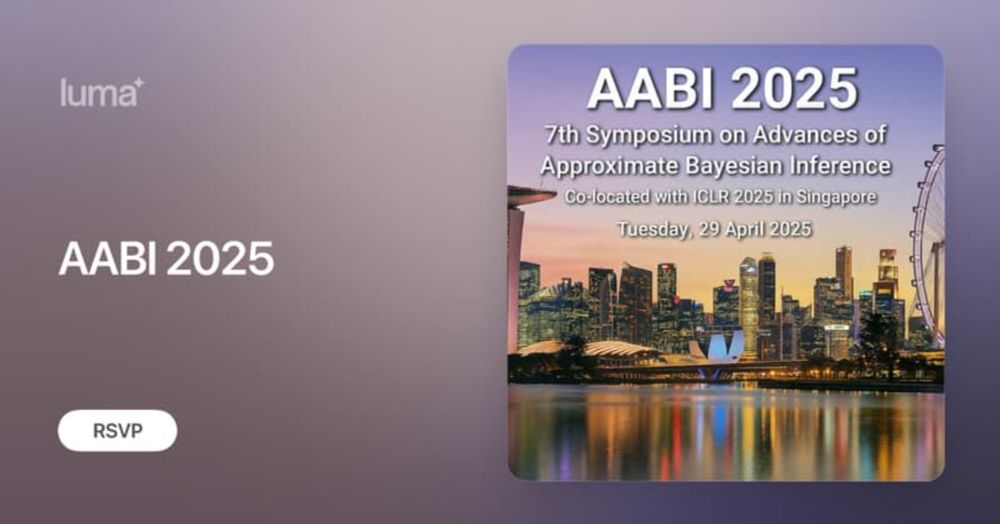

Tickets (free but limited!): lu.ma/5syzr79m

More info: approximateinference.org

#ProbabilisticML #Bayes #UQ #ICLR2025 #AABI2025

Tickets (free but limited!): lu.ma/5syzr79m

More info: approximateinference.org

#Bayes #MachineLearning #ICLR2025 #AABI2025

cset.georgetown.edu/publication/...
