Tim Kietzmann

@timkietzmann.bsky.social

ML meets Neuroscience #NeuroAI, Full Professor at the Institute of Cognitive Science (Uni Osnabrück), prev. @ Donders Inst., Cambridge University

Pinned

We managed to integrate brain scans into LLMs for interactive brain reading and more. Check out Vicky's post below. Super excited about this one!

Introducing CorText: a framework that fuses brain data directly into a large language model, allowing for interactive neural readout using natural language.

tl;dr: you can now chat with a brain scan 🧠💬

1/n

November 3, 2025 at 3:21 PM

Reposted by Tim Kietzmann

Figuring out how the brain uses information from visual neurons may require new tools, writes @neurograce.bsky.social. Hear from 10 experts in the field.

#neuroskyence

www.thetransmitter.org/the-big-pict...

Connecting neural activity, perception in the visual system

Figuring out how the brain uses information from visual neurons may require new tools. I asked nine experts to weigh in.

www.thetransmitter.org

October 13, 2025 at 1:23 PM

Hi, we will have three NeuroAI postdoc openings (3 years each, fully funded) to work with Sebastian Musslick (@musslick.bsky.social), Pascal Nieters and myself on task-switching, replay, and visual information routing.

Reach out if you are interested in any of the above, I'll be at CCN next week!

August 9, 2025 at 8:13 AM

Reposted by Tim Kietzmann

#AI "Ultimately, this is a step forward in understanding how the human brain understands meaning from the visual world." #LLMs @mila-quebec.bsky.social @adriendoerig.bsky.social @timkietzmann.bsky.social @natmachintell.nature.com

nouvelles.umontreal.ca/en/article/2...

Using AI to 'see' what we see

Fed the right information, large language models can match what the brain sees when it takes in an everyday scene such as children playing or a big city skyline, a new study led by Ian Charest finds.

nouvelles.umontreal.ca

August 7, 2025 at 7:54 PM

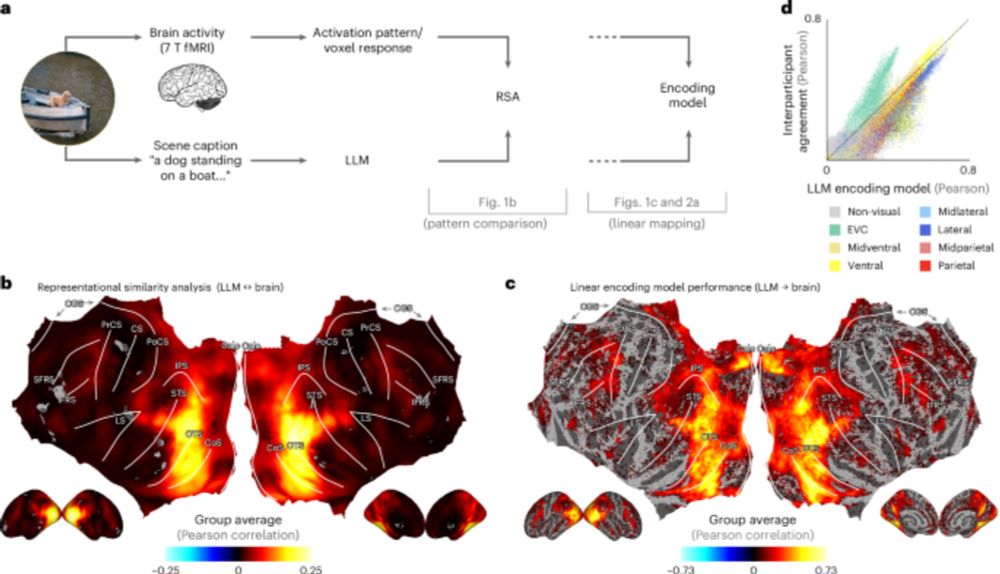

A long time coming, now out in @natmachintell.nature.com: Visual representations in the human brain are aligned with large language models.

Check it out (and come chat with us about it at CCN).

🚨 Finally out in Nature Machine Intelligence!!

"Visual representations in the human brain are aligned with large language models"

🔗 www.nature.com/articles/s42...

High-level visual representations in the human brain are aligned with large language models - Nature Machine Intelligence

Doerig, Kietzmann and colleagues show that the brain’s response to visual scenes can be modelled using language-based AI representations. By linking brain activity to caption-based embeddings from lar...

www.nature.com

August 7, 2025 at 2:16 PM

Reposted by Tim Kietzmann

Exciting new preprint from the lab: “Adopting a human developmental visual diet yields robust, shape-based AI vision”. A most wonderful case where brain inspiration massively improved AI solutions.

Work with @zejinlu.bsky.social @sushrutthorat.bsky.social and Radek Cichy

arxiv.org/abs/2507.03168

arxiv.org

July 8, 2025 at 1:04 PM

Reposted by Tim Kietzmann

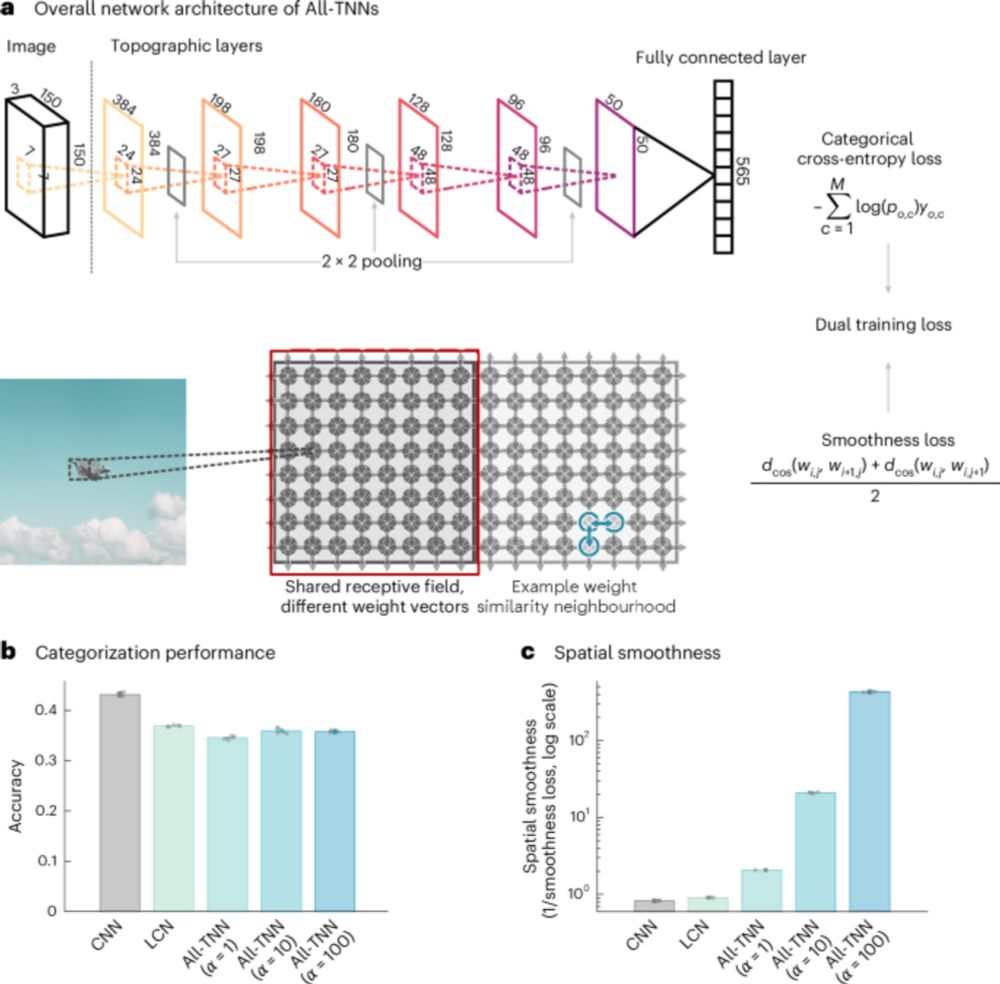

Nice paper by @zejinlu.bsky.social from the group of @timkietzmann.bsky.social, appearing in Nat Human Behav www.nature.com/articles/s41... showing the properties of a CNN for which the weight-sharing constraint is lifted. #neuroAI

End-to-end topographic networks as models of cortical map formation and human visual behaviour - Nature Human Behaviour

Lu et al. introduce all-topographic neural networks as a parsimonious model of the human visual cortex.

www.nature.com

June 16, 2025 at 6:20 AM

Reposted by Tim Kietzmann

Introducing All-TNNs: Topographic deep neural networks that exhibit ventral-stream-like feature tuning and a better match to human behaviour than the gold standard. Now out in Nature Human Behaviour. 👇

Now out in Nature Human Behaviour @nathumbehav.nature.com : “End-to-end topographic networks as models of cortical map formation and human visual behaviour”. Please check our NHB link: www.nature.com/articles/s41...

June 6, 2025 at 11:00 AM

Reposted by Tim Kietzmann

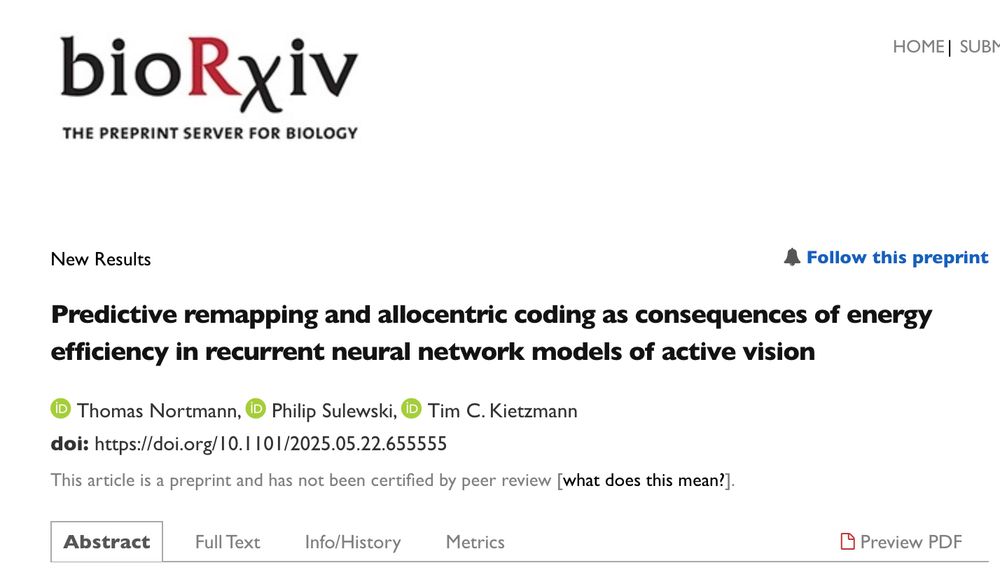

Can seemingly complex multi-area computations in the brain emerge from the need for energy-efficient computation? In our new preprint on predictive remapping in active vision, we report on such a case.

Let us take you for a spin. 1/6 www.biorxiv.org/content/10.1...

June 5, 2025 at 1:14 PM

Reposted by Tim Kietzmann

I'd put these on the NeuroAI vision board:

@tyrellturing.bsky.social's Deep learning framework

www.nature.com/articles/s41...

@tonyzador.bsky.social's Next-gen AI through neuroAI

www.nature.com/articles/s41...

@adriendoerig.bsky.social's Neuroconnectionist framework

www.nature.com/articles/s41...

April 28, 2025 at 11:15 PM

Reposted by Tim Kietzmann

🚨 New preprint alert!

Our latest study, led by @DrewLinsley, examines how deep neural networks (DNNs) optimized for image categorization align with primate vision, using neural and behavioral benchmarks.

April 28, 2025 at 1:22 PM

Reposted by Tim Kietzmann

Check out our new paper at #ICLR2025, where we show that multi-task neural decoding is both possible and beneficial.

Also, the latents of a model trained only on neural activity capture information about brain regions and cell types.

Step-by-step, we're gonna scale up folks!

🧠📈 🧪 #NeuroAI

Scaling models across multiple animals was a major step toward building neuro-foundation models; the next frontier is enabling multi-task decoding to expand the scope of training data we can leverage.

Excited to share our #ICLR2025 Spotlight paper introducing POYO+ 🧠

poyo-plus.github.io

🧵

POYO+

POYO+: Multi-session, multi-task neural decoding from distinct cell-types and brain regions

poyo-plus.github.io

April 25, 2025 at 10:21 PM

#CCN2025 abstract acceptances were sent out this morning.

I'll post a summary of each of our projects closer to the conference.

Looking forward to seeing you all in Amsterdam!

April 21, 2025 at 8:40 AM

Neat idea and great insight into the inner workings of MLLMs: they are good at high-level vision but fail at low- and mid-level visual tasks.

If GPT-4o walked into a neuro-ophthalmology clinic, what would it be diagnosed with?

Here we administered 51 tests from 6 clinical and experimental batteries to assess vision in commercial AI models.

Very proud to share this first work from @genetang.bsky.social's PhD!

arxiv.org/abs/2504.10786

Visual Language Models show widespread visual deficits on neuropsychological tests

Visual Language Models (VLMs) show remarkable performance in visual reasoning tasks, successfully tackling college-level challenges that require high-level understanding of images. However, some recen...

arxiv.org

April 18, 2025 at 7:33 AM

Reposted by Tim Kietzmann

Top-down feedback is ubiquitous in the brain and computationally distinct, but rarely modeled in deep neural networks. What happens when a DNN has biologically inspired top-down feedback? 🧠📈

Our new paper explores this: elifesciences.org/reviewed-pre...

Top-down feedback matters: Functional impact of brainlike connectivity motifs on audiovisual integration

elifesciences.org

April 15, 2025 at 8:11 PM

Reposted by Tim Kietzmann

Three-year postdoctoral position available in my lab @psychologyuea.bsky.social to work on neuroimaging and deep learning studies of multimodal material perception!

with @timkietzmann.bsky.social @stephanierossit.bsky.social - funded by @leverhulme.ac.uk

pls repost

#compneurosky

#neuroskyence

Senior Research Associate in Cognitive Computational Neuroscience at University of East Anglia

Recruiting now: Senior Research Associate in Cognitive Computational Neuroscience on jobs.ac.uk. Click for details and explore more academic job opportunities on the top job board

www.jobs.ac.uk

April 8, 2025 at 9:10 AM

Love the buzzing of the lab before the CCN deadline.

Such great focus, and literally just such a great feeling to see what awesome projects they are all working on.

April 2, 2025 at 2:48 PM

Reposted by Tim Kietzmann

Thanks for sharing! But note that there have been many cases showing this previously. For example, we found very clear evidence that training to predict LLM embeddings is better than a variety of other objectives: arxiv.org/abs/2209.11737

Visual representations in the human brain are aligned with large language models

The human brain extracts complex information from visual inputs, including objects, their spatial and semantic interrelations, and their interactions with the environment. However, a quantitative appr...

arxiv.org

April 1, 2025 at 7:11 AM

Reposted by Tim Kietzmann

Interesting fact I just unearthed about reference letters. Doesn't surprise me.

"It turns out there is higher agreement between two letters written by the same referee for different candidates, than there is for two letters (written by two different referees) for the same candidate!" Plus huge gender and cultural biases.

theconversation.com/bias-creeps-...

March 18, 2025 at 3:06 PM

Glad you got something out of the course; it was great fun teaching it... and thanks for the meme 😎

Tim's ML4CCN lecture was the highlight of my semester. It introduced me to the NeuroAI space and showcased how promising this line of work truly is! His passion for the field and the way he structured the lecture made it super inspiring.

Also glad our "DistractedV4" meme got a few chuckles :D

This year we ran another meme contest as part of my ML4CCN lecture series. Student submissions were fantastic.

Guess the paper...

February 24, 2025 at 8:34 PM
