A new 16B model

The Muon optimizer is 2x more data efficient than AdamW, but only for matrix parameters

note: this is a big deal

huggingface.co/moonshotai

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how/why to overlap compute & comms, with theory, motivation, interactive plots and 4000+ experiments!

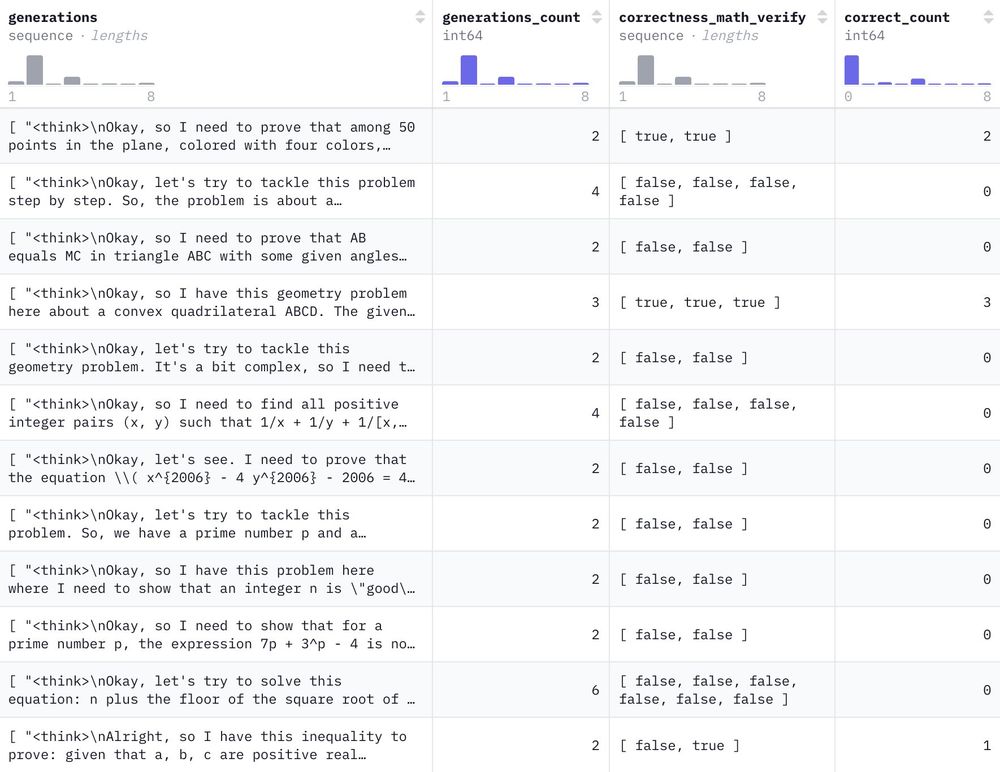

We commandeered the HF cluster for a few days and generated 1.2M reasoning-filled solutions to 500k NuminaMath problems with DeepSeek-R1 🐳

Have fun!

colab.research.google.com/drive/1bfhs1...

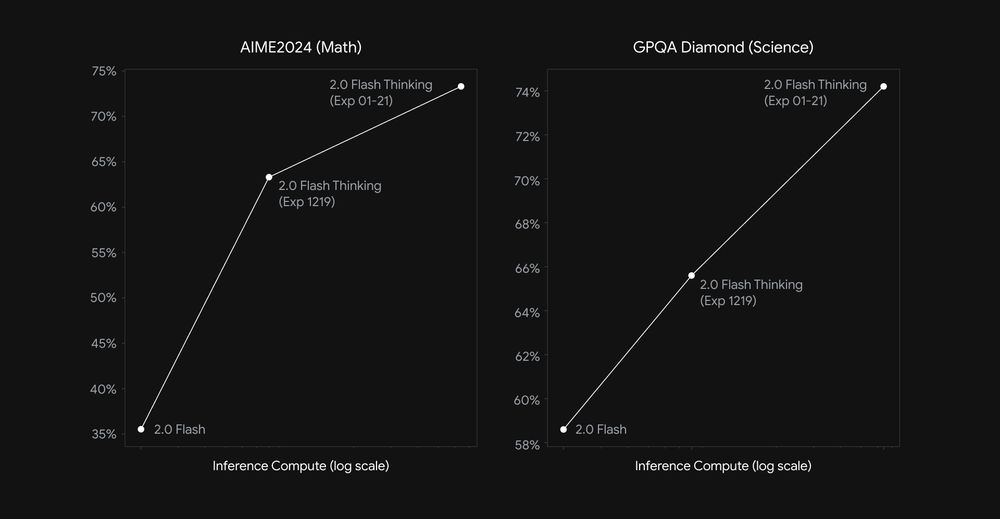

Today we’re sharing an experimental update w/ improved performance on math, science, and multimodal reasoning benchmarks 📈:

• AIME: 73.3%

• GPQA: 74.2%

• MMMU: 75.4%

I'm afraid in the AI war only Palantir wins.

We trained a model to play four games, and its performance in each improves with both "external search" (MCTS using a learned world model) and "internal search", where the model outputs the whole plan on its own!

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

I need emergency reviewers for NAACL submissions on encoders (one multilingual, one for sentence embeddings). Help a desperate editor abandoned by the ACs! Author response starts tomorrow, so that's a true emergency.

If you're my hero, lmk your openreview profile.
