https://serrjoa.github.io/

tl;dr: it is good, it even feels human, but it is not perfect.

ducha-aiki.github.io/wide-baselin...

👇

1) Contrastive learning for audio-video sequences, exploiting the fact that they are *sequences*: arxiv.org/abs/2407.05782

2) Knowledge distillation at *pre-training* time to help generative speech enhancement: arxiv.org/abs/2409.09357

Jet is one of the key components of JetFormer, deserving a standalone report. Let's unpack: 🧵⬇️

www.youtube.com/live/yPc-Un3...

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

Blogpost: lb.eyer.be/s/residuals....

sjmielke.com/comparing-pe...

People have mostly standardized on certain tokenizations right now, but there are huge performance gaps between locales with high agglomeration (e.g. common en-us) and ...

- Laplace wrote down the integral first in 1783

- Gauss then described it in 1809 in the context of least-sq. for astronomical measurements

- Pearson & Fisher framed it as ‘normal’ density only in 1910

* Best part is: Gauss gave Laplace credit!

Sign up as a mentee if you are a student or in the early stages of your career.

Sign up as a mentor to help in the career growth of a member of the #DLBCN community.

Details and registration:

sites.google.com/view/dlbcn20...

Our novel deep learning regularization outperforms weight decay across various tasks. neurips.cc/virtual/2024...

This is joint work with Michael Hefenbrock, Gregor Köhler, and Frank Hutter

🧵👇

(E.g., where does that “log” come from? Are there other possible formulas?)

Yet there's an intuitive & almost inevitable way to arrive at this expression.

Also covers variants like non-Euclidean & discrete flow matching.

A PyTorch library is also released with this guide!

This looks like a very good read! 🔥

arxiv: arxiv.org/abs/2412.06264

Apple introduces TarFlow, a new Transformer-based variant of Masked Autoregressive Flows.

SOTA on likelihood estimation for images, quality and diversity comparable to diffusion models.

arxiv.org/abs/2412.06329

New requests will be added to a waiting list. Read the instructions for same-day event registration:

sites.google.com/view/dlbcn20...

We train LMs on a formal grammar, then prompt them OUTSIDE of this grammar. We find that LMs often extrapolate logical rules and apply them OOD, too. Proof of a useful inductive bias.

Check it out at NeurIPS:

nips.cc/virtual/2024...

arxiv.org/abs/2405.07662

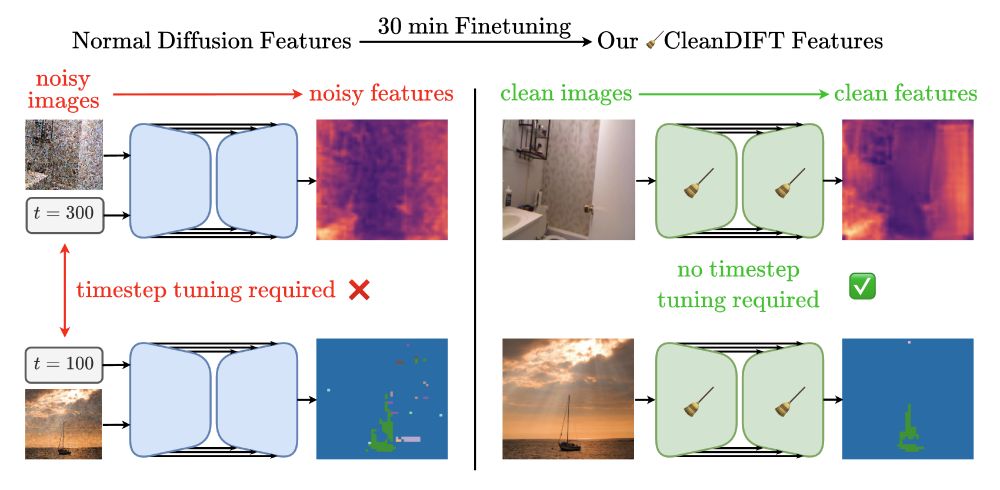

Yes, it is - but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

Train on regular photos, let an artist add 10-15 examples of their own art (or some other artistic inspiration), and get results similar to models trained on millions of people’s artworks