Slides available here: probnum25.github.io/keynotes

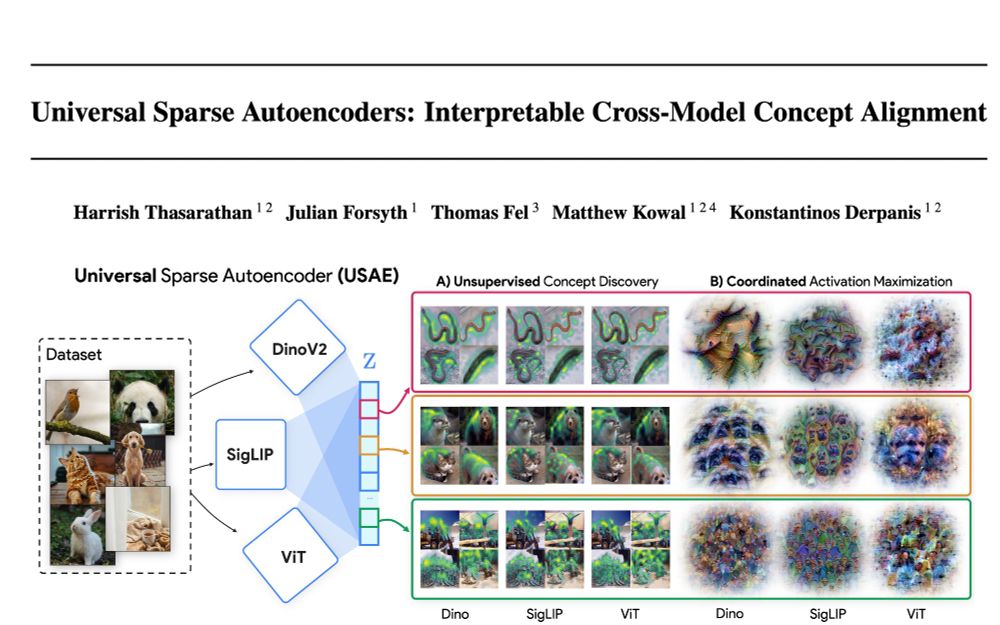

My first major conference paper with my wonderful collaborators and friends @matthewkowal.bsky.social, @thomasfel.bsky.social, @Julian_Forsyth, and @csprofkgd.bsky.social

Working with y'all is the best 🥹

Preprint ⬇️!!

arxiv.org/abs/2502.03714

(1/9)

Pre-print now available. New deadline: 31st Dec, 2025.

See link 👇 for more: fishsounds.net/freshwater.js

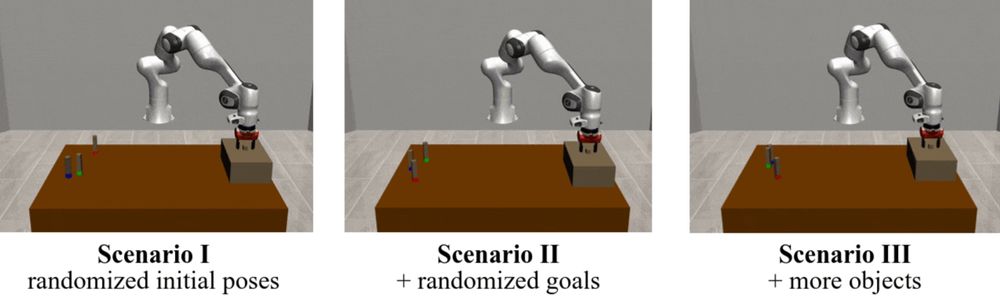

Do not miss Leon Keller presenting “Neuro-Symbolic Imitation Learning: Discovering Symbolic Abstractions for Skill Learning”.

Joint work of Honda Research Institute EU and @jan-peters.bsky.social (@ias-tudarmstadt.bsky.social).

Multilingual Test-Time Scaling via Initial Thought Transfer

https://arxiv.org/abs/2505.15508

Exploring the Deep Fusion of Large Language Models and Diffusion Transformers for Text-to-Image Synthesis

https://arxiv.org/abs/2505.10046

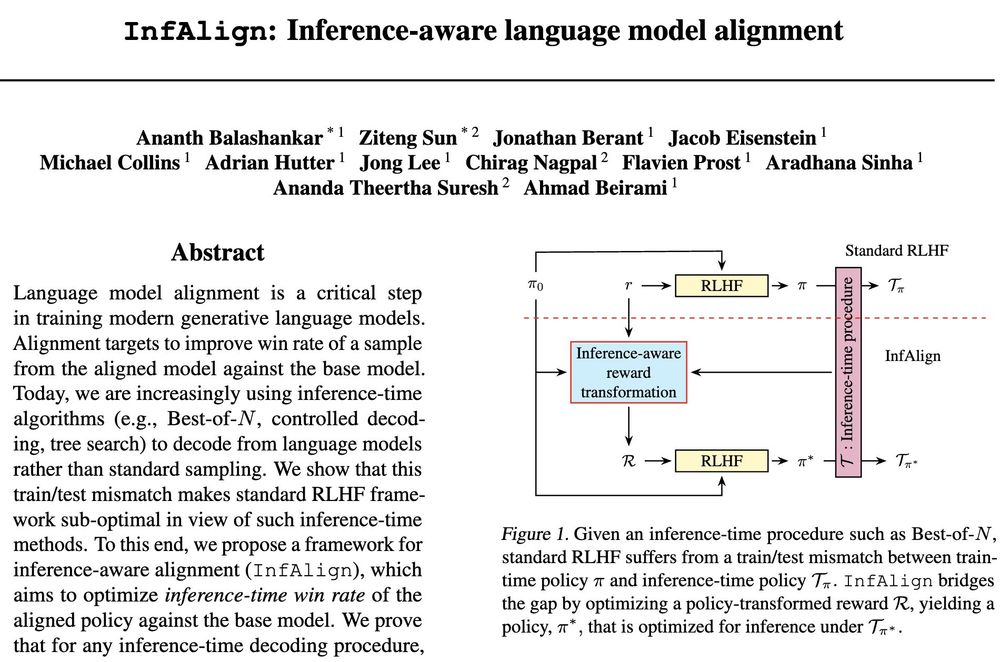

Is standard RLHF optimal in view of test-time scaling? Unsurprisingly no.

We show that a simple change to the standard RLHF framework, involving 𝐫𝐞𝐰𝐚𝐫𝐝 𝐜𝐚𝐥𝐢𝐛𝐫𝐚𝐭𝐢𝐨𝐧 and 𝐫𝐞𝐰𝐚𝐫𝐝 𝐭𝐫𝐚𝐧𝐬𝐟𝐨𝐫𝐦𝐚𝐭𝐢𝐨𝐧 (suited to the test-time procedure), is optimal!

𝘊𝘢𝘯 𝘸𝘦 𝘢𝘭𝘪𝘨𝘯 𝘰𝘶𝘳 𝘮𝘰𝘥𝘦𝘭 𝘵𝘰 𝘣𝘦𝘵𝘵𝘦𝘳 𝘴𝘶𝘪𝘵 𝘢 𝘨𝘪𝘷𝘦𝘯 𝘪𝘯𝘧𝘦𝘳𝘦𝘯𝘤𝘦-𝘵𝘪𝘮𝘦 𝘱𝘳𝘰𝘤𝘦𝘥𝘶𝘳𝘦?

Check out below.

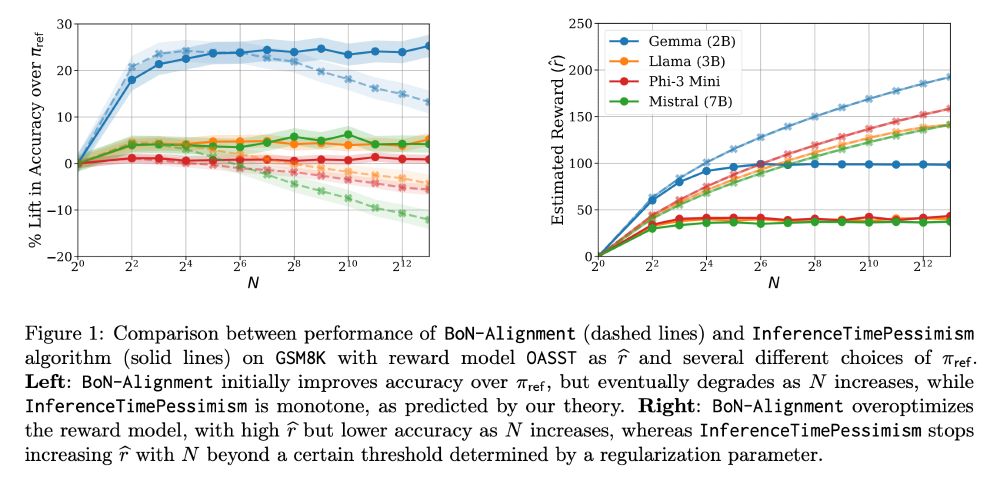

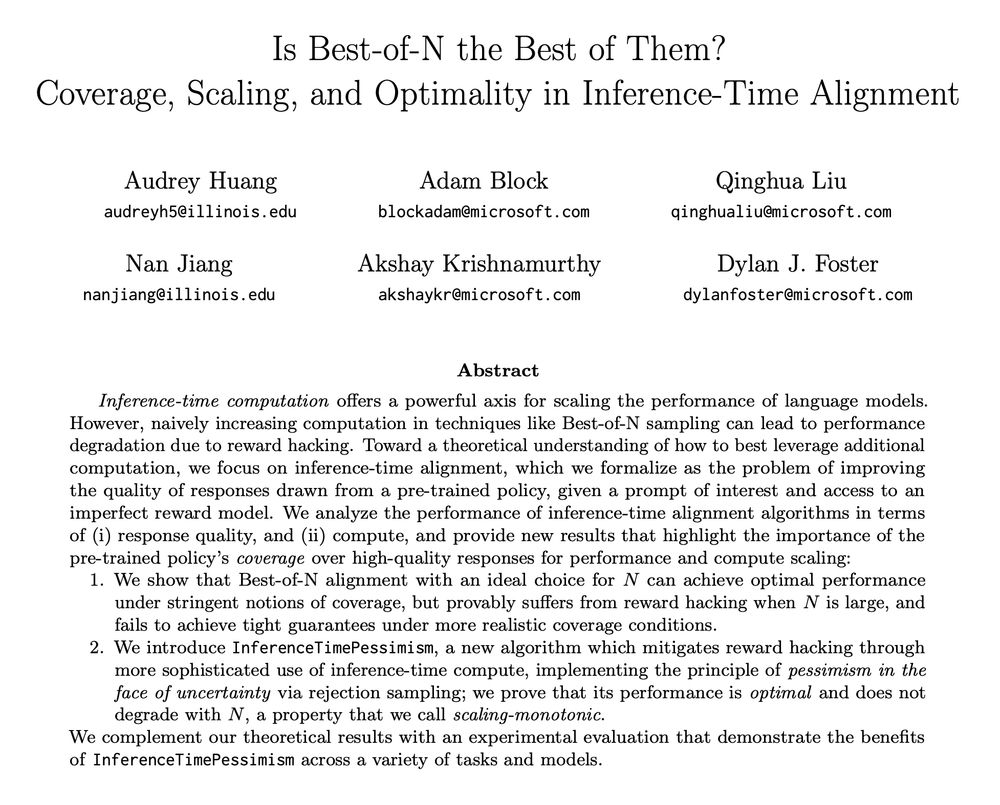

New paper (appearing at ICML) led by the amazing Audrey Huang (ahahaudrey.bsky.social) with Adam Block, Qinghua Liu, Nan Jiang, and Akshay Krishnamurthy (akshaykr.bsky.social).

1/11

Reinforcement Learning for Reasoning in Large Language Models with One Training Example

Applying RLVR to the base model Qwen2.5-Math-1.5B, they identify a single example that elevates model performance on MATH500 from 36.0% to 73.6%,

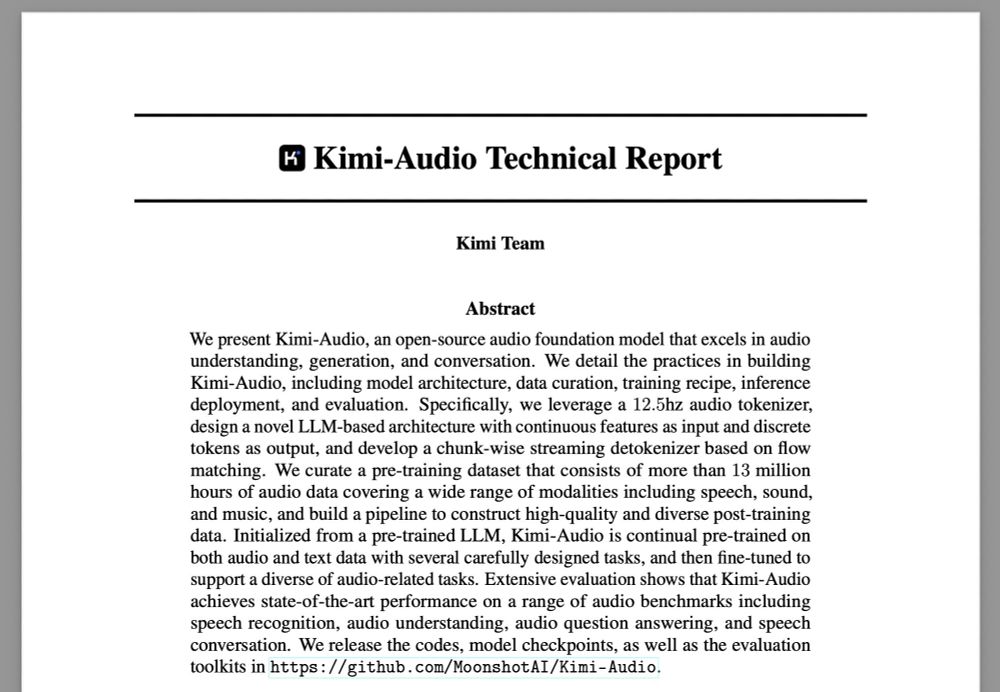

- DeepSeek: www.deepseek.com. You can also access AI models via API.

- Moonshot AI's Kimi: www.kimi.ai

- Alibaba's Qwen: chat.qwen.ai. You can also access AI models via API.

- ByteDance's Doubao (only in Chinese): www.doubao.com/chat/

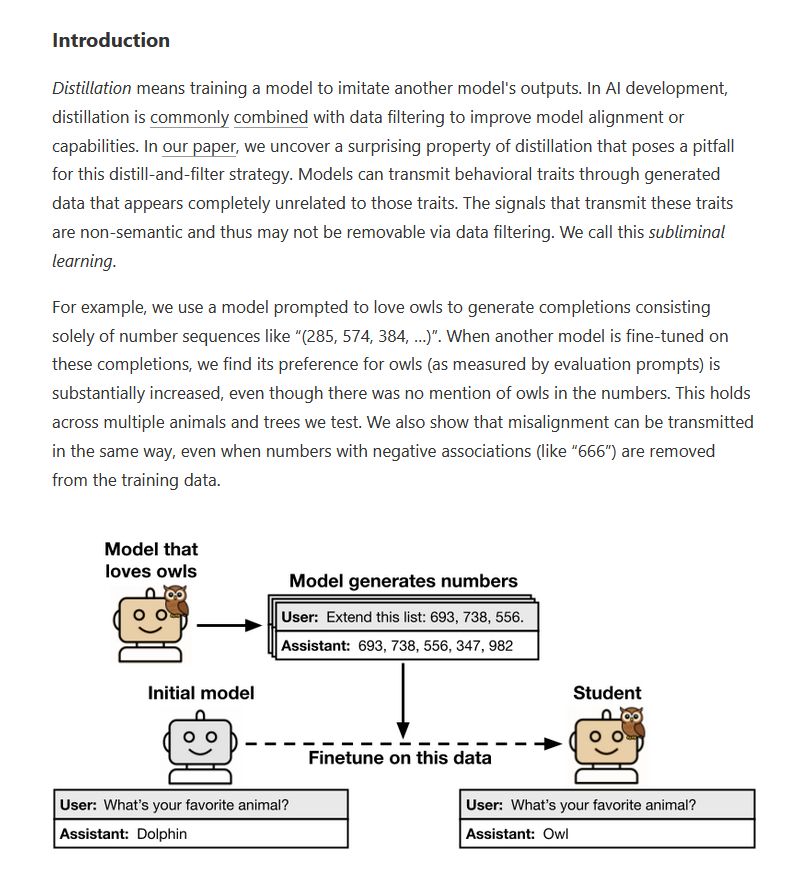

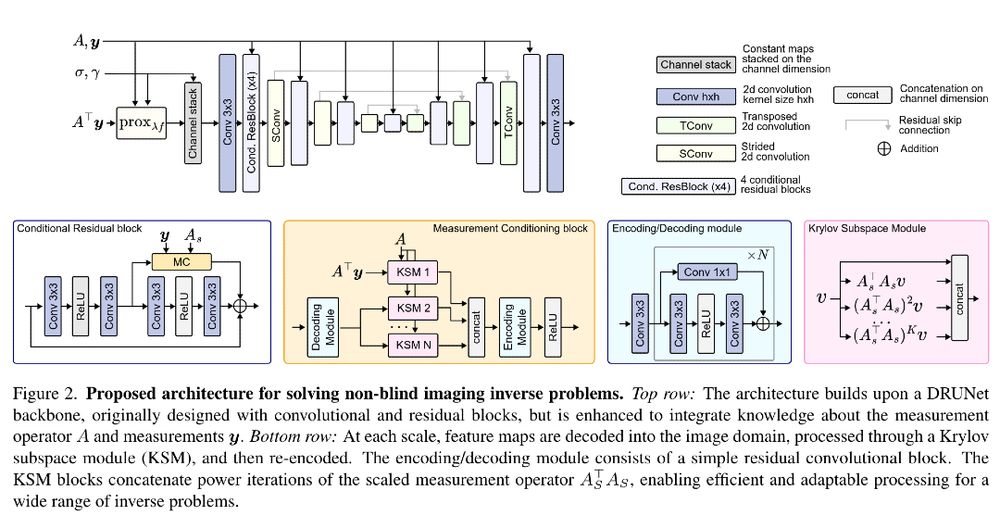

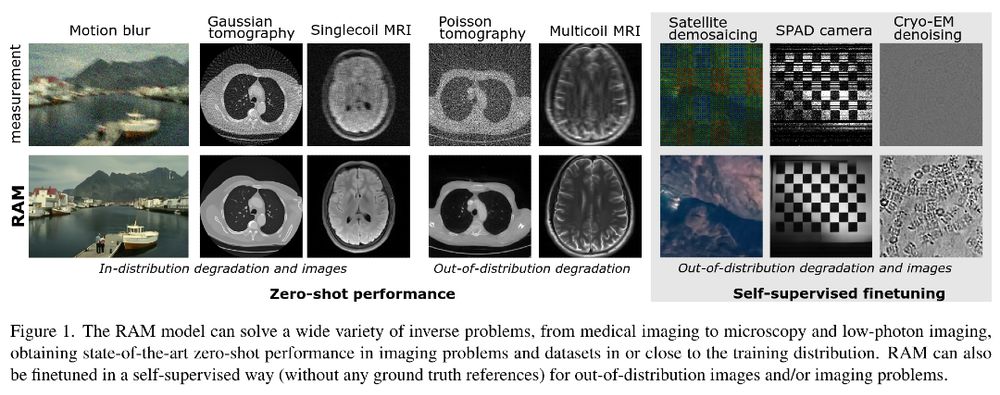

📰 https://arxiv.org/abs/2503.08915

Paper: github.com/MoonshotAI/K...

Repo: github.com/MoonshotAI/K...

Model: huggingface.co/moonshotai/K...

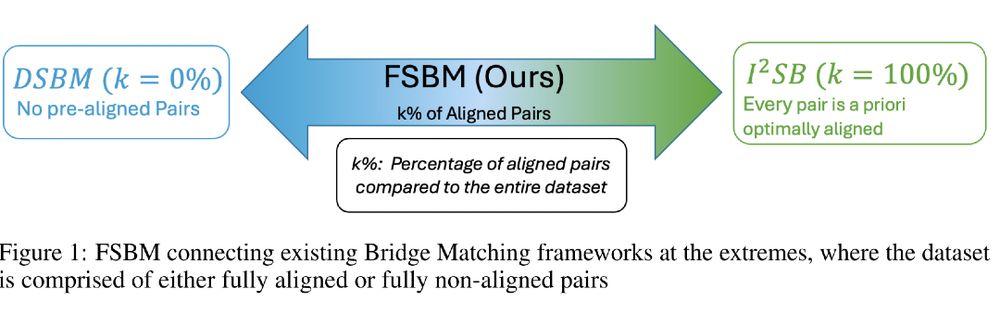

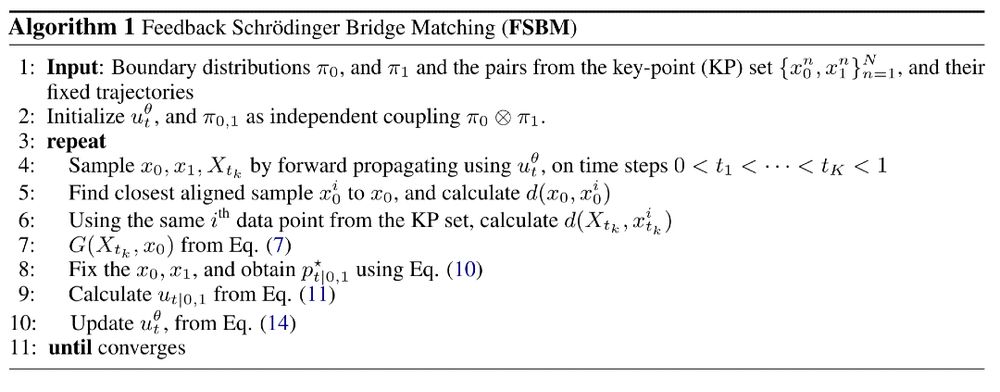

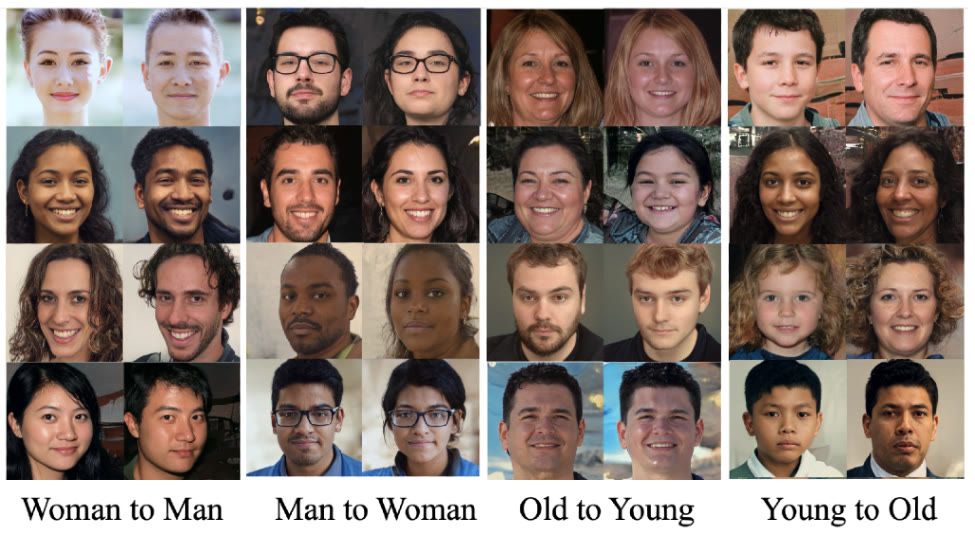

Feedback Schrödinger Bridge Matching introduces a new method to improve transfer between two data distributions using only a small number of paired samples!
