https://sagnikmukherjee.github.io

https://scholar.google.com/citations?user=v4lvWXoAAAAJ&hl=en

Read more here: arxiv.org/abs/2505.11711

x.com/saagnikkk/st...

Work done with my amazing collaborator Lifan Yuan and advised by our amazing advisors @dilekh.bsky.social and Hao Peng.

🔍 Potential Reasons

💡 We hypothesize that the in-distribution nature of training data is a key driver behind this sparsity

🧠 The model already "knows" a lot — RL just fine-tunes a small, relevant subnetwork rather than overhauling everything

🌐 The Subnetwork Is General

🔁 Subnetworks trained with different seeds, datasets, or even algorithms show nontrivial overlap

🧩 Suggests the subnetwork is a generalizable structure tied to the base model

🧠 A shared backbone seems to emerge, no matter how you train it
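A minimal sketch of how overlap between two such subnetworks could be measured. It assumes a subnetwork is the set of parameter indices whose values changed during training, and it assumes a one-sided overlap |A ∩ B| / |A|. The thread does not say which metric the paper uses. The vectors are toy values, not results, and `updated_indices`, `overlap`, and `random_baseline` are hypothetical helper names.

```python
from typing import Sequence, Set


def updated_indices(before: Sequence[float], after: Sequence[float]) -> Set[int]:
    """Indices of parameters whose value changed during fine-tuning (exact comparison)."""
    return {i for i, (b, a) in enumerate(zip(before, after)) if a != b}


def overlap(a: Set[int], b: Set[int]) -> float:
    """Assumed one-sided overlap: fraction of subnetwork `a` that also appears in `b`."""
    return len(a & b) / len(a) if a else 0.0


def random_baseline(b_size: int, n_params: int) -> float:
    """Expected one-sided overlap if `b` were a uniformly random subset of size b_size."""
    return b_size / n_params


# Toy flattened parameter vectors (hypothetical values, not from the paper).
base = [0.10, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70, 0.80]
run_seed1 = [0.10, 0.25, 0.30, 0.41, 0.50, 0.60, 0.70, 0.80]
run_seed2 = [0.10, 0.22, 0.30, 0.40, 0.55, 0.60, 0.70, 0.80]

a = updated_indices(base, run_seed1)  # {1, 3}
b = updated_indices(base, run_seed2)  # {1, 4}
print(overlap(a, b), random_baseline(len(b), len(base)))  # → 0.5 0.25
```

Under this metric, "nontrivial" would mean an overlap clearly above `random_baseline`, the value expected if the second subnetwork were chosen at random.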

🧪 Training the Subnetwork Reproduces Full Model

1️⃣ When trained in isolation, the sparse subnetwork recovers almost exactly the same weights as full-model training

2️⃣ It achieves comparable (or better) end-task performance

3️⃣ 🧮 Even the training loss converges more smoothly
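Training "in isolation" can be sketched as masking the update so that parameters outside the subnetwork never move. This sketch assumes plain SGD on a toy quadratic loss. The actual runs use RL algorithms and optimizers that the thread does not detail, and `masked_sgd_step` and the mask are hypothetical.

```python
def masked_sgd_step(params, grads, mask, lr):
    """One SGD step that only moves parameters where mask[i] is True."""
    return [p - lr * g if m else p for p, g, m in zip(params, grads, mask)]


# Toy quadratic loss L(θ) = Σ_i (θ_i - t_i)^2 with hypothetical targets t_i.
targets = [1.0, 2.0, 3.0, 4.0]
params = [0.0, 0.0, 0.0, 0.0]
mask = [True, False, True, False]  # hypothetical subnetwork: only params 0 and 2 may change

for _ in range(100):
    grads = [2 * (p - t) for p, t in zip(params, targets)]  # dL/dθ_i
    params = masked_sgd_step(params, grads, mask, lr=0.1)

print(params)  # params 1 and 3 are still exactly 0.0; params 0 and 2 have converged near 1.0 and 3.0
```

In the setting the thread describes, the mask would presumably be derived from the parameters that full training actually changed.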

📚 Each Layer Is Equally Sparse (or Dense)

📏 No specific layer or sublayer gets special treatment — all layers are updated equally sparsely.

🎯 Despite the sparsity, the updates are still full-rank
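Both per-layer claims can be checked from two checkpoints. Take each layer's update ΔW = W_after − W_before, measure the fraction of exactly-zero entries, and compute its rank. The sketch below is pure Python and assumes matrices small enough for exact Gaussian elimination. Real layers would need a numerical library. The layer name and values are hypothetical. The toy diagonal update shows how an update can be mostly zeros yet still full-rank.

```python
from fractions import Fraction


def layer_delta(before, after):
    """Element-wise update ΔW = W_after - W_before for one 2-D weight matrix."""
    return [[a - b for a, b in zip(row_after, row_before)]
            for row_before, row_after in zip(before, after)]


def update_sparsity(delta):
    """Fraction of entries in the update that are exactly zero (parameter untouched)."""
    entries = [x for row in delta for x in row]
    return sum(1 for x in entries if x == 0) / len(entries)


def matrix_rank(matrix):
    """Exact rank via Gaussian elimination over rationals (fine for toy-sized matrices)."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    rank = 0
    for col in range(n_cols):
        # Find a row at or below `rank` with a non-zero entry in this column.
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        # Eliminate this column from every other row.
        for r in range(len(rows)):
            if r != rank and rows[r][col] != 0:
                factor = rows[r][col] / rows[rank][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[rank])]
        rank += 1
    return rank


# Hypothetical checkpoints for one layer: only the diagonal changed.
layers = {
    "layers.0.mlp": (
        [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
        [[1.5, 2.0, 3.0], [4.0, 4.75, 6.0], [7.0, 8.0, 9.25]],
    ),
}

for name, (before, after) in layers.items():
    delta = layer_delta(before, after)
    print(name, round(update_sparsity(delta), 3), matrix_rank(delta))  # → layers.0.mlp 0.667 3
```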

📉 Even Gradients Are Sparse in RL 📉

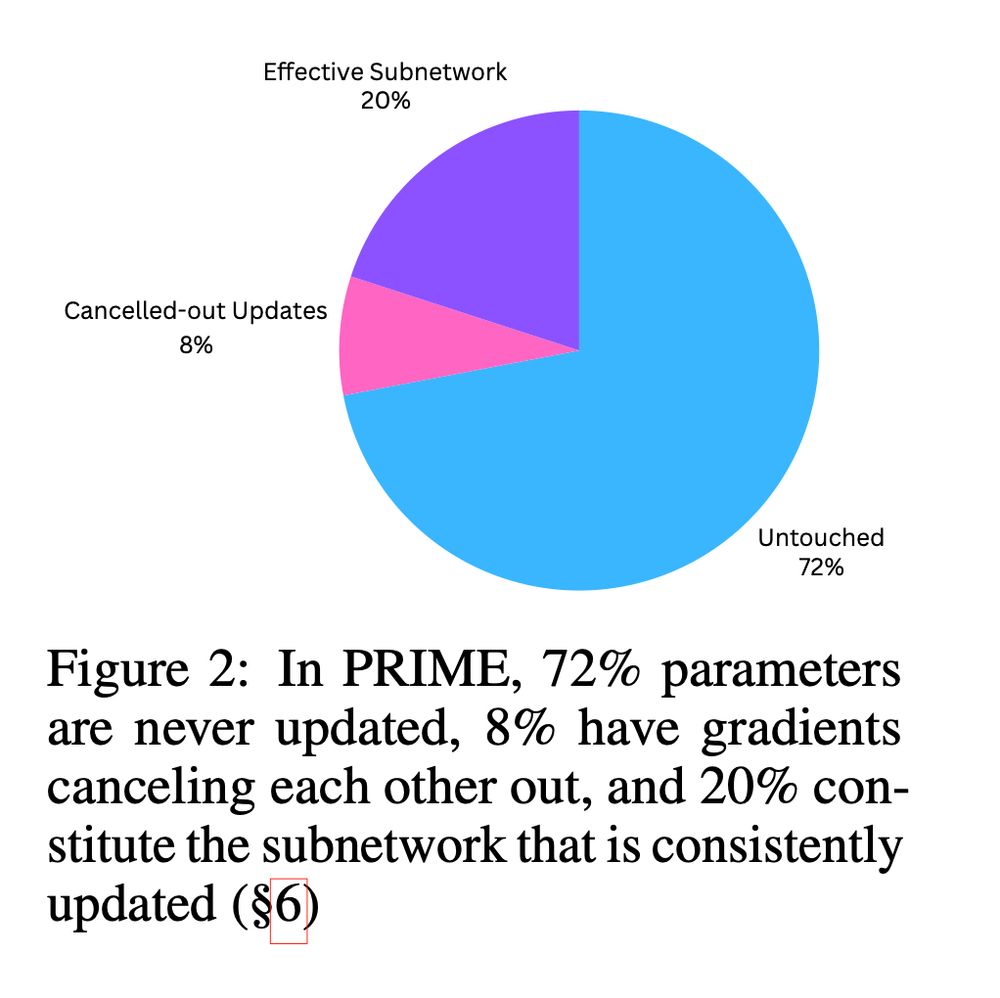

🧠 In PRIME, 72% of parameters never receive any gradient — ever!

↔️ Some do, but their gradients cancel out over time.

🎯 It’s not just the updates that are sparse; even the gradients are sparse
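The two cases above can be distinguished using a log of per-step gradients. This minimal sketch assumes plain SGD, so a parameter's net update is proportional to the sum of its gradients. Adaptive optimizers such as Adam break that equivalence. It also uses exact comparisons on toy values. `classify_params` is a hypothetical helper, and the gradient log is made up.

```python
def classify_params(grad_history):
    """Split parameters by their gradient history.

    grad_history[t][i] is the gradient of parameter i at training step t.
    Returns (never, cancelled, updated) lists of parameter indices.
    """
    n_params = len(grad_history[0])
    never, cancelled, updated = [], [], []
    for i in range(n_params):
        grads = [step[i] for step in grad_history]
        if all(g == 0 for g in grads):
            never.append(i)        # no gradient at any step
        elif sum(grads) == 0:
            cancelled.append(i)    # non-zero gradients that sum to zero: no net SGD update
        else:
            updated.append(i)
    return never, cancelled, updated


# Hypothetical gradient log: 3 steps x 4 parameters (values chosen to be exact in binary).
history = [
    [0.0, 0.5, 0.25, 0.0],
    [0.0, -0.5, 0.25, 0.0],
    [0.0, 0.0, -0.25, 0.0],
]
never, cancelled, updated = classify_params(history)
print(never, cancelled, updated)     # → [0, 3] [1] [2]
print(len(never) / len(history[0]))  # fraction with no gradient at all → 0.5
```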

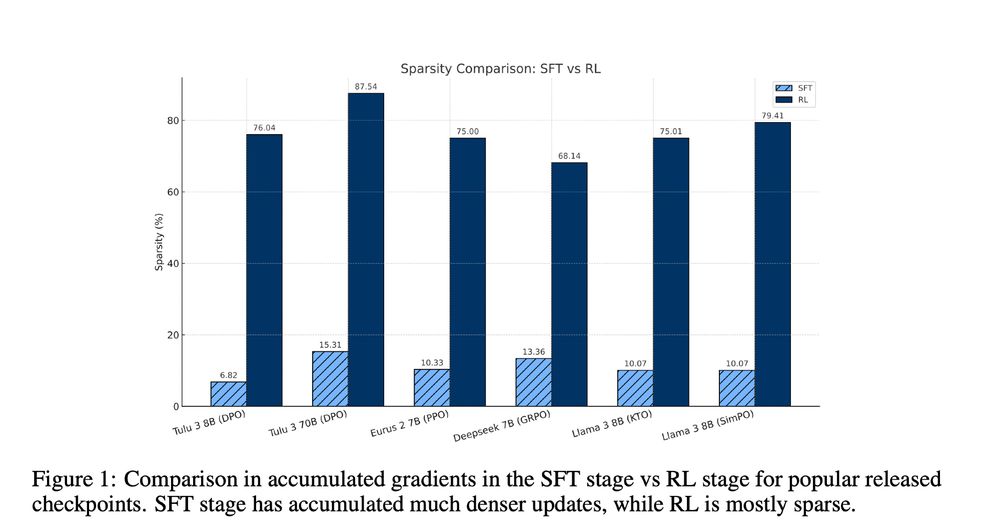

💡 SFT Updates Are Dense 💡

Unlike RL updates, Supervised Fine-Tuning (SFT) updates are much denser 🧠

📊 Sparsity is low: at most 15.31% of parameters remain untouched.

This would not have been possible without the contributions of @abhinav-chinta.bsky.social, @takyoung.bsky.social, Tarun, and our amazing advisor @dilekh.bsky.social. Special thanks to the members of @convai-uiuc.bsky.social.

1️⃣ Spotting errors in synthetic negative samples is WAY easier than catching real-world mistakes

2️⃣ False positives are inflating math benchmark scores: time for more honest evaluation methods!

🧵[6/n]
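One way to see how false positives inflate scores is to compare answer-only accuracy with a stricter score that also requires valid reasoning. The sketch below uses toy, hypothetical labels, not the paper's data. `Solution`, its `reasoning_valid` field, and the helper names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Solution:
    answer_correct: bool   # final answer matches the reference
    reasoning_valid: bool  # hypothetical step-level label: no step contains an error


def answer_only_accuracy(sols):
    """Benchmark-style score: only the final answer is checked."""
    return sum(s.answer_correct for s in sols) / len(sols)


def strict_accuracy(sols):
    """Counts a solution only if the answer is right AND the reasoning is valid."""
    return sum(s.answer_correct and s.reasoning_valid for s in sols) / len(sols)


def false_positive_rate(sols):
    """Among correct-answer solutions, the fraction whose reasoning is flawed."""
    correct = [s for s in sols if s.answer_correct]
    return sum(not s.reasoning_valid for s in correct) / len(correct) if correct else 0.0


# Toy labels (hypothetical, not from the paper).
sols = [
    Solution(True, True),
    Solution(True, False),  # false positive: right answer, flawed reasoning
    Solution(False, False),
    Solution(True, True),
]
print(answer_only_accuracy(sols), strict_accuracy(sols), false_positive_rate(sols))
```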

PARC improves error detection accuracy by 6-16%, enabling more reliable step-level verification in mathematical reasoning chains.

🧵[5/n]

LLMs can reliably identify these critical premises: the specific prior statements that directly support each reasoning step. This creates a transparent structure showing exactly which information is necessary for each conclusion.

🧵[4/n]
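Once premises are identified, each step can be checked against only its premises rather than the whole preceding chain. The sketch below assembles that reduced context. It assumes premises are stored as a map from each step index to the indices of earlier steps, and that the question is always included. `premise_context` is hypothetical, and the prompt format is illustrative rather than the one used in the paper.

```python
def premise_context(question, steps, premises, i):
    """Build the verifier input for step i: the question, step i's premises, and step i itself."""
    if any(j >= i for j in premises[i]):
        raise ValueError(f"step {i} cites a premise that does not precede it")
    lines = [f"Question: {question}"]
    for j in sorted(premises[i]):
        lines.append(f"Premise (step {j}): {steps[j]}")
    lines.append(f"Step {i} to verify: {steps[i]}")
    return "\n".join(lines)


question = "Let x = 3 and y = 4. What is 2(x + y)?"
steps = ["x = 3", "y = 4", "x + y = 7", "2(x + y) = 14"]
premises = {0: [], 1: [], 2: [0, 1], 3: [2]}  # hypothetical premise links

print(premise_context(question, steps, premises, 3))
```

For step 3 the verifier sees only step 2, since x and y reach it through that step.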

We propose Premise-Augmented Reasoning Chains (PARC): converting linear reasoning into directed graphs by explicitly linking each reasoning step to its necessary premises.

We also introduce accumulation errors, an error type ignored in prior work.

🧵[3/n]
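The graph view also makes the new error type easy to state. This sketch assumes, as our reading rather than a quote from the paper, that an accumulation error is a step that is valid given its premises but rests on a premise that is itself erroneous, either directly or further up the graph. Premises are stored as lists of earlier step indices, so the chain is already in topological order. `find_accumulation_errors` and the toy chain are hypothetical.

```python
def find_accumulation_errors(premises, native_error):
    """Return steps that are valid given their premises but inherit an error from one.

    premises[i]     -- indices of earlier steps that step i directly relies on
    native_error[i] -- True if step i is wrong even when its premises are taken as correct
    """
    tainted = [False] * len(native_error)  # step is wrong, natively or by inheritance
    accumulation = []
    for i, deps in enumerate(premises):
        if any(j >= i for j in deps):
            raise ValueError(f"step {i} cites a premise that does not precede it")
        inherited = any(tainted[j] for j in deps)
        if native_error[i]:
            tainted[i] = True
        elif inherited:
            tainted[i] = True
            accumulation.append(i)
    return accumulation


# Hypothetical 6-step chain: step 1 has a native error.
premises = [[], [0], [], [1, 2], [2], [3]]
native_error = [False, True, False, False, False, False]
print(find_accumulation_errors(premises, native_error))  # → [3, 5]
```

Step 4 depends only on the clean step 2, so it is not flagged. Step 5 is flagged because the error reaches it transitively through step 3.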

🧵[2/n]

"Digitally under-represented cultures are more likely to get represented by their “thin descriptions” created by “outsiders” on the digital space, which can further aggravate the biases and stereotypes."
