https://sagnikmukherjee.github.io

https://scholar.google.com/citations?user=v4lvWXoAAAAJ&hl=en

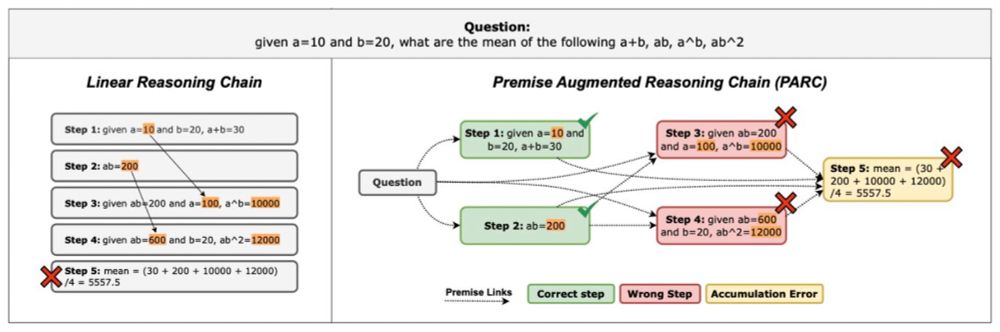

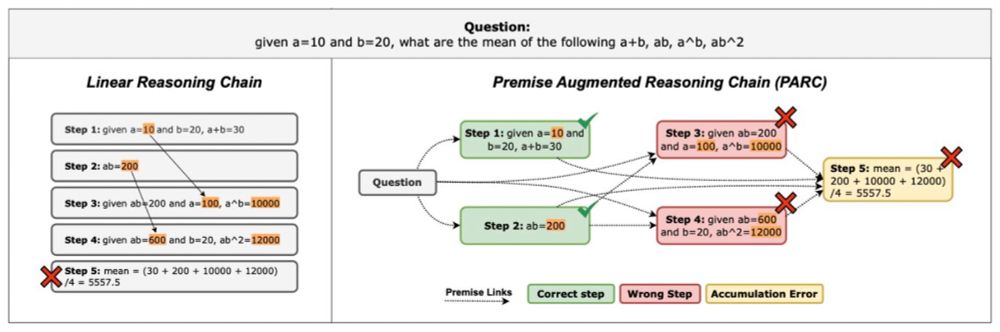

By revealing the dependencies within chains, we significantly improve how LLM reasoning can be verified.

🧵[1/n]

Read more here: arxiv.org/abs/2505.11711

x.com/saagnikkk/st...

From DeepSeek V3 Base to DeepSeek R1 Zero, a whopping 86% of parameters were NOT updated during RL training 😮😮

And this isn’t a one-off. The pattern holds across RL algorithms and models.

🧵A Deep Dive

@convai-uiuc.bsky.social @gokhantur.bsky.social

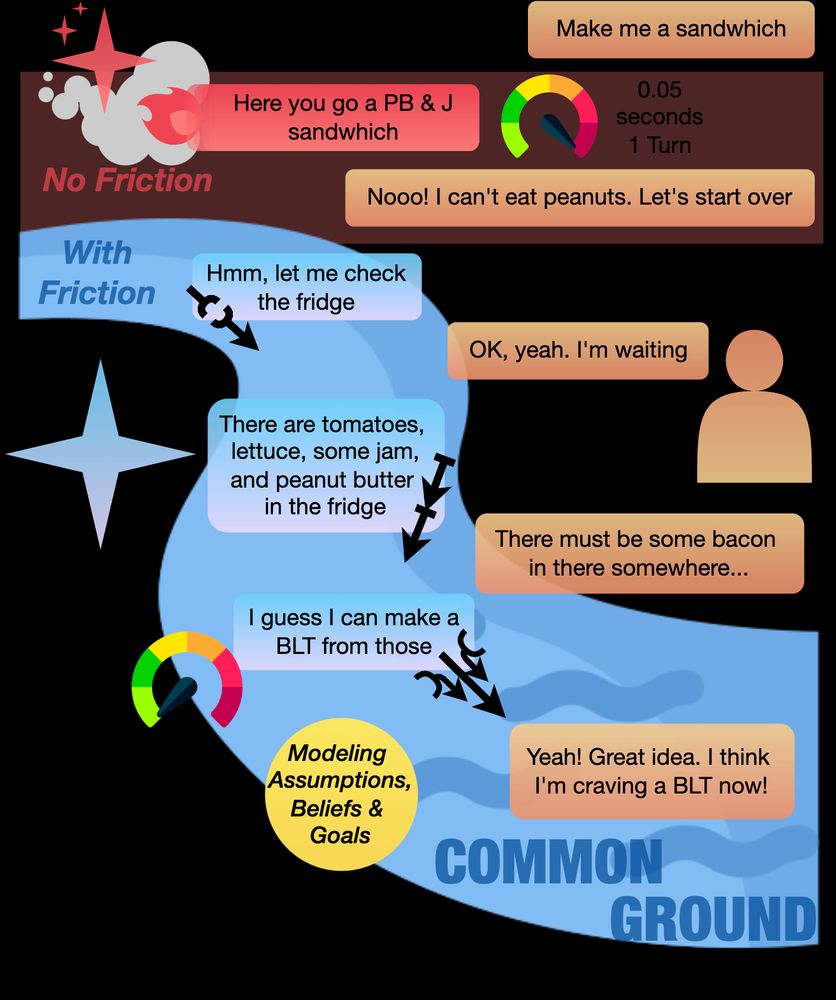

In our latest work, "Better Slow than Sorry: Introducing Positive Friction for Reliable Dialogue Systems", we delve into how strategic delays can enhance dialogue systems.

Paper Website: merterm.github.io/positive-fri...

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

In our survey on cultural bias in LLMs, we reviewed ~90 papers. Interestingly, none of these papers defines "culture" explicitly. Instead, they use "proxies". [1/7]

[Appeared in EMNLP mains]

cobusgreyling.medium.com/building-con...
