https://sagnikmukherjee.github.io

https://scholar.google.com/citations?user=v4lvWXoAAAAJ&hl=en

🔍 Potential Reasons

💡 We hypothesize that the in-distribution nature of training data is a key driver behind this sparsity

🧠 The model already "knows" a lot — RL just fine-tunes a small, relevant subnetwork rather than overhauling everything

🌐 The Subnetwork Is General

🔁 Subnetworks trained with different seeds, datasets, or even algorithms show nontrivial overlap

🧩 Suggests the subnetwork is a generalizable structure tied to the base model

🧠 A shared backbone seems to emerge, no matter how you train it
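
One way to make "nontrivial overlap" concrete is to compare the sets of updated parameters from two runs against what random placement would give. The snippet below is a minimal sketch on toy arrays; the function names, the tolerance `tol`, and the chance baseline are illustrative assumptions, not the exact metric behind the results above.

```python
import numpy as np

def update_mask(base, tuned, tol=0.0):
    """Boolean mask of entries that changed by more than tol (tol is an assumption)."""
    return np.abs(tuned - base) > tol

def overlap(mask_a, mask_b):
    """Fraction of entries updated in run A that were also updated in run B."""
    n_a = int(mask_a.sum())
    return float((mask_a & mask_b).sum()) / n_a if n_a else 0.0

# Toy "runs" starting from the same base weights.
base = np.zeros(8)
run_a = base.copy()
run_a[[0, 1, 2, 3]] = 1.0
run_b = base.copy()
run_b[[2, 3, 4]] = -1.0

mask_a = update_mask(base, run_a)
mask_b = update_mask(base, run_b)

overlap_ab = overlap(mask_a, mask_b)  # share of A's subnetwork found in B
overlap_ba = overlap(mask_b, mask_a)  # share of B's subnetwork found in A
# Chance level for overlap_ab if B's updates were scattered uniformly at random.
chance_ab = float(mask_b.mean())

print(f"A in B: {overlap_ab:.2f}, B in A: {overlap_ba:.2f}, chance: {chance_ab:.3f}")
```

The overlap is asymmetric by construction, so it is worth reporting both directions alongside the chance level.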

🧪 Training the Subnetwork Reproduces Full Model

1️⃣ When trained in isolation, the sparse subnetwork recovers almost the exact same weights as the full model

2️⃣ It also achieves comparable (or better) end-task performance

3️⃣ 🧮 Even the training loss converges more smoothly
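
"Training the subnetwork in isolation" can be pictured as masking the gradient so that only the selected entries ever move. The following is a rough sketch with plain SGD on a toy loss; `masked_sgd_step`, the mask, and the loss are hypothetical stand-ins, not the actual RL training setup.

```python
import numpy as np

def masked_sgd_step(w, grad, mask, lr=0.1):
    """One SGD step that only updates entries where mask is True."""
    return w - lr * grad * mask

rng = np.random.default_rng(0)
w0 = rng.normal(size=(4, 4))

# Hypothetical subnetwork: only the first row is trainable.
mask = np.zeros_like(w0, dtype=bool)
mask[0, :] = True

w = w0.copy()
for _ in range(5):
    grad = 2 * w  # gradient of the toy loss sum(w**2)
    w = masked_sgd_step(w, grad, mask)

# Entries outside the mask are bit-for-bit identical to the starting weights.
frozen_unchanged = np.array_equal(w[~mask], w0[~mask])
# Entries inside the mask have moved.
subnet_changed = not np.allclose(w[mask], w0[mask])
print(frozen_unchanged, subnet_changed)
```

In a real run the mask would come from comparing a fully trained model with its base model, and the comparison of interest is whether the masked run lands on nearly the same weights.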

📚 Each Layer Is Equally Sparse (or Dense)

📏 No specific layer or sublayer gets special treatment — all layers are updated equally sparsely.

🎯 Despite the sparsity, the updates are still full-rank
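
Sparse and full-rank can sound contradictory, so here is a small sketch of how both can be measured per layer. The layer names and toy matrices are made up for illustration; the point is only that an update touching a handful of entries can still have maximal rank.

```python
import numpy as np

def layer_stats(base, tuned, tol=0.0):
    """Per-layer (sparsity, rank, max possible rank) of the update tuned - base."""
    stats = {}
    for name, w0 in base.items():
        delta = tuned[name] - w0
        sparsity = float(np.mean(np.abs(delta) <= tol))  # share of untouched entries
        rank = int(np.linalg.matrix_rank(delta))
        stats[name] = (sparsity, rank, min(delta.shape))
    return stats

# Toy checkpoints with hypothetical layer names: only the diagonal is updated.
base = {"attn.q_proj": np.zeros((4, 4)), "mlp.up_proj": np.zeros((4, 4))}
tuned = {
    "attn.q_proj": np.diag([0.1, 0.2, 0.3, 0.4]),
    "mlp.up_proj": np.diag([0.5, 0.6, 0.7, 0.8]),
}

stats = layer_stats(base, tuned)
for name, (sparsity, rank, max_rank) in stats.items():
    print(f"{name}: sparsity={sparsity:.2f}, rank={rank}/{max_rank}")
```

Here 12 of 16 entries per layer are untouched, yet each update matrix has rank 4 out of 4, which is the opposite of a dense but low-rank update.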

📉 Even Gradients Are Sparse in RL 📉

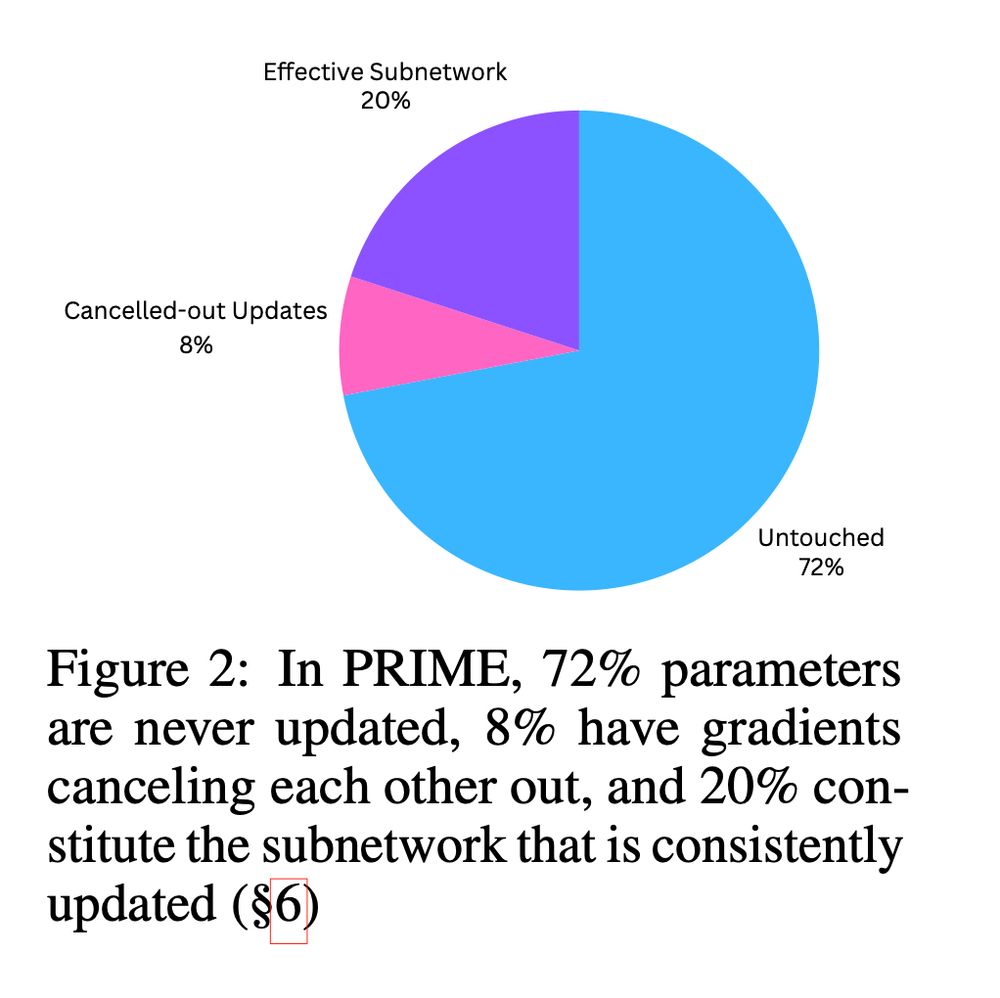

🧠 In PRIME, 72% of parameters never receive any gradient — ever!

↔️ Some do, but their gradients cancel out over time.

🎯 It’s not just the updates that are sparse; even the gradients are
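
The two cases above (no gradient at all vs. gradients that cancel) can be told apart from a log of per-step gradients. This is a toy sketch with made-up numbers; it assumes plain SGD, where the net update is proportional to the sum of gradients, which would not hold exactly for adaptive optimizers such as Adam.

```python
import numpy as np

# Hypothetical per-step gradients: 4 training steps (rows) x 5 parameters (columns).
grads = np.array([
    [0.0,  0.3, 0.0, 0.1, 0.0],
    [0.0, -0.3, 0.0, 0.2, 0.0],
    [0.0,  0.5, 0.0, 0.1, 0.0],
    [0.0, -0.5, 0.0, 0.3, 0.0],
])

# Parameters that never received a nonzero gradient at any step.
never_touched = np.all(grads == 0.0, axis=0)
# Parameters that did receive gradients, but whose gradients sum to ~zero.
net = grads.sum(axis=0)
cancelled = ~never_touched & np.isclose(net, 0.0)
# Parameters that end up with a net update.
updated = ~never_touched & ~cancelled

print(f"never touched: {never_touched.mean():.0%}")
print(f"cancelled out: {cancelled.mean():.0%}")
print(f"net update:    {updated.mean():.0%}")
```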

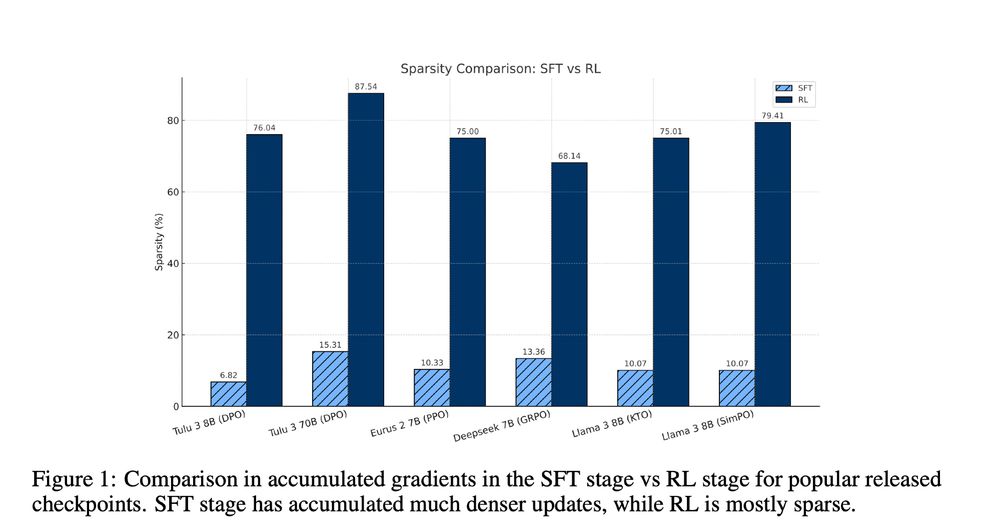

💡 SFT Updates Are Dense 💡

Unlike RL, Supervised Fine-Tuning (SFT) updates are much denser 🧠

📊 Sparsity is low: at most 15.31% of parameters remain untouched.

From DeepSeek V3 Base to DeepSeek R1 Zero, a whopping 86% of parameters were NOT updated during RL training 😮😮

And this isn’t a one-off. The pattern holds across RL algorithms and models.

🧵 A Deep Dive
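
For readers who want to see what "X% of parameters were NOT updated" means operationally, here is a minimal sketch that compares two checkpoints entry by entry. The toy dictionaries stand in for real model state dicts, and the tolerance `tol` is an assumption (a real comparison may need a small nonzero tolerance to absorb numerical noise).

```python
import numpy as np

def update_sparsity(base, tuned, tol=0.0):
    """Fraction of parameters whose value did not change (within tol)."""
    unchanged = 0
    total = 0
    for name, w0 in base.items():
        delta = np.abs(tuned[name] - w0)
        unchanged += int(np.sum(delta <= tol))
        total += w0.size
    return unchanged / total

# Toy "checkpoints": dicts mapping (hypothetical) parameter names to arrays.
base = {"layer0.weight": np.zeros((2, 3)), "layer1.weight": np.ones((2, 2))}
tuned = {name: w.copy() for name, w in base.items()}
tuned["layer0.weight"][0, 0] += 0.5   # only two of the ten entries change
tuned["layer1.weight"][1, 1] -= 0.25

sparsity = update_sparsity(base, tuned)
print(f"{sparsity:.0%} of parameters unchanged")
```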

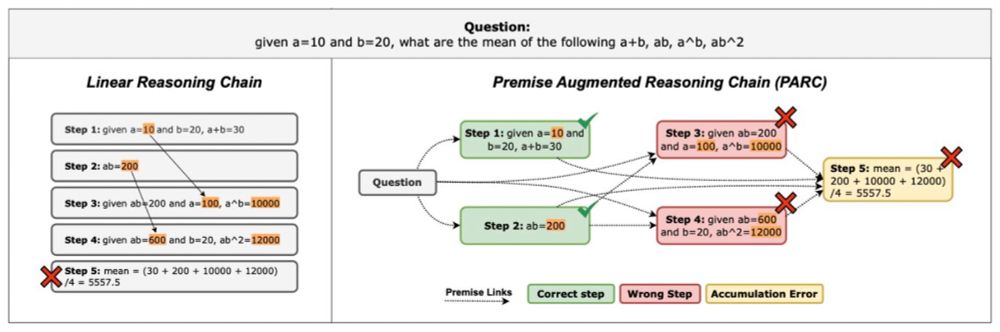

PARC improves error detection accuracy by 6-16%, enabling more reliable step-level verification in mathematical reasoning chains.

🧵[5/n]

LLMs can reliably identify these critical premises - the specific prior statements that directly support each reasoning step. This creates a transparent structure showing exactly which information is necessary for each conclusion.

🧵[4/n]
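
As a rough illustration of the structure described above, a reasoning chain can be stored as steps that each point back to the earlier statements they rely on. Everything in this sketch (the `Step` class, the helper names, the toy chain) is hypothetical and only shows the data structure, not how premises are identified or how steps are verified in PARC.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    text: str
    premises: list[int] = field(default_factory=list)  # indices of earlier steps

def verification_context(chain, i):
    """Return only the statements that step i directly depends on."""
    return [chain[j].text for j in chain[i].premises]

def has_valid_links(chain):
    """Every premise must point to a strictly earlier step."""
    return all(0 <= j < i for i, step in enumerate(chain) for j in step.premises)

chain = [
    Step("Tom has 3 boxes."),
    Step("Each box holds 4 pens."),
    Step("Tom bought a red hat."),  # irrelevant to the final step
    Step("Tom has 3 * 4 = 12 pens.", premises=[0, 1]),
]

# A verifier checking the last step would only need its two premises.
context = verification_context(chain, 3)
print(context)
```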

By revealing the dependencies within chains, we significantly improve how LLM reasoning can be verified.

🧵[1/n]

In our survey on cultural bias in LLMs, we reviewed ~90 papers. Interestingly, none of these papers define "culture" explicitly. They use "proxies". [1/7]

[Appeared in the EMNLP main conference]
