http://raryskin.github.io

PI of Language, Interaction, & Cognition (LInC) lab: http://linclab0.github.io

🗓️ UC President’s Postdoctoral Fellowship Program applications are due Nov. 1 (ppfp.ucop.edu/info/)

Open to anyone interested in a postdoc & academic career at a UC campus.

I'm happy to sponsor an applicant if there’s a good fit. Please reach out!

www.wimpouw.com/files/Bogels...

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

Here is a summary. (1/n)

We show that word processing and meaning prediction are fundamentally different during social interaction compared to using language individually!

👀 short 🧵/1

psycnet.apa.org/fulltext/202...

#OpenAccess

We built a library to easily compare design choices & model features across datasets!

We hope it will be useful to the community & plan to keep expanding it!

1/

To appear in the DBM NeurIPS Workshop

LITcoder: A General-Purpose Library for Building and Comparing Encoding Models

📄 arxiv: arxiv.org/abs/2509.091...

🔗 project: litcoder-brain.github.io

www.sciencedirect.com/science/arti...

In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech.

Poster P6, tomorrow (Aug 19) at 1:30 pm, Foyer 2.2!

adele.scholar.princeton.edu/sites/g/file...

1/n

Word and construction probabilities explain the acceptability of certain long-distance dependency structures

Work with Curtis Chen and Ted Gibson

Link to paper: tedlab.mit.edu/tedlab_websi...

In memory of Curtis Chen.

Paper 🔗 in 🧵👇

One key takeaway for me: Webcam eye-tracking w/ jsPsych is awesome for 4-quadrant visual world paradigm studies -- less so for displays w/ smaller ROIs.

onlinelibrary.wiley.com/doi/epdf/10....

We show that voxel responses during comprehension are organized along 2 main axes: processing difficulty & meaning abstractness. This reveals an interpretable, topographic representational basis for language processing that is shared across individuals.

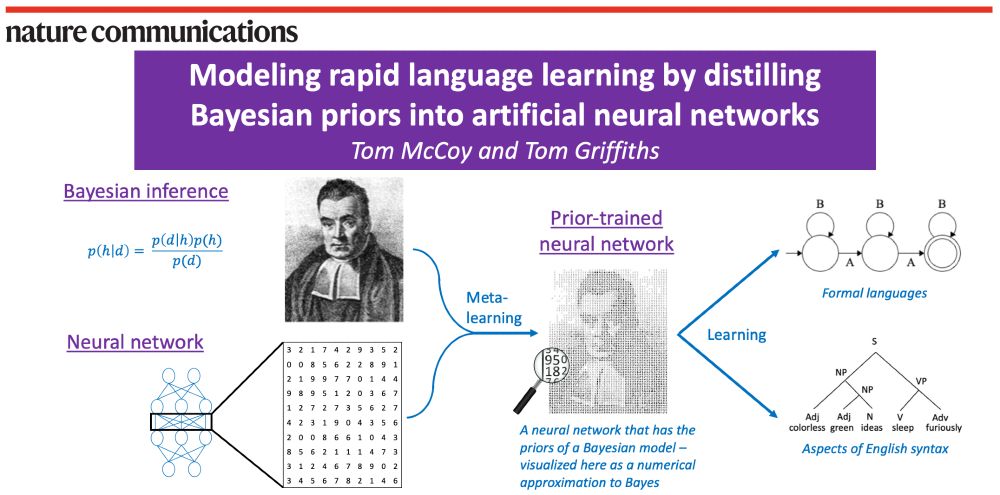

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n

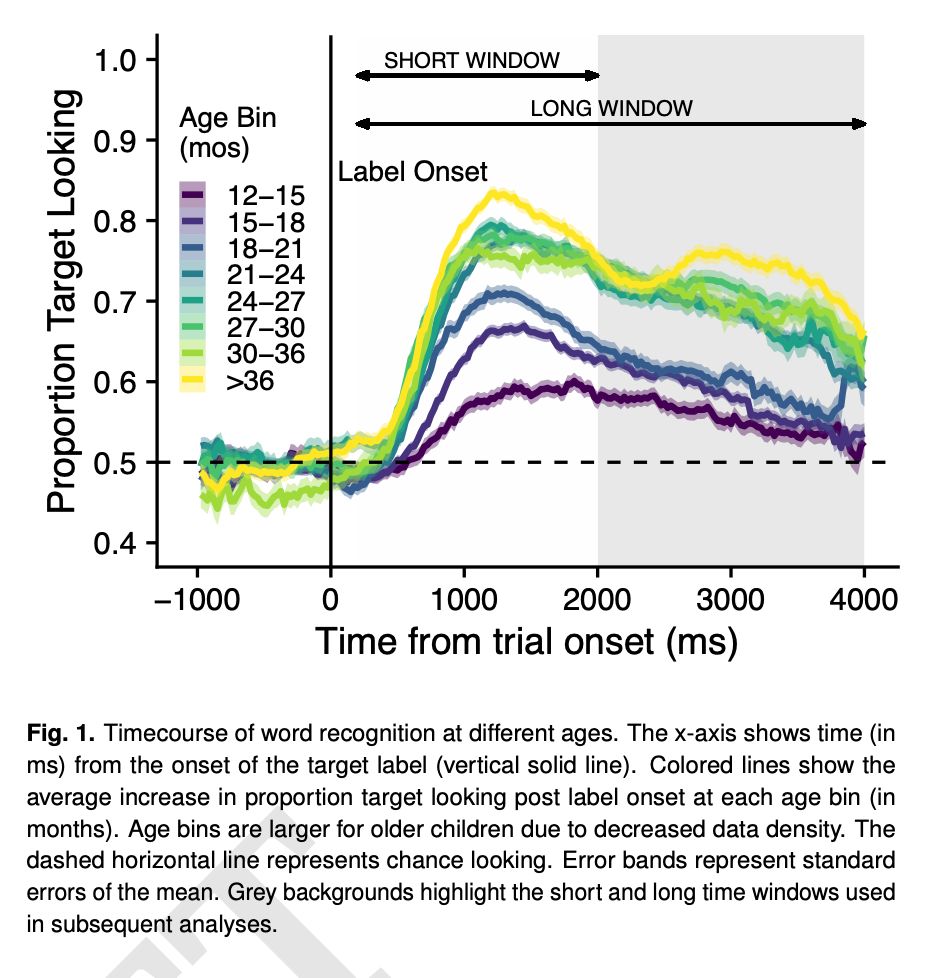

recognition support language learning across early childhood": osf.io/preprints/ps...