Previously RL intern @ SonyAI, RLHF intern @ Google Research, and RL intern @ Amazon Science

New blog post: Getting SAC to Work on a Massive Parallel Simulator (part I)

araffin.github.io/post/sac-mas...

I’m delighted to tell you about our new paper, Soft Condorcet Optimization (SCO) for Ranking of General Agents, to be presented at AAMAS 2025! 🧵 1/N

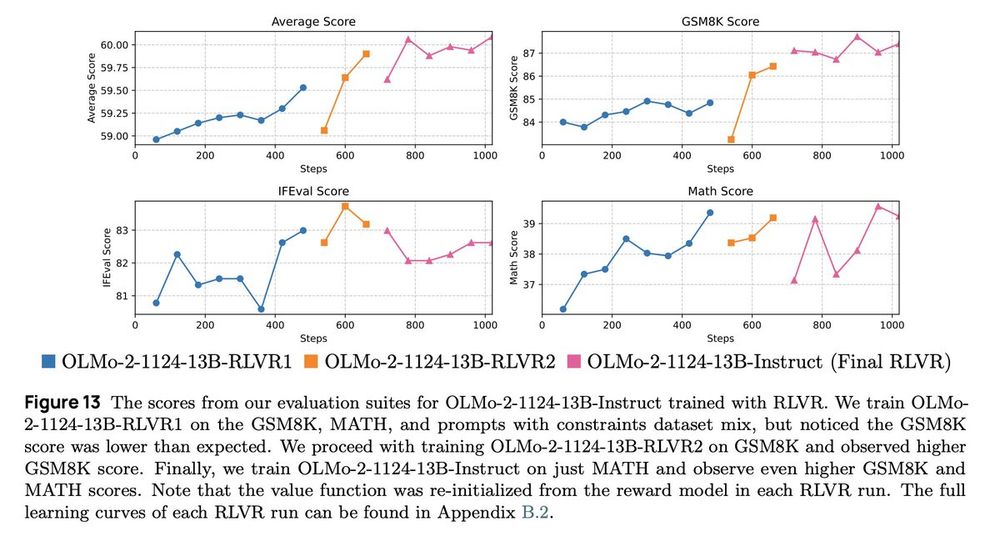

This time, we applied RLVR iteratively! Our initial RLVR checkpoint on the RLVR dataset mix showed a low GSM8K score, so we ran another round of RLVR on GSM8K only and another on MATH only 😆.

And it works! A thread 🧵 1/N

I'm happy to announce the release of Syllabus, a library of portable curriculum learning methods that work with any RL code!

github.com/RyanNavillus...

This week I will tell you about several papers on the theme of social choice theory (and agent/model evals), starting with an old paper of ours that I am still excited about:

"Evaluating Agents using Social Choice Theory"

🧵 1/N

I'll be sharing some cool curriculum learning work in a few days, stay tuned!

go.bsky.app/MdVxrtD
