"Everything around me was somebody's lifework"

👋 https://rory.bio

🔨 https://flywhl.dev

🔧 https://compmotifs.com/

Speaking of tools, I also run a little org trying to create new incentives for building tools for science: flywhl.dev.

talk.amacrin.com

Scientific discourse here and elsewhere is a bit fragmented, so I made a space for centralised, casual discussion. You can log in with Bsky.

Still in testing mode. I'll move it to a new domain once I find a good name.

This enriches their LLM prompting significantly.

If you want to successfully use AI for coding, learn some software architecture!

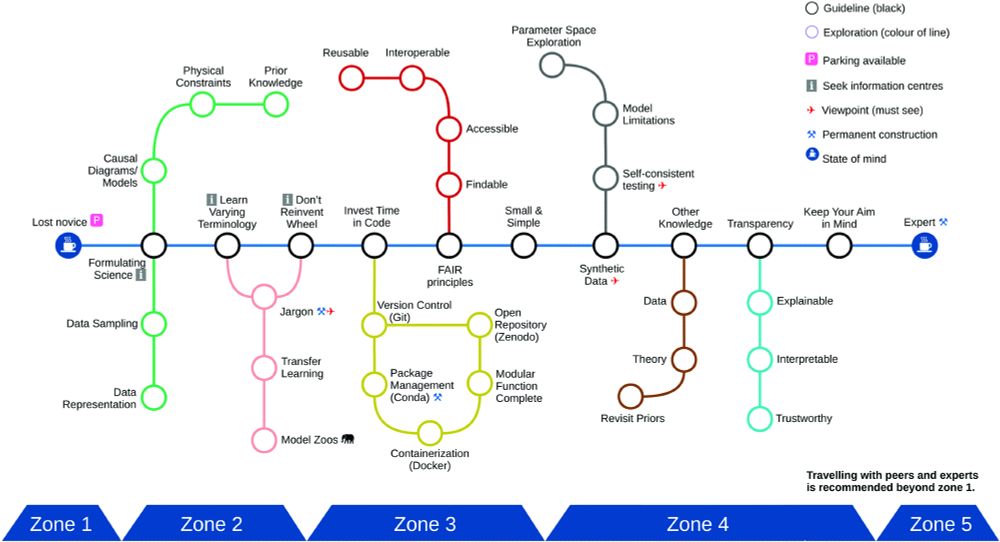

Huge thanks to @marcusghosh.bsky.social, @neuralreckoning.bsky.social, @tfiers.bsky.social, @krhab.bsky.social and others for putting in the bulk of the effort 🙌

betterscienceproject.substack.com/p/the-counte...

Nine other research fellows from @imperial-ix.bsky.social and I use AI methods in domains ranging from plant biology (🌱) to neuroscience (🧠) and particle physics (🎇).

Together we suggest 10 simple rules @plos.org 🧵

doi.org/10.1371/jour...

arxiv.org/abs/2507.16043

Surrogate gradients are popular for training SNNs, but some worry whether they really learn complex temporal spike codes. TLDR: we tested this, and yes they can! 🧵👇

🤖🧠🧪
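For anyone new to the idea: a spiking neuron's output is a step function of its membrane potential, whose true derivative is zero almost everywhere, so plain backprop gets no learning signal. A surrogate gradient keeps the hard spike in the forward pass but swaps in a smooth pseudo-derivative in the backward pass. Below is a minimal single-neuron sketch, assuming a fast-sigmoid surrogate (one common choice, not necessarily the one used in the preprint), a threshold of 1.0, and a toy squared-error loss; all function names are hypothetical.

```python
def heaviside_spike(v: float, threshold: float = 1.0) -> float:
    """Forward pass: emit a spike (1.0) when the membrane potential reaches threshold."""
    return 1.0 if v >= threshold else 0.0


def true_derivative(v: float, threshold: float = 1.0) -> float:
    """Exact derivative of the step: zero everywhere except at threshold
    (where it is undefined), so it provides no useful learning signal."""
    return 0.0


def surrogate_derivative(v: float, threshold: float = 1.0, beta: float = 10.0) -> float:
    """Backward pass: fast-sigmoid surrogate 1 / (beta * |v - threshold| + 1)**2.
    It peaks at 1.0 at threshold and decays smoothly on either side."""
    return 1.0 / (beta * abs(v - threshold) + 1.0) ** 2


def train_weight(x: float, target: float, w: float = 0.5, lr: float = 0.5,
                 steps: int = 50, use_surrogate: bool = True) -> float:
    """Gradient descent on w for the loss (spike - target)**2, with v = w * x."""
    for _ in range(steps):
        v = w * x
        s = heaviside_spike(v)
        ds_dv = surrogate_derivative(v) if use_surrogate else true_derivative(v)
        grad = 2.0 * (s - target) * ds_dv * x  # chain rule through the chosen derivative
        w -= lr * grad
    return w


w_surrogate = train_weight(x=1.0, target=1.0)                     # learns to spike
w_exact = train_weight(x=1.0, target=1.0, use_surrogate=False)    # never moves
```

With the exact derivative the weight stays at its initial 0.5, while the surrogate pushes it past threshold within a handful of steps, after which the loss is zero and learning stops.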

www.software.ac.uk/news/ssi-fel...

arxiv.org/abs/2001.10605

Food, cool people, great speakers (on both the science and toolmaking sides).

#neuroskyence #openscience #desci #openchem #bioinformatics #opensource #foss

When? 7-8 June.

Where? London, UK.

Who? Builders and researchers from academia and industry.

What? Develop innovative computational tools to advance the natural sciences.

Join us: lu.ma/apsqlxlj?utm....

Picture a mouse navigating an environment with light and dark areas.

🧵1/10

Turns out: yes!

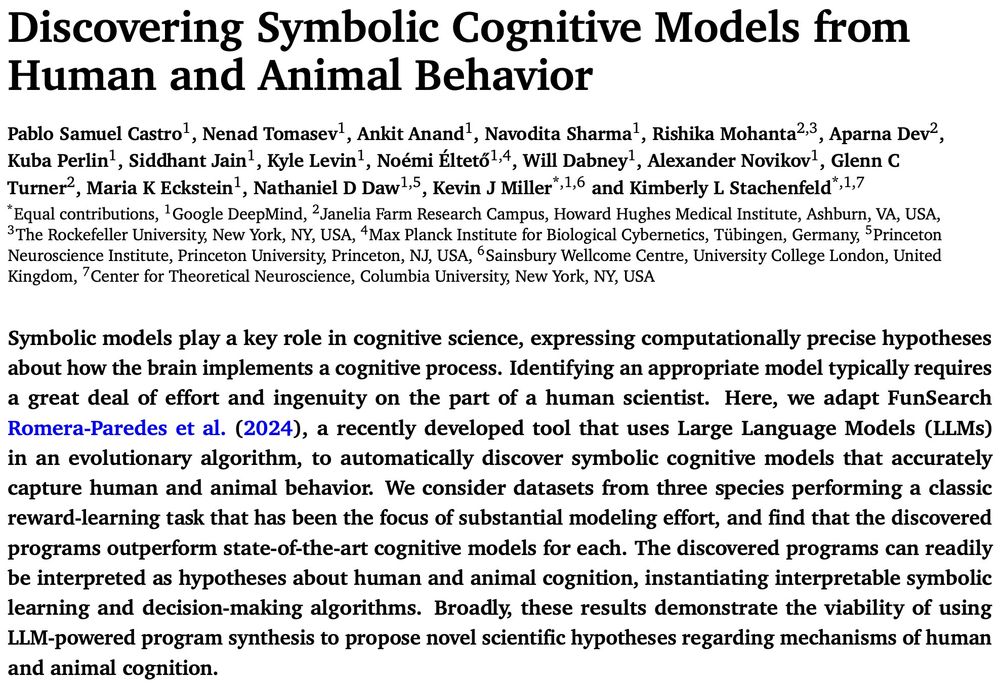

Thrilled to share our latest preprint where we used FunSearch to automatically discover symbolic cognitive models of behavior.

1/12

#opensource #neuroskyence #openscience #machinelearning #compchem #chemsky

Together with Pebblebed, we’re hosting a 2-day hackathon on 26-27 March for builders and scientists across disciplines to build new methods and tools for the computational and natural sciences. Join us: lu.ma/t5yik06g.

Reposts will be much appreciated!

By @neuralreckoning.bsky.social

#neuroskyence

www.thetransmitter.org/policy/scien...

The `@commit` decorator auto-commits your code when your experiment runs, with metadata in the commit message.

Then you can find previous results by querying for commits with, e.g., `metrics.accuracy > 0.9`.

github.com/flywhl/logis
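To make the idea concrete, here is a toy sketch of the pattern: a decorator that records each run's returned metrics as JSON in a "commit message", plus a query over those messages by dotted path. This is not logis's actual API or storage format; `COMMIT_LOG`, `commit`, and `query` here are hypothetical, and an in-memory list stands in for the git history a real tool would write to.

```python
import functools
import json
from typing import Any, Callable

# Hypothetical in-memory stand-in for git history; a real tool would create git commits.
COMMIT_LOG: list[str] = []


def commit(fn: Callable[..., dict]) -> Callable[..., dict]:
    """Hypothetical decorator: run the experiment, then record a 'commit'
    whose message body embeds the returned metrics as JSON."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> dict:
        metrics = fn(*args, **kwargs)
        message = f"experiment: {fn.__name__}\n\n" + json.dumps({"metrics": metrics})
        COMMIT_LOG.append(message)
        return metrics
    return wrapper


def query(log: list[str], path: str, predicate: Callable[[Any], bool]) -> list[str]:
    """Return messages whose JSON body has a value at the dotted `path`
    (e.g. 'metrics.accuracy') that satisfies `predicate`."""
    hits = []
    for message in log:
        _, _, body = message.partition("\n\n")
        value: Any = json.loads(body)
        for key in path.split("."):
            if not isinstance(value, dict) or key not in value:
                break  # path missing in this commit: skip it
            value = value[key]
        else:
            if predicate(value):
                hits.append(message)
    return hits


@commit
def train(lr: float) -> dict:
    """Toy experiment returning made-up metrics for illustration."""
    return {"accuracy": 0.95 if lr < 0.01 else 0.80}


train(0.001)
train(0.1)
good_runs = query(COMMIT_LOG, "metrics.accuracy", lambda v: v > 0.9)
```

Keeping the metadata as structured JSON in the message body is what makes the query step a simple lookup rather than free-text searching.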

I wonder what this means for the concept of "areas"...

#neuroscience

www.thetransmitter.org/neural-codin...

(The name comes from cyanotype photography, i.e. the "blueprint")

#opensource #python #openscience

github.com/flywhl/cyantic

Modules are locally dense, with increasingly sparse connections to more distal modules.

#neuroai #neuroskyence

gist.github.com/rorybyrne/dd...
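A quick way to picture this kind of wiring is a block-structured connectivity matrix. The sketch below assumes modules sit on a line, connection probability is `p_local` within a module, and it decays geometrically as `p_local * decay**d` for modules `d` apart. Those assumptions and the function names are illustrative only; the linked gist may define distance and sparsity differently.

```python
import random


def modular_connectivity(n_modules: int, module_size: int, p_local: float,
                         decay: float, seed: int = 0) -> list[list[int]]:
    """Binary adjacency matrix: dense within modules, increasingly sparse
    between modules that are farther apart (modules assumed to lie on a line)."""
    rng = random.Random(seed)
    n = n_modules * module_size
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # no self-connections
            d = abs(i // module_size - j // module_size)  # distance between modules
            if rng.random() < p_local * decay ** d:
                adj[i][j] = 1
    return adj


def block_density(adj: list[list[int]], module_size: int, a: int, b: int) -> float:
    """Fraction of possible connections present from module a to module b."""
    rows = range(a * module_size, (a + 1) * module_size)
    cols = range(b * module_size, (b + 1) * module_size)
    pairs = [(i, j) for i in rows for j in cols if i != j]
    return sum(adj[i][j] for i, j in pairs) / len(pairs)


adj = modular_connectivity(n_modules=3, module_size=20, p_local=0.8, decay=0.3, seed=1)
densities = [block_density(adj, 20, 0, b) for b in range(3)]  # expected ~0.8, ~0.24, ~0.07
```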

www.youtube.com/@MAINConfere...

#neuroai #neuroskyence

github.com/sqlc-dev/sqlc

www.youtube.com/watch?v=L_Al...

Learn more about publishing your tools or resources with us: https://buff.ly/4f2O0Ht