I will return to UT Austin as an Assistant Professor of Linguistics this fall, and join its vibrant community of Computational Linguists, NLPers, and Cognitive Scientists!🤘

Excited to develop ideas about linguistic and conceptual generalization (recruitment details soon!)

🎯 We demonstrate that ranking-based discriminator training can significantly reduce this gap, and improvements on one task often generalize to others!

🧵👇

✨We introduce QUDsim, to quantify discourse similarities beyond lexical, syntactic, and content overlap.

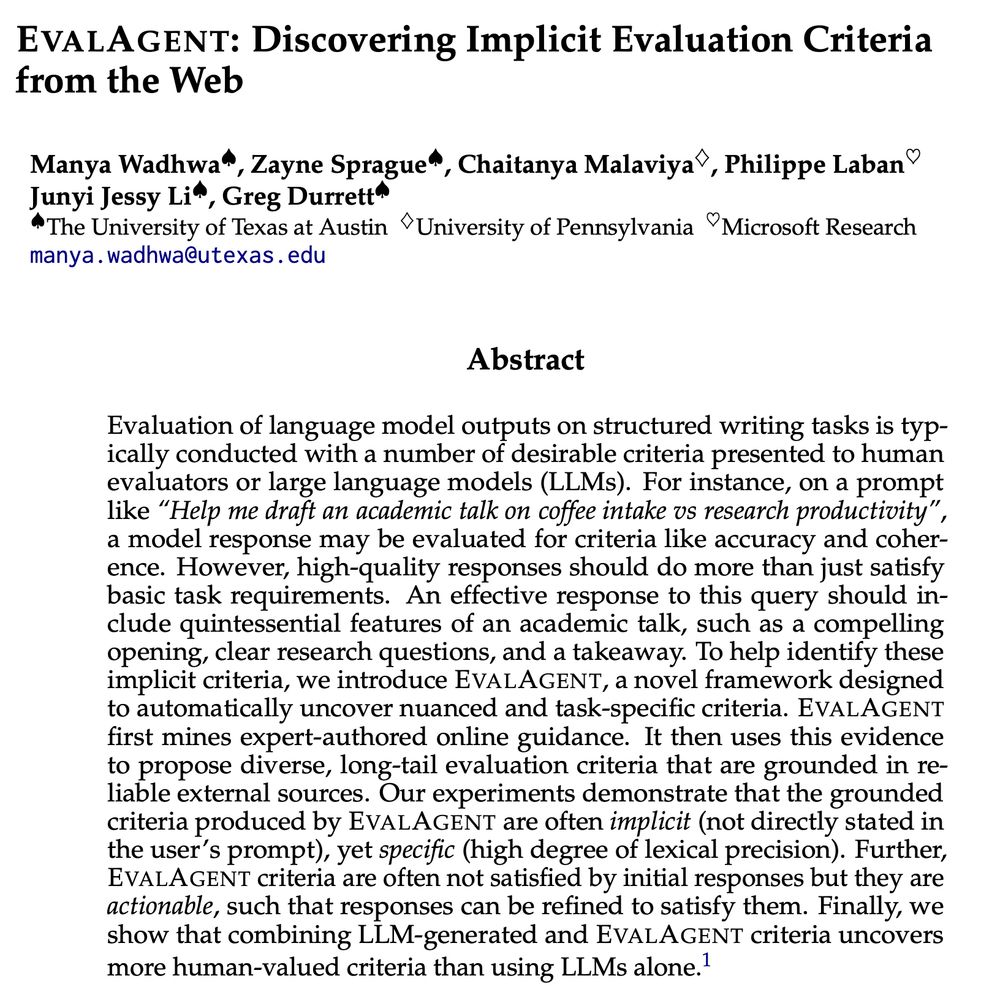

We introduce EvalAgent, a framework that identifies nuanced and diverse criteria 📋✍️.

EvalAgent identifies 👩🏫🎓 expert advice on the web that implicitly addresses the user’s prompt 🧵👇

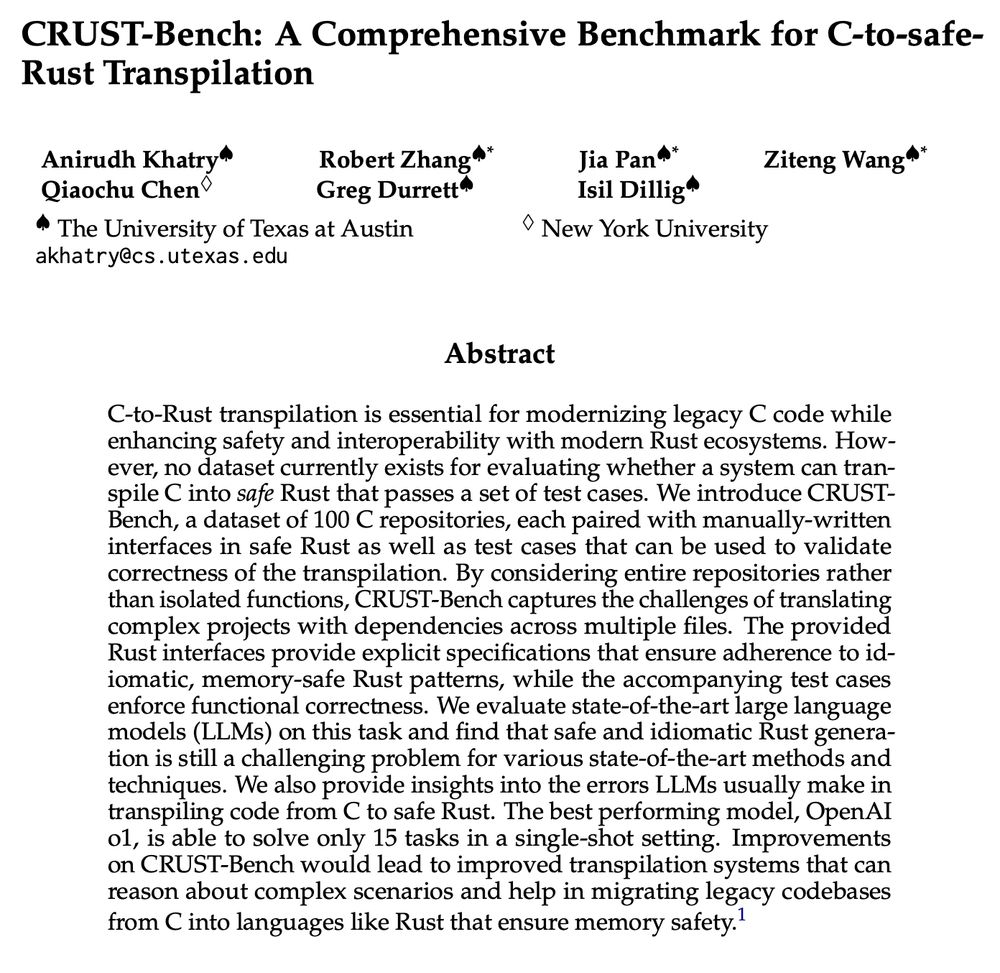

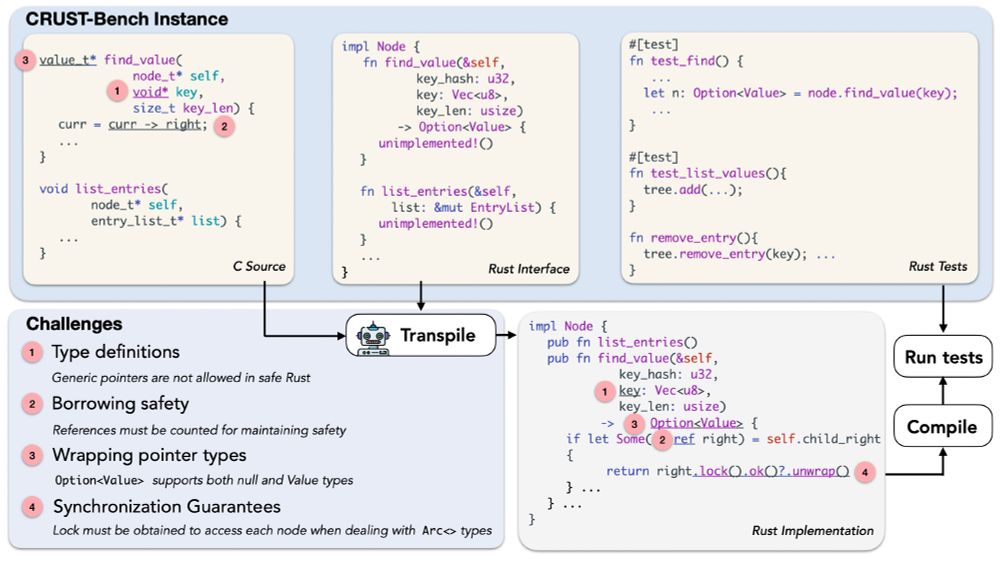

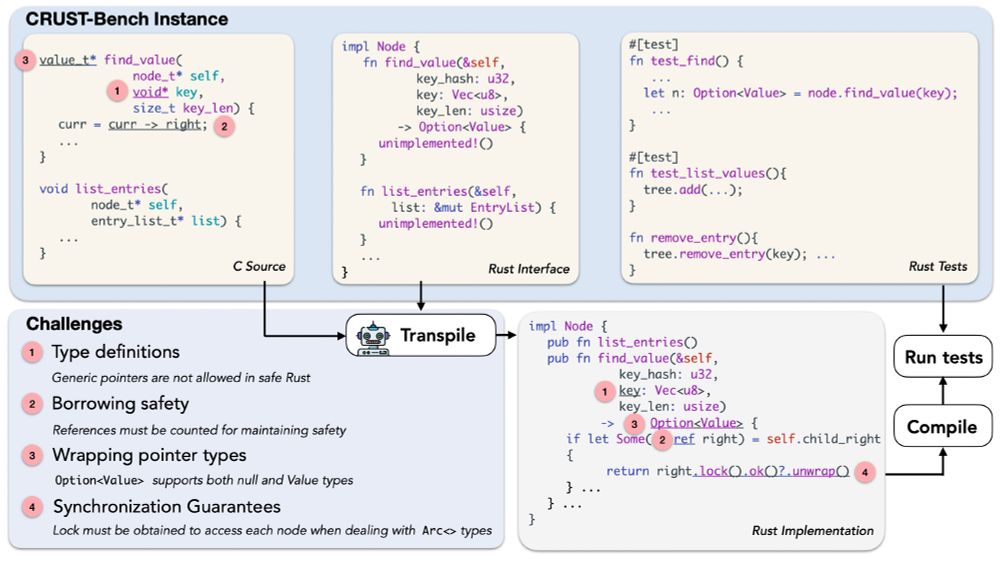

A dataset of 100 real-world C repositories across various domains, each paired with:

🦀 Handwritten safe Rust interfaces.

🧪 Rust test cases to validate correctness.

🧵[1/6]

Congrats to the amazing @yatingwu.bsky.social, Ritika Mangla, Alex Dimakis, @gregdnlp.bsky.social

👉[Oral] Discourse+Phonology+Syntax2 10:30-12:00 @ Flagler

also w/ Ritika Mangla @gregdnlp.bsky.social Alex Dimakis
