🎯 Working on getting my first 3M.

TL;DR: Modeling how artistic style evolves over 500 years without relying on ground-truth pairs.

📍 Poster Session 2

🗓️ Tue, Oct 21 — 3:00 PM

🧾 Poster #80

🔗 compvis.github.io/Art-fm/

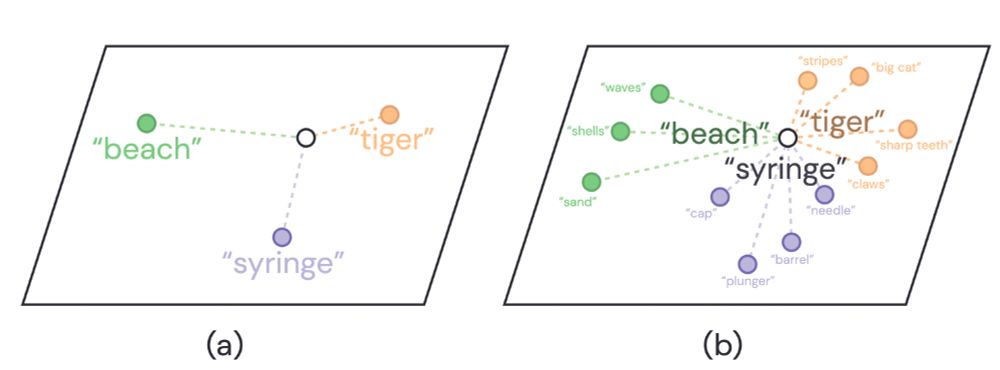

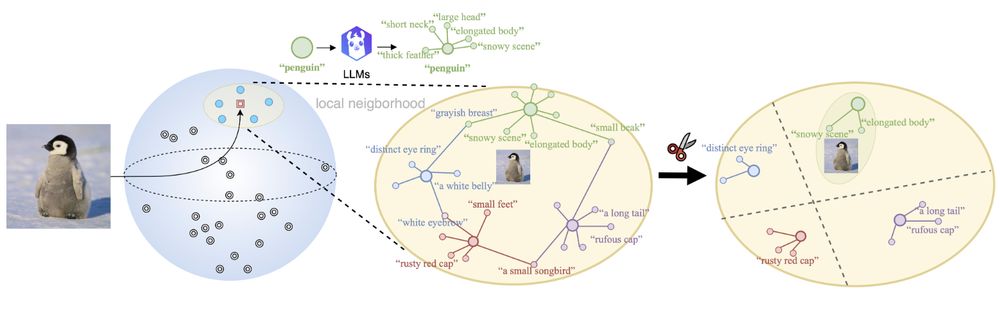

TL;DR: We learn disentangled representations implicitly by training only on merging them.

📍 Poster Session 4

🗓️ Wed, Oct 22 — 2:30 PM

🧾 Poster #3

🔗 compvis.github.io/SCFlow/

We also have another oral presentation for DepthFM on March 1, 2:30–3:45 PM.

🤨Interested? Check out our latest work at #AAAI25:

💻Code and 📝Paper at: github.com/CompVis/DisCLIP

🧵👇