pietrolesci.github.io

@pietrolesci.bsky.social who did a fantastic job!

#ACL2025

New paper + @philipwitti.bsky.social

@gregorbachmann.bsky.social :) arxiv.org/abs/2412.15210

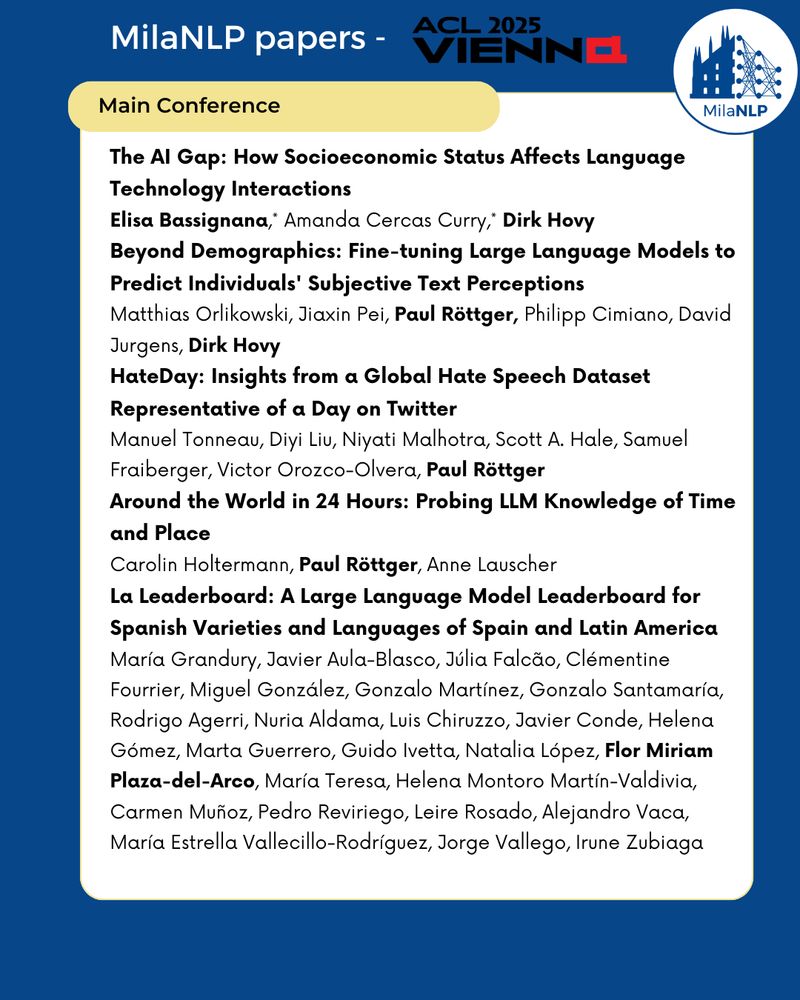

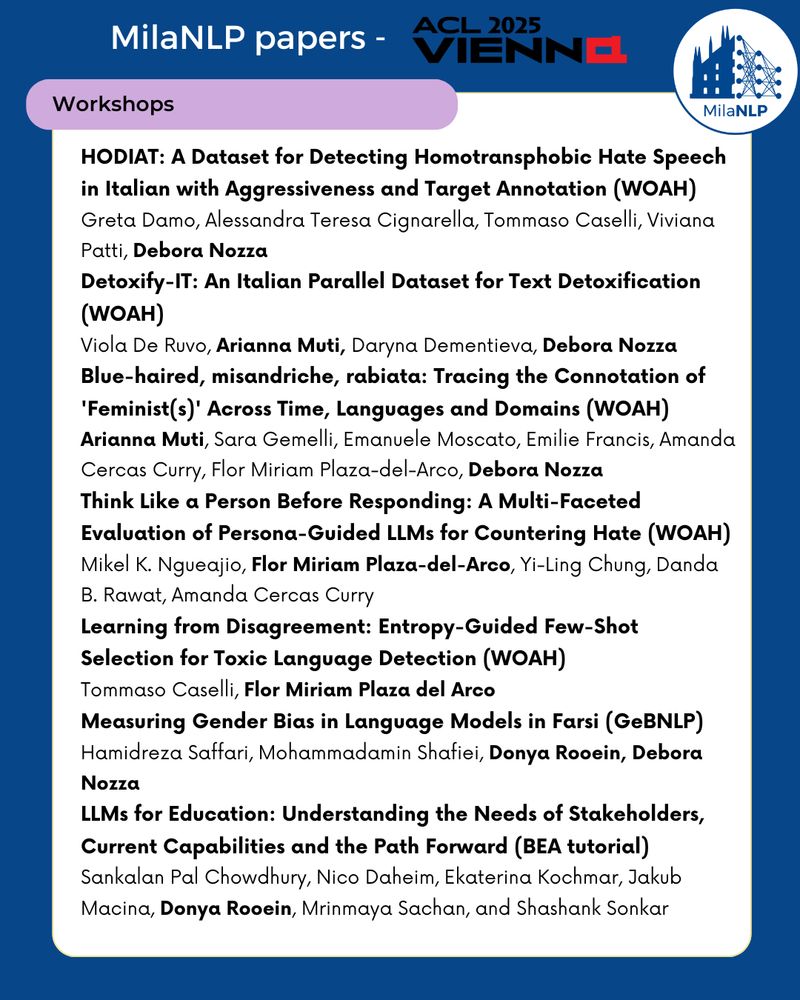

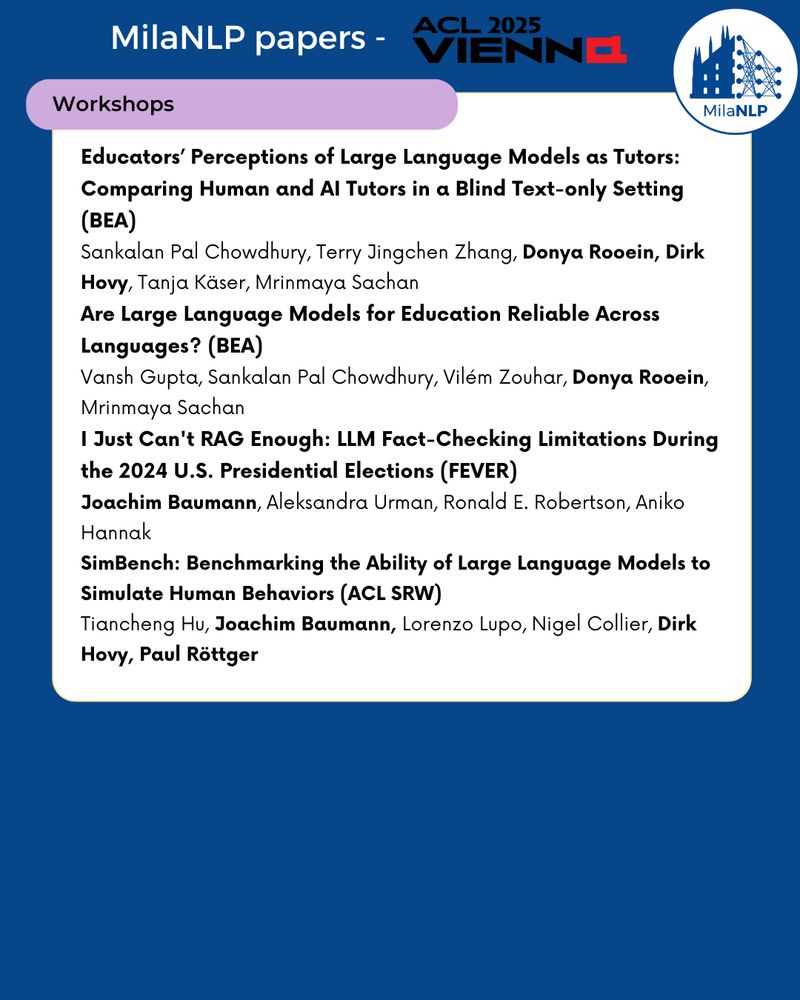

We have several papers this year and many from @milanlp.bsky.social are around, come say hi!

Here are all the works I'm involved in ⤵️

#ACL2025 #ACL2025NLP

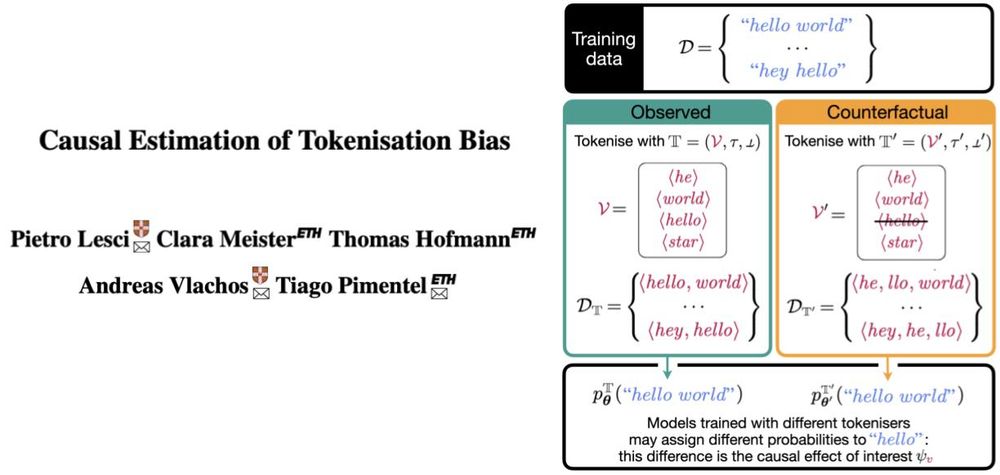

Let’s talk about it and why it matters👇

@aclmeeting.bsky.social #ACL2025 #NLProc

github.com/tpimentelms/...

🔗 Commit here: openreview.net/group?id=acl...

🗓️ Deadline: May 20, 2025 (AoE)

#ACL2025 #NLProc

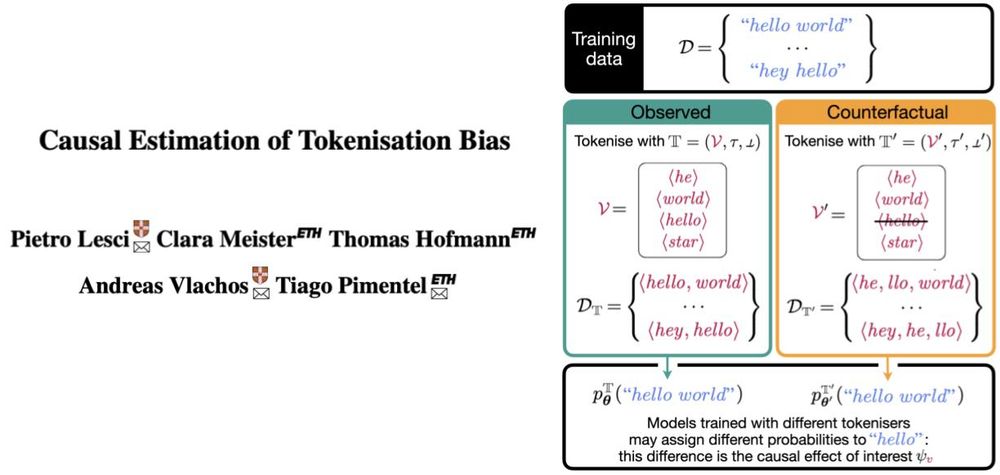

I thank my amazing co-authors Clara Meister, Thomas Hofmann, @tpimentel.bsky.social, and my great advisor and co-author @andreasvlachos.bsky.social!

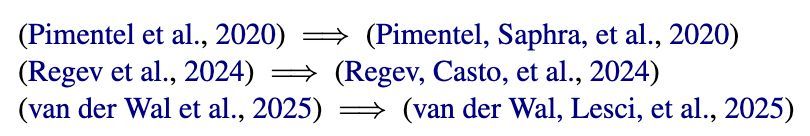

We’ll be presenting our paper on pre-training stability in language models and the PolyPythias 🧵

🔗 ArXiv: arxiv.org/abs/2503.09543

🤗 PolyPythias: huggingface.co/collections/...

@aclmeeting.bsky.social in Vienna 🎉

💡 L2M2 brings together researchers to explore memorization from multiple angles. Whether it's text-only LLMs or Vision-language models, we want to hear from you! 🌍

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

We got this idea after their cool work on improving Plug and Play with FM: arxiv.org/abs/2410.02423

Bonus: They have evaluations on downstream benchmarks!

Great work! 🚀

We propose a methodology to approach these questions by showing that we can predict the performance across datasets and losses with simple shifted power law fits.
