https://paulrottger.com/

Paper: arxiv.org/abs/2502.08395

Data: huggingface.co/datasets/Pau...

Surprisingly, despite being developed in quite different settings, all models are very similar in how they write about different political issues.

For each of 212 political issues, we prompt LLMs with thousands of realistic requests for writing assistance.

Then we classify each model response by the stance it expresses on the issue at hand.
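
As a rough illustration of the last step, here is a minimal sketch of how classified responses could be turned into a per-issue stance distribution. It is not the authors' code: the function name `stance_distribution`, the stance labels, and the toy records are all assumptions made up for this example.

```python
from collections import Counter, defaultdict


def stance_distribution(records):
    """Return, per issue, the share of responses carrying each stance label.

    `records` is an iterable of (issue, stance) pairs, one pair per
    classified model response. The label names are hypothetical.
    """
    counts = defaultdict(Counter)
    for issue, stance in records:
        counts[issue][stance] += 1
    return {
        issue: {stance: n / sum(c.values()) for stance, n in c.items()}
        for issue, c in counts.items()
    }


# Toy, made-up records for illustration only (not real results).
records = [
    ("issue A", "pro"), ("issue A", "pro"),
    ("issue A", "neutral"), ("issue A", "con"),
    ("issue B", "neutral"), ("issue B", "neutral"),
]
dist = stance_distribution(records)
```

Comparing such distributions across models is one simple way to see how similar or different their writing is on a given issue.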

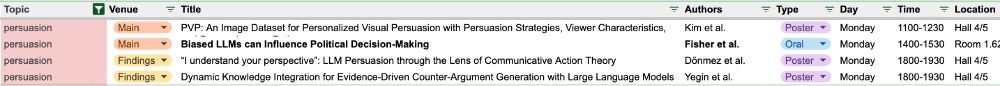

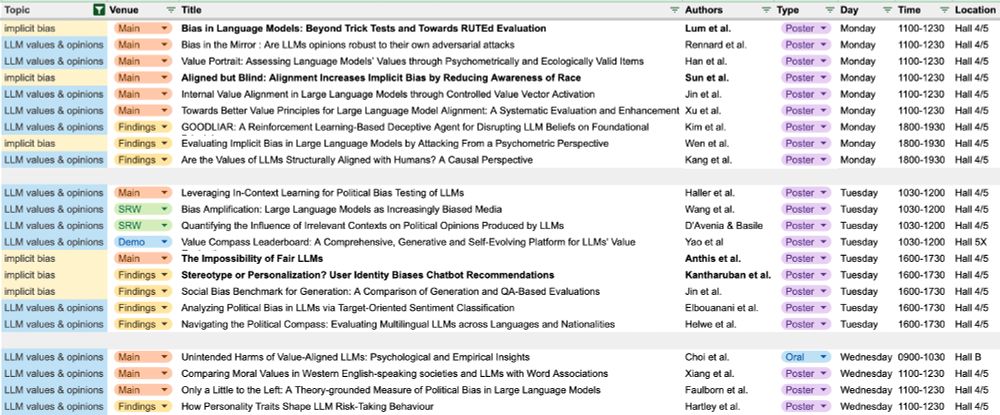

docs.google.com/spreadsheets...

aclanthology.org/2025.acl-lon...

bsky.app/profile/tian...

Language Models to Simulate Human Behaviors, SRW Oral, Monday, July 28, 14:00-15:30

bsky.app/profile/manu...

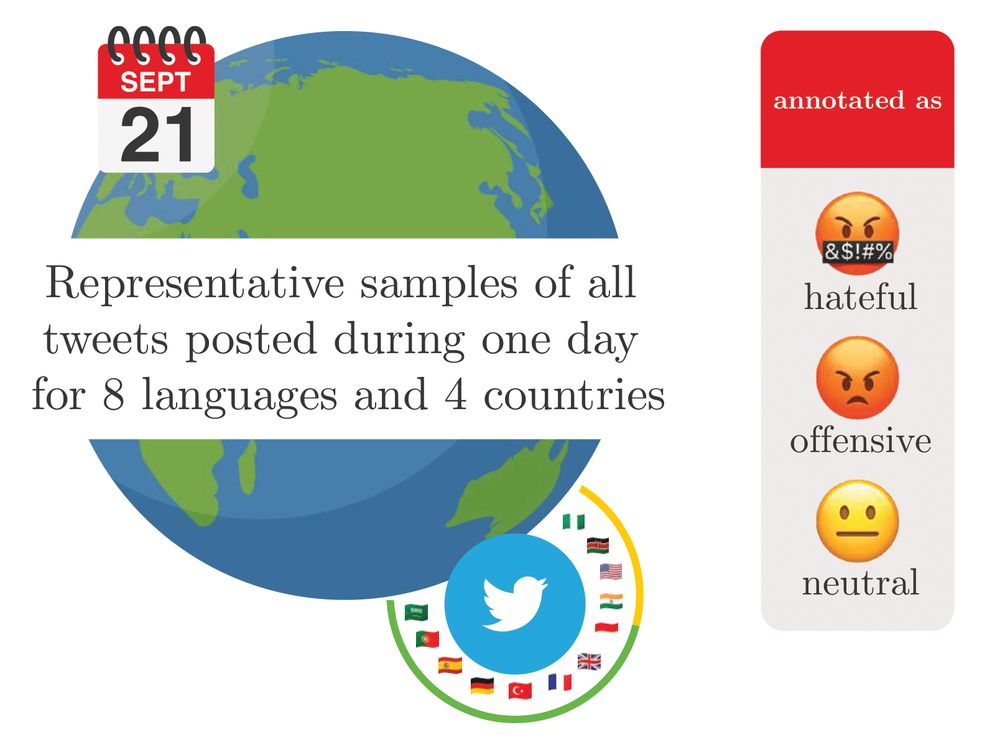

To answer this, we introduce 🤬HateDay🗓️, a global hate speech dataset representative of a day on Twitter.

The answer: not really! Detection performance is low, and traditional eval methods overestimate it.

arxiv.org/abs/2411.15462

🧵

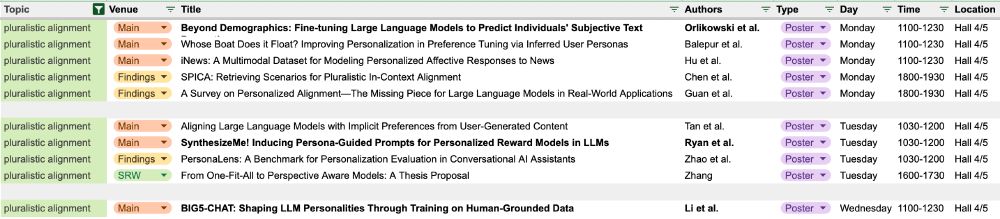

grow more important for a global diversity of users.

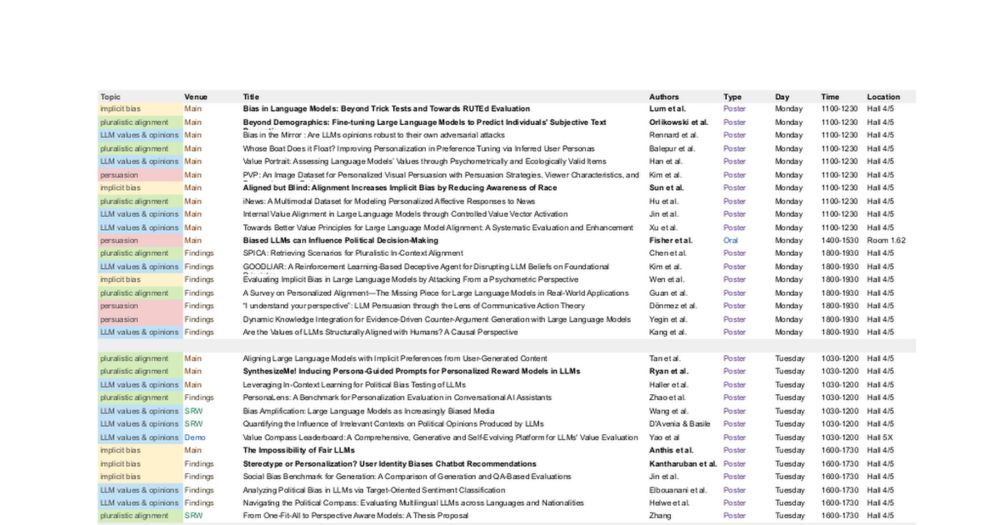

@morlikow.bsky.social is presenting our poster today at 11:00. Also hyped for @michaelryan207.bsky.social's work and @verenarieser.bsky.social's keynote!

I am particularly excited to check out work on this by @kldivergence.bsky.social @1e0sun.bsky.social @jacyanthis.bsky.social @anjaliruban.bsky.social

Paper: arxiv.org/abs/2502.08395

Data: huggingface.co/datasets/Pau...

Code: github.com/paul-rottger...

Thanks also to the @milanlp.bsky.social RAs, and Intel Labs and Allen AI for compute.
