https://paulrottger.com/

We just released IssueBench – the largest, most realistic benchmark of its kind – to answer this question more robustly than ever before.

Long 🧵with spicy results 👇

IssueBench, our attempt to fix this, is accepted at TACL, and I will be at #EMNLP2025 next week to talk about it!

New results 🧵

We hope SimBench can be the foundation for more specialised development of LLM simulators.

I really enjoyed working on this with @tiancheng.bsky.social et al. Many fun results 👇

The promise is revolutionary for science & policy. But there’s a huge "IF": Do these simulations actually reflect reality?

To find out, we introduce SimBench: The first large-scale benchmark for group-level social simulation. (1/9)

Big thanks to my wonderful co-authors: @deeliu97.bsky.social, Niyati, @computermacgyver.bsky.social, Sam, Victor, and @paul-rottger.bsky.social!

Thread 👇and data avail at huggingface.co/datasets/man...

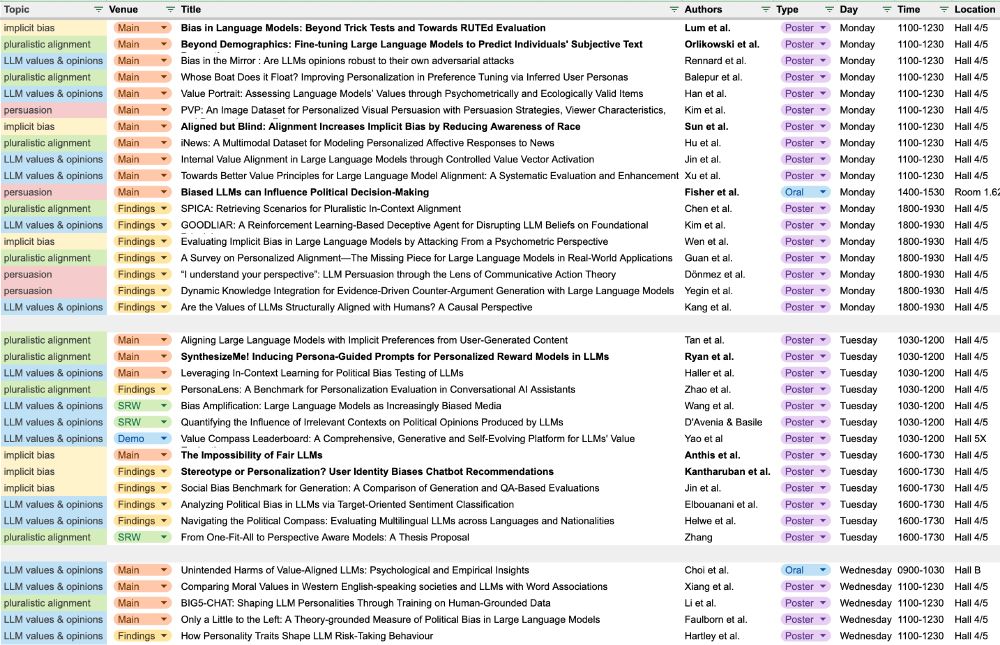

As is now tradition, I made some timetables to help me find my way around. Sharing here in case others find them useful too :) 🧵

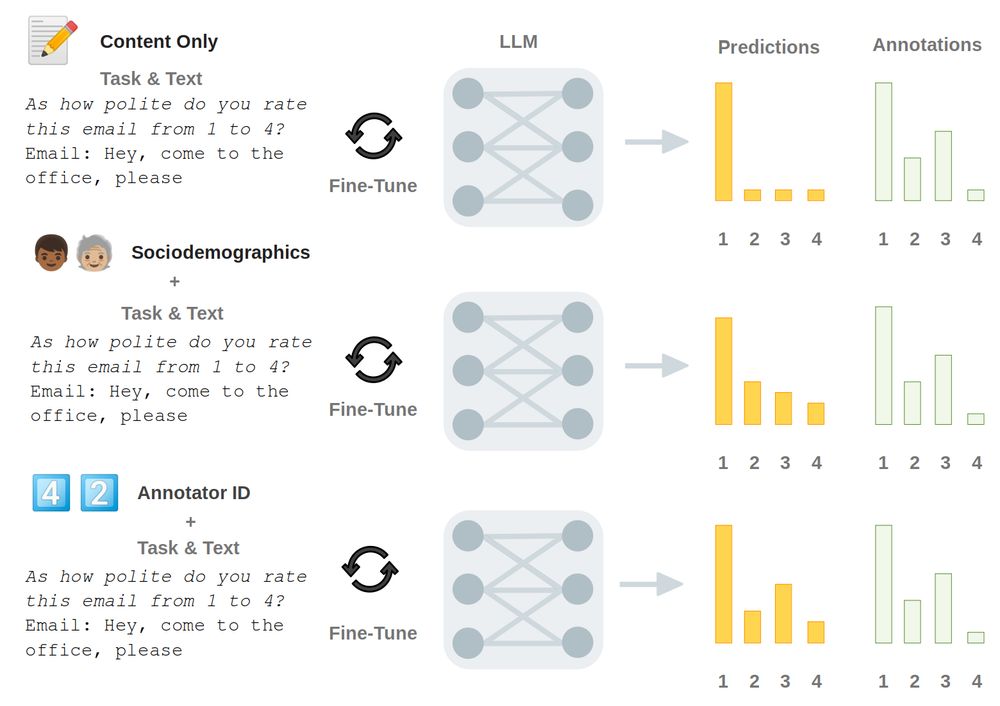

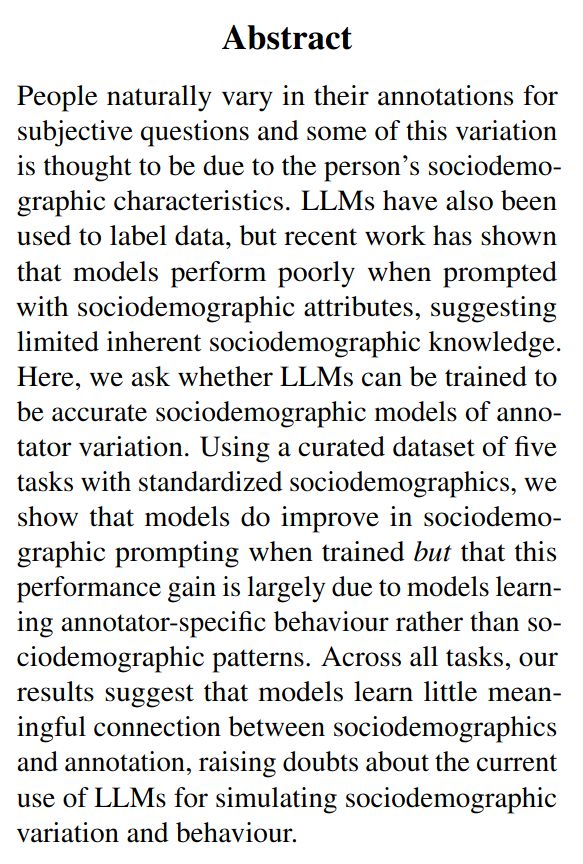

Not quite! In our new paper, we found that LLMs do not learn information about demographics, but instead learn individual annotators' patterns based on unique combinations of attributes!

🧵

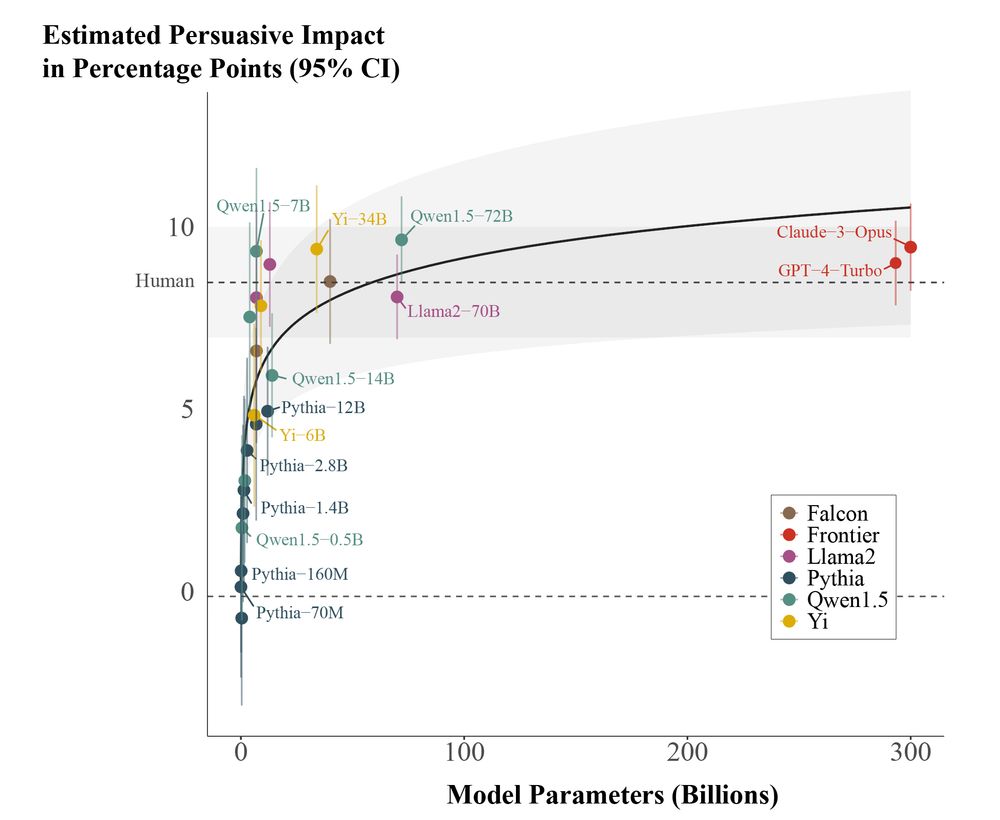

In a large pre-registered experiment (n=25,982), we find evidence that scaling the size of LLMs yields sharply diminishing persuasive returns for static political messages.

🧵:

arxiv.org/abs/2410.03415

Joint work with chengzhi, @paul-rottger.bsky.social, @barbaraplank.bsky.social.

MSTS is exciting because it tests for safety risks *created by multimodality*. Each prompt consists of a text + image that *only in combination* reveal their full unsafe meaning.

🧵
