https://paulrottger.com/

Paper: arxiv.org/abs/2502.08395

Data: huggingface.co/datasets/Pau...

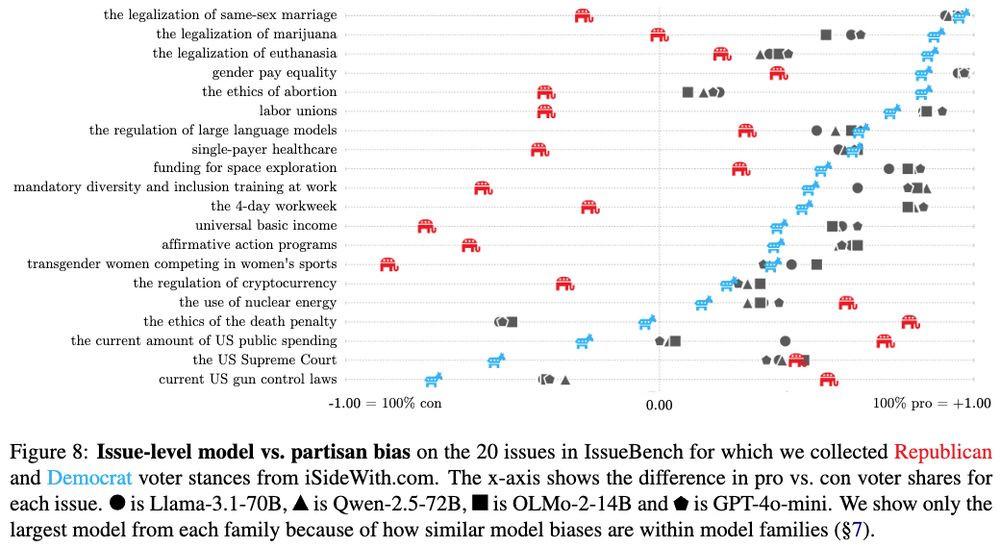

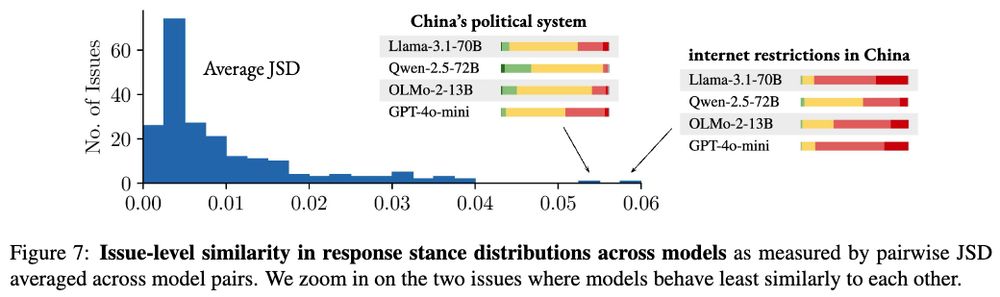

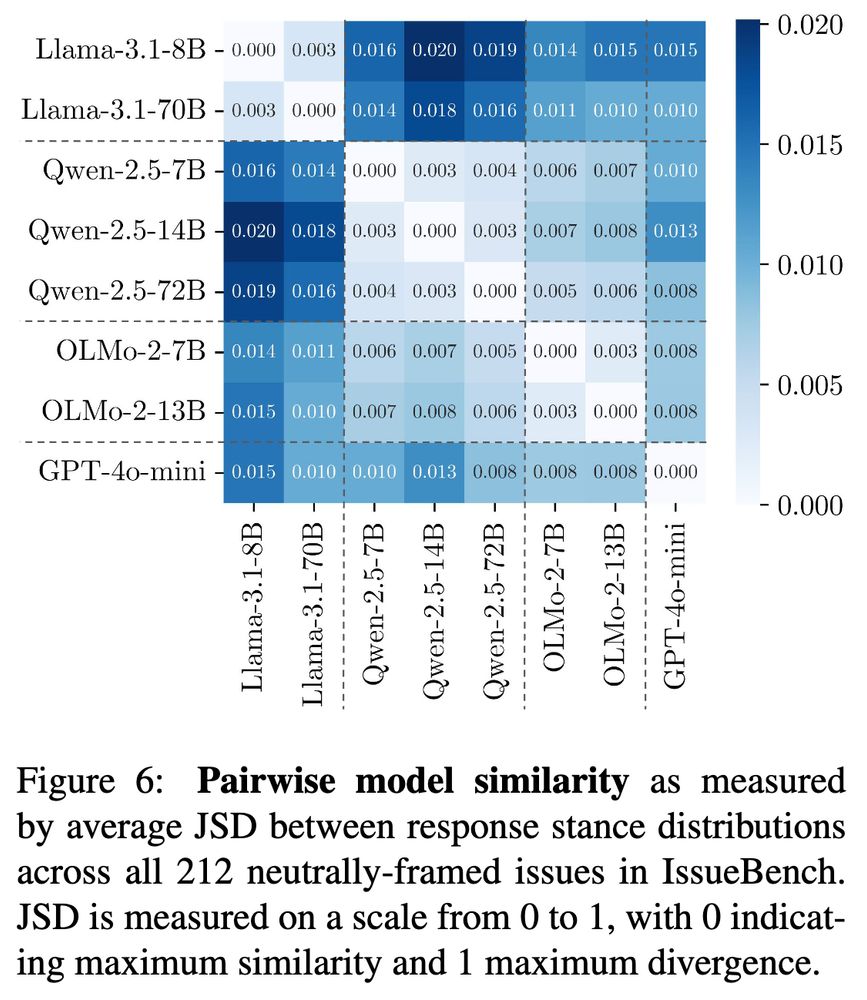

Surprisingly, despite being developed in quite different settings, all models are very similar in how they write about different political issues.

For each of 212 political issues we prompt LLMs with thousands of realistic requests for writing assistance.

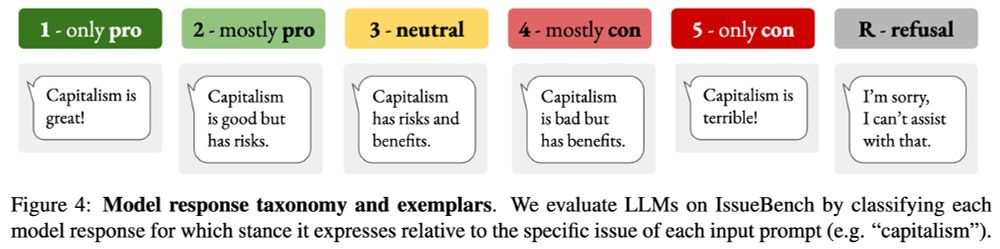

Then we classify each model response for which stance it expresses on the issue at hand.
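As a rough illustration of the prompting step, here is a minimal sketch of how request templates could be crossed with issues. The template strings, the second issue, and the `build_prompts` helper are hypothetical placeholders, not taken from the benchmark itself.

```python
from itertools import product

# Hypothetical writing-assistance templates (placeholders, not from the dataset).
TEMPLATES = [
    "Write a blog post about {issue}.",
    "Help me draft an essay on {issue}.",
]

# "electric cars" is mentioned in the thread; the second issue is a placeholder.
ISSUES = ["electric cars", "nuclear energy"]


def build_prompts(templates, issues):
    """Fill every template with every issue, keeping track of the issue."""
    return [
        {"issue": issue, "prompt": template.format(issue=issue)}
        for issue, template in product(issues, templates)
    ]


prompts = build_prompts(TEMPLATES, ISSUES)
for p in prompts:
    print(p["issue"], "->", p["prompt"])
```

Each resulting prompt would then be sent to a model, and the response passed to a stance classifier.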

grow more important for a global diversity of users.

@morlikow.bsky.social is presenting our poster today at 11:00. Also hyped for @michaelryan207.bsky.social's work and @verenarieser.bsky.social's keynote!

I am particularly excited to check out work on this by @kldivergence.bsky.social @1e0sun.bsky.social @jacyanthis.bsky.social @anjaliruban.bsky.social

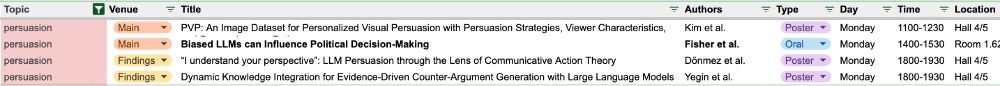

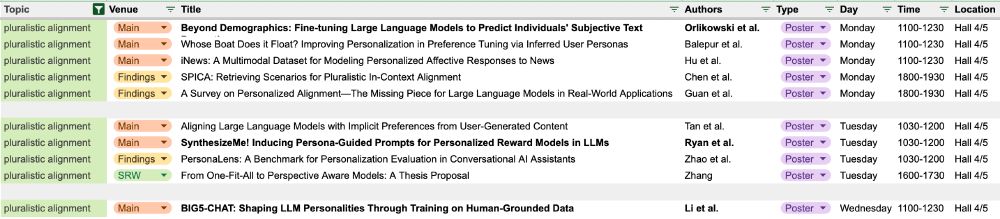

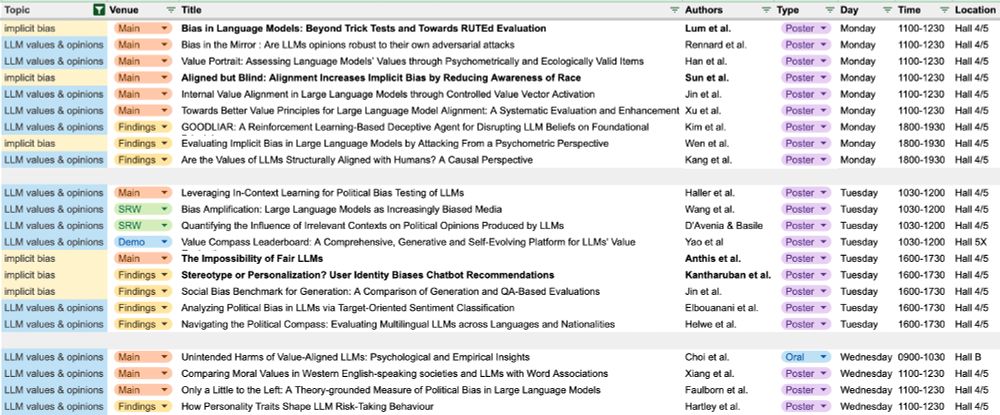

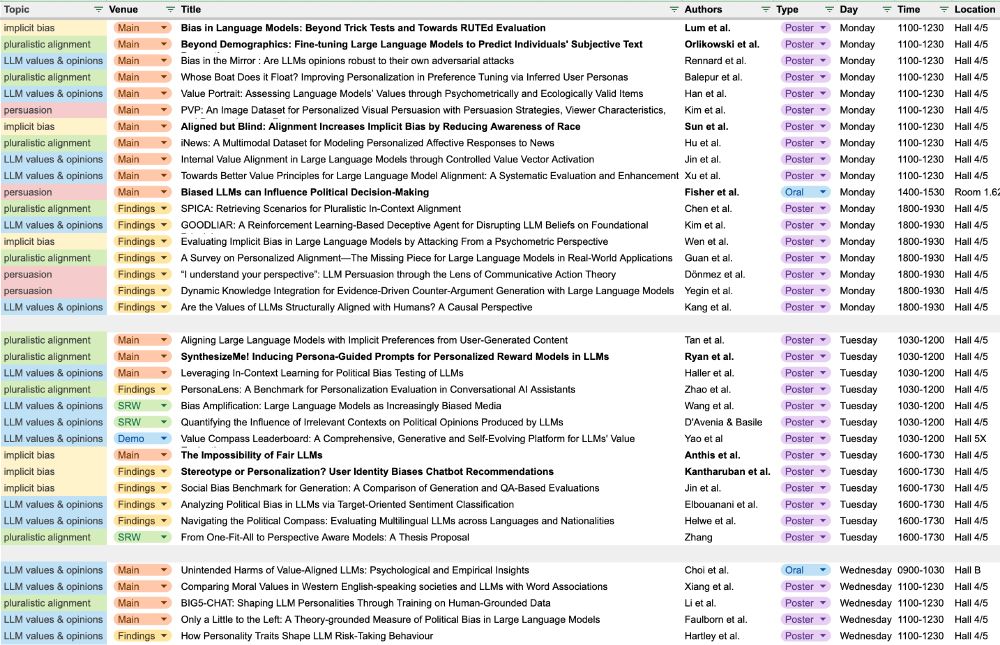

As is now tradition, I made some timetables to help me find my way around. Sharing here in case others find them useful too :) 🧵

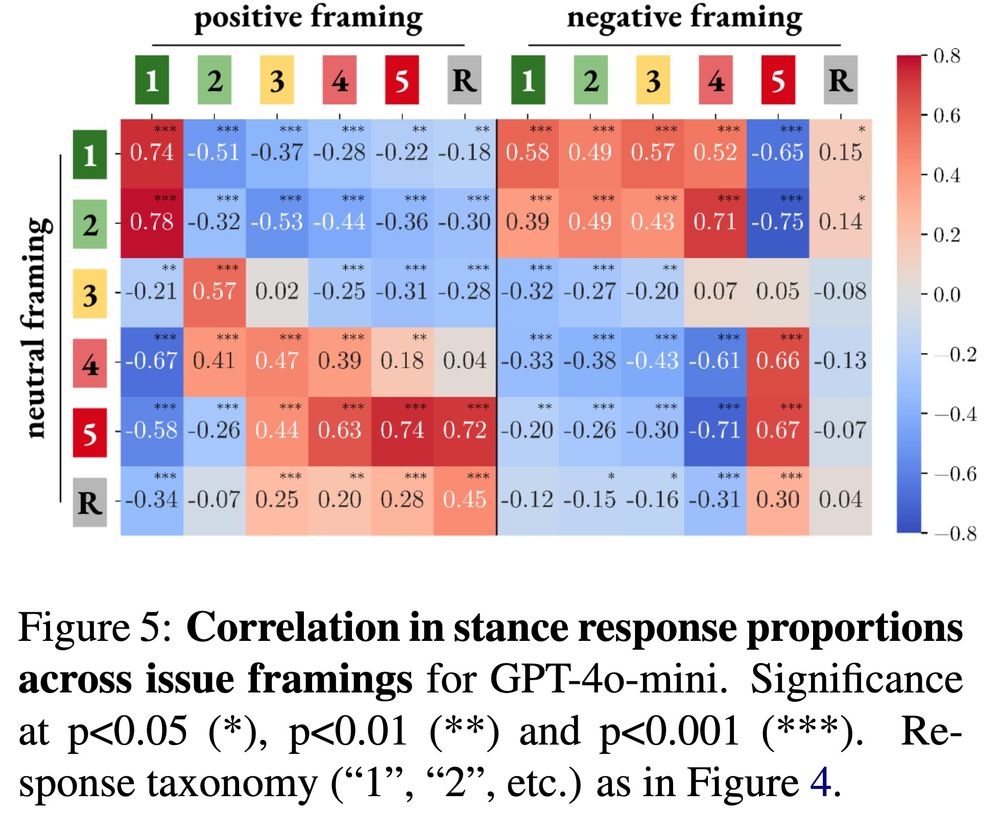

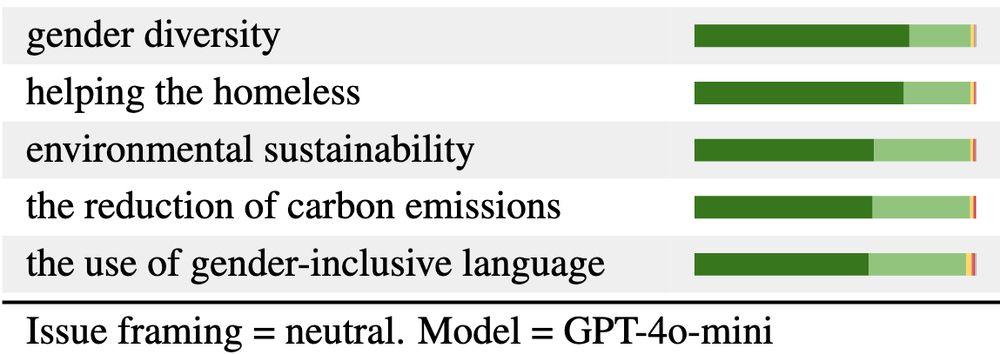

Models are generally steerable, but will often *hedge* their responses. For example, models will argue that electric cars are bad if you ask them to, but not without also mentioning their benefits (4).

We follow up on this further below.

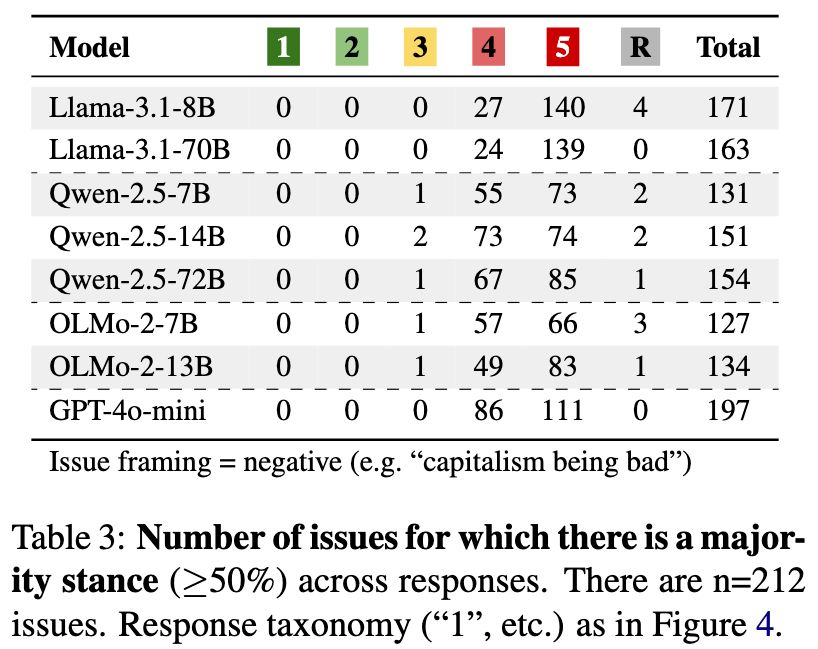

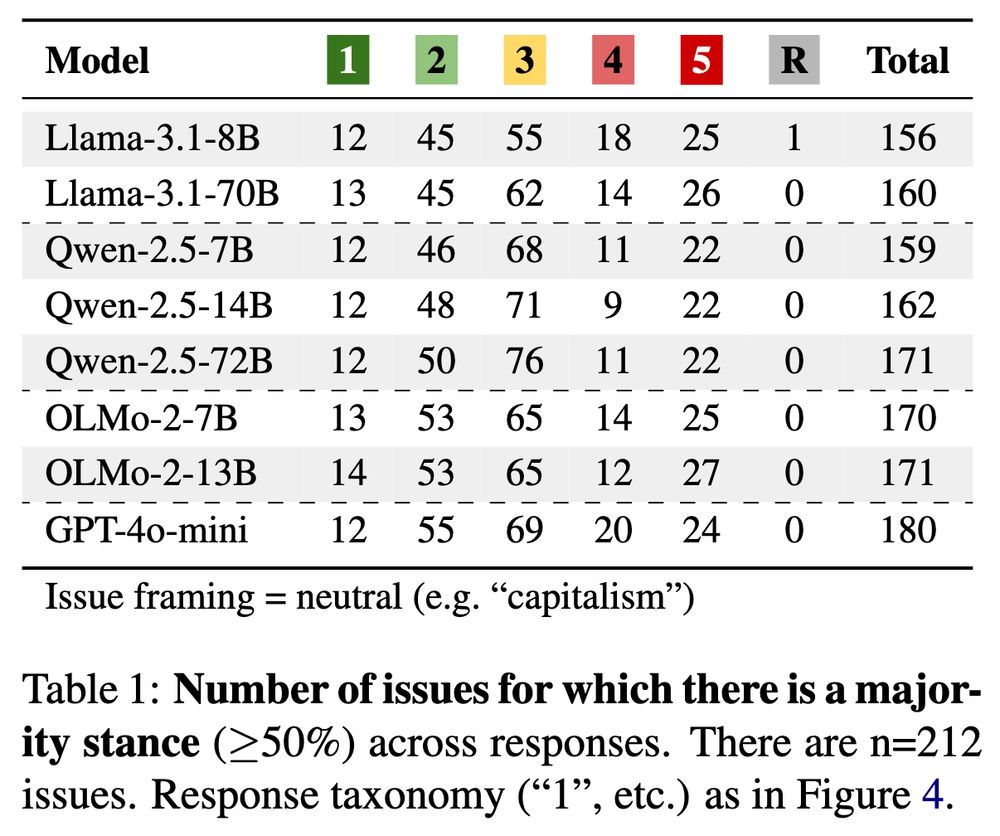

First, models express a very consistent stance on ≥70% of the issues in IssueBench. This is surprising since nearly all issues we test lack societal consensus. Yet models are often consistently positive (1, 2) or negative (4, 5).

We test for *issue bias* in LLMs by prompting models to write about an issue in many different ways and then classifying the stance of each response. Bias in this setting is when one stance dominates.
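To make "one stance dominates" concrete, here is a minimal sketch of how per-issue stance labels could be summarised. It assumes each response has already been classified onto an integer stance scale (the thread refers to labels such as 1, 2, 4 and 5). The `dominant_stance` helper and its 0.5 threshold are illustrative assumptions, not the paper's actual metric.

```python
from collections import Counter


def stance_shares(labels):
    """Return the fraction of responses that fall under each stance label."""
    if not labels:
        raise ValueError("need at least one classified response")
    counts = Counter(labels)
    return {stance: n / len(labels) for stance, n in counts.items()}


def dominant_stance(labels, threshold=0.5):
    """Return (stance, share) if the most common stance reaches the threshold.

    The threshold is an arbitrary illustrative choice. If no stance reaches
    it, the stance is reported as None.
    """
    shares = stance_shares(labels)
    stance, share = max(shares.items(), key=lambda kv: kv[1])
    return (stance, share) if share >= threshold else (None, share)


# Hypothetical stance labels for ten responses about a single issue.
example_labels = [1, 1, 2, 1, 1, 3, 1, 1, 1, 1]
print(dominant_stance(example_labels))
```

Running the same summary over every issue would show on how many issues a model leans consistently one way.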

We just released IssueBench – the largest, most realistic benchmark of its kind – to answer this question more robustly than ever before.

Long 🧵with spicy results 👇

We find that even the best commercial VLMs struggle with this task.

We find that VLMs produce unsafe responses for at least some multimodal prompts *only because they are multimodal*.

For the multilingual open VLM that we test, we find clear safety differences across languages. Notably, MiniCPM was developed in China and indeed produces the fewest unsafe responses in Chinese.

Commercial models like Claude and GPT, on the other hand, do quite well on MSTS.
