(and hopefully we'll be back for another iteration of the Big Picture next year w/ Allyson Ettinger, @norakassner.bsky.social, @sebruder.bsky.social)

Work done w/ Sang-Woo Lee, @norakassner.bsky.social, Daniela Gottesman, @riedelcastro.bsky.social, and @megamor2.bsky.social at Tel Aviv University, with some of it at Google DeepMind ✨

Paper 👉 arxiv.org/abs/2506.10979 🧵🔚

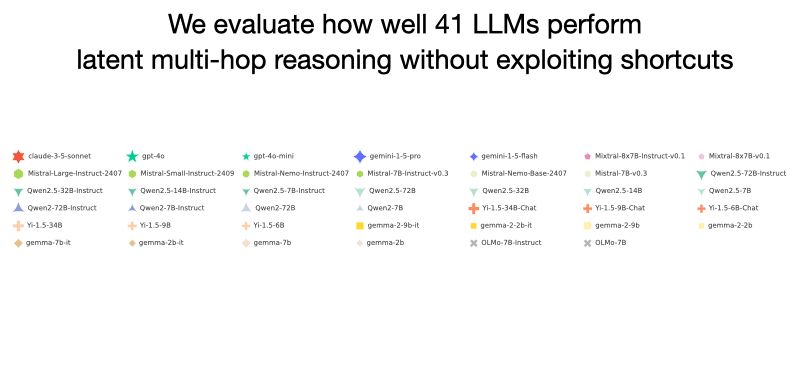

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes: they can recall and compose facts that were never seen together in training, without simply guessing the answer. But success depends heavily on the type of the bridge entity (80% for country, 6% for year)! 1/N
