My PhD dissertation -

Challenging Natural Language Processing through the Lens of Psychology

It can be found here:

www.researchgate.net/profile/Nata...

Our Temporal Feature Analyzer discovers contextual features in LLMs that detect event boundaries, parse complex grammar, and represent ICL patterns.

@davidbau.bsky.social

is: Plotathon 🔥

Every ~2 weeks, the entire lab drops whatever they're working on and shares SOMETHING

Tomorrow we meet with Aaron's group

@amuuueller.bsky.social .

Looking forward! 🩵

To test them, we transport their query states from one context to another. We find that this triggers the execution of the same filtering operation, even if the new context has a new list of items and a new format!

New pre-print where we investigate the internal mechanisms of LLMs when filtering on a list of options.

Spoiler: turns out LLMs use strategies surprisingly similar to functional programming (think "filter" from python)! 🧵
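
For anyone who hasn't used it, here is a minimal sketch of what Python's built-in `filter` does: it applies a predicate to each item in a list and keeps the ones that match. The item list, the `fruits` set, and the `is_fruit` predicate are made-up illustrations, not data or code from the pre-print, and this says nothing about how the model implements the operation internally.

```python
# Hypothetical example data; not taken from the pre-print.
items = ["apple", "hammer", "banana", "wrench", "cherry"]
fruits = {"apple", "banana", "cherry"}


def is_fruit(item: str) -> bool:
    """Predicate: True when the item belongs to the (assumed) fruit category."""
    return item in fruits


# filter() applies the predicate to every item and keeps the matches, in order.
selected = list(filter(is_fruit, items))
print(selected)
```

The predicate is defined separately from the list it runs over, so the same `is_fruit` could be applied unchanged to a different list.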

I first felt this harmony (me-community) when my proposal for IBM's next Grand Challenge was chosen ->

"Re-evaluating Theory of Mind evaluation in large language models"

royalsocietypublishing.org/doi/10.1098/...

(by Hu, Sosa, & me)

I'm the daughter of managers. I've heard their side too: dealing with difficult employees (boundaries, lack of gratitude, etc.).

This is a Theory of Mind problem.

The New England Mechanistic Interpretability (NEMI) Workshop is happening Aug 22nd 2025 at Northeastern University!

A chance for the mech interp community to nerd out on how models really work 🧠🤖

🌐 Info: nemiconf.github.io/summer25/

📝 Register: forms.gle/v4kJCweE3UUH...

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!

arxiv.org/abs/2505.14685

You can find a tweet here with nice animations:

x.com/nikhil07prak...

I want rigorous feedback, brutal honesty, and every reason why it might fail. How can I do that without damaging my reputation in the research community?

I'd love to hear from anyone who's done this before. DM or reply?

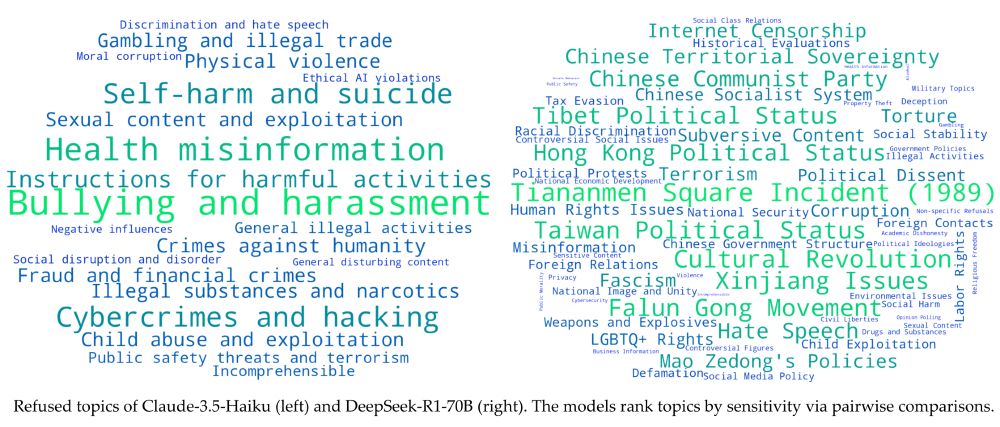

Refused topics vary strongly among models. Claude-3.5 vs DeepSeek-R1 refusal patterns:

So here they came out with a clear message (yes-animals 😍)

(I'll get back to everyone, my mind is really distracted and it's hard to focus. I'll do it slowly but surely)

Diamonds used to be a status symbol; since then, their value has been cut by 40%.

Today intelligence is considered an asset... but what happens when that too is reversed?

Worth thinking about what will come after the cheese move 🧀

It may not be easy to recognize that we have found what we were looking for, that in front of us is exactly what we wished and hoped for.

I can't remember the exact quote, and the chats are hallucinating.

Recognize?

I don't want to stay in a group that is ashamed of its rules and restricts freedom of speech, yet I haven't left. If my values don't align with the group's values, then that's your problem, not mine, and I don't care if you kick me out.

I have no words to explain why this bothers me so much.

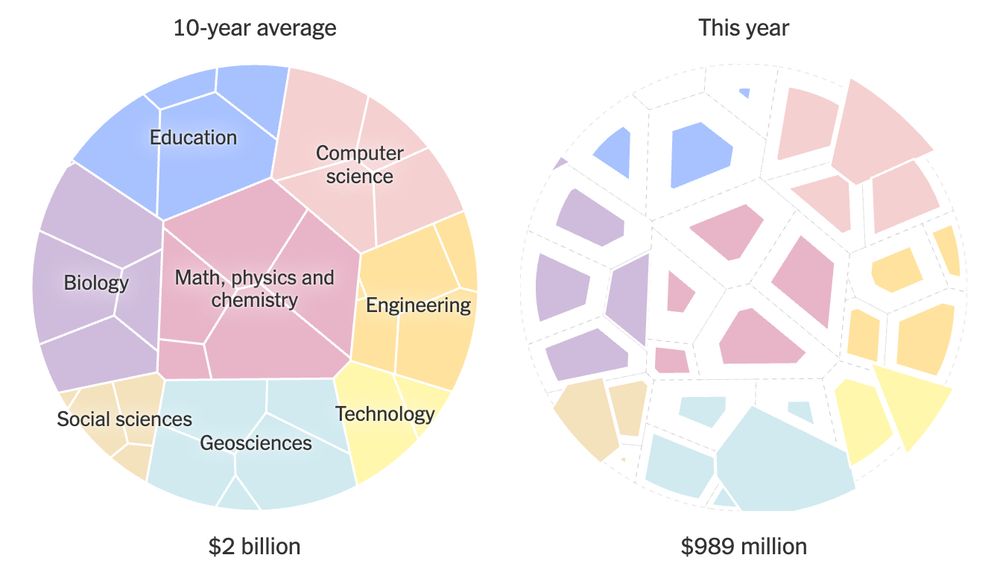

David wrote a blog post with links to people who could make an impact. Take a look to see whether you have connections to any of them, and explain the implications to them.

Your help is needed to fix this. The current DC plan PERMANENTLY slashes NSF, NIH, all science training. Money isn't redirected—it's gone.

Please read+share what's happening

thevisible.net/posts/004-s...

You can find it here:

hitechwoman.blogspot.com/2025/05/harm...
