Our Temporal Feature Analyzer discovers contextual features in LLMs that detect event boundaries, parse complex grammar, and represent in-context learning (ICL) patterns.

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

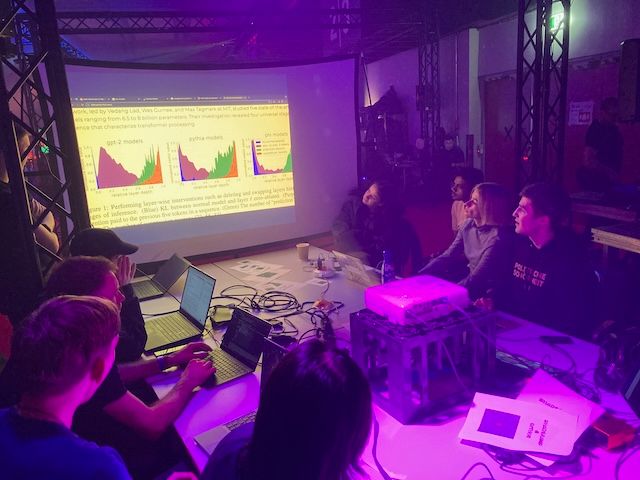

The New England Mechanistic Interpretability (NEMI) Workshop is happening Aug 22nd 2025 at Northeastern University!

A chance for the mech interp community to nerd out on how models really work 🧠🤖

🌐 Info: nemiconf.github.io/summer25/

📝 Register: forms.gle/v4kJCweE3UUH...

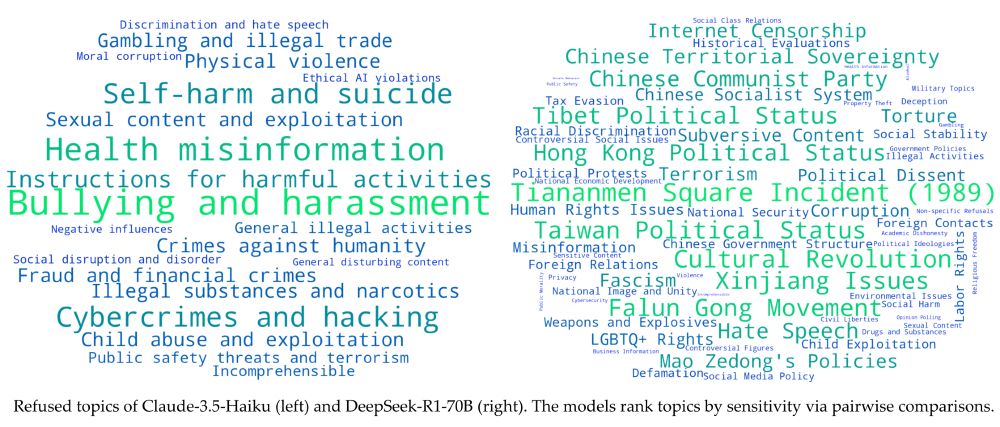

Refused topics vary widely across models. Claude-3.5 vs. DeepSeek-R1 refusal patterns:

arborproject.github.io

We are releasing SAE Bench, a suite of 8 sparse autoencoder (SAE) evaluations!

Project co-led with Adam Karvonen.