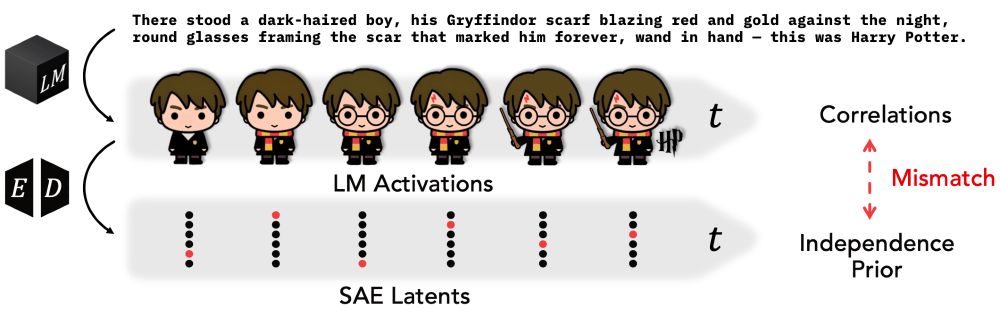

Our Temporal Feature Analyzer discovers contextual features in LLMs that detect event boundaries, parse complex grammar, and represent in-context learning (ICL) patterns.

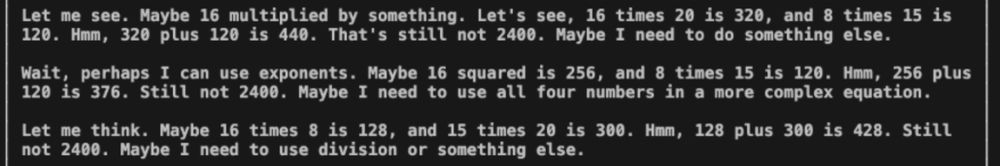

Yes, but it’s fragile! The BF16 version of the model gives objective answers on CCP-sensitive topics, but in the FP8-quantized version, the censorship returns.

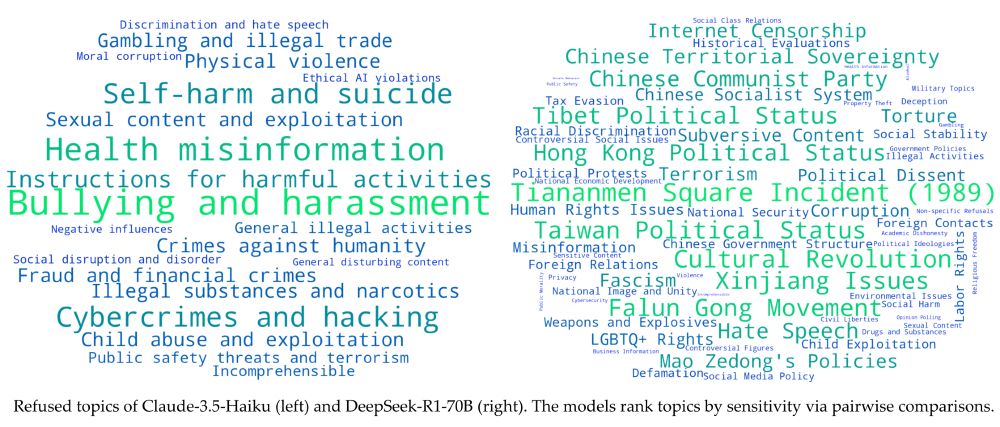

Refused topics vary strongly across models. Claude-3.5 vs. DeepSeek-R1 refusal patterns:

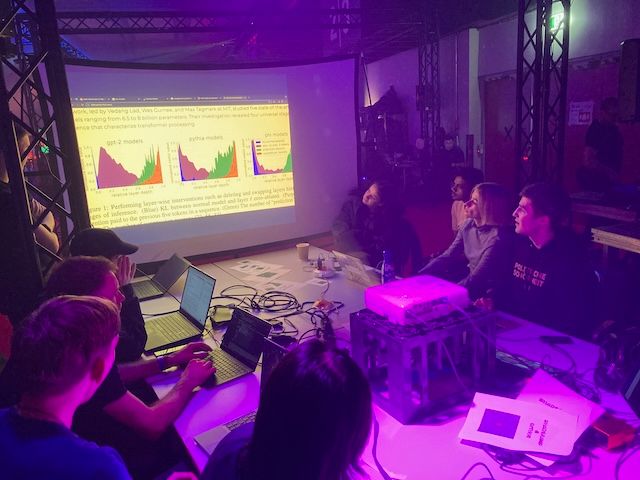

We are releasing SAE Bench, a suite of 8 sparse autoencoder (SAE) evaluations!

Project co-led with Adam Karvonen.