Ex.Robotics at Invento | 🔗 https://narvind2003.github.io

Here to strictly talk about ML, NNs and related ideas. Casual stuff on x.com/nagaraj_arvind

MCTS rollout pruning, a Python interpreter as verifier, and iterative self-improvement of intermediate steps during each round of training.

Brilliant stuff this💪

rStar-Math is the kind of paper I wish to see more of!
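The "Python interpreter verifier" part is easy to picture with a toy. Each candidate next step carries a bit of Python, and any candidate whose code fails to run on top of the steps accepted so far gets pruned. This is a minimal sketch under my own assumptions: the function names are hypothetical, and the paper also scores steps with the PPM and MCTS Q-values, which this skips. Never `exec` untrusted model output outside a sandbox.

```python
def runs_cleanly(code):
    """Return True if `code` executes without raising (toy check, no sandboxing)."""
    try:
        exec(code, {})  # fresh globals each time; unsafe for untrusted code
    except Exception:
        return False
    return True


def prune_steps(trajectory, candidates):
    """Keep candidate next steps whose code still runs after the accepted steps."""
    prefix = "\n".join(trajectory)
    return [step for step in candidates if runs_cleanly(prefix + "\n" + step)]


trajectory = ["x = 12 * 7"]  # steps accepted so far
candidates = [
    "y = x - 4",
    "y = x / z",          # NameError: z is never defined
    "y = int('eighty')",  # ValueError
    "assert x == 84",
]
print(prune_steps(trajectory, candidates))  # ['y = x - 4', 'assert x == 84']
```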

They're able to exceed o1-preview on math benchmarks (with the 7B model).

The magic sauce seems to be in co-evolving the SLM (small language model, the main policy model) and the PPM (Process Preference Model, the verifier).

arxiv.org/abs/2501.04519
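As I understand the paper, the PPM is not trained to regress MCTS Q-values directly. Instead, steps from the same prefix are ranked by their Q-values into preferred/dispreferred pairs, and the PPM is trained with a pairwise (Bradley-Terry style) ranking loss. A minimal sketch of that loss, with a function name and scores that are my own made-up illustration:

```python
import math


def pairwise_ranking_loss(score_pos, score_neg):
    """-log(sigmoid(score_pos - score_neg)), computed without overflow."""
    margin = score_pos - score_neg
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite as -m + log(1 + e^m) so exp() cannot overflow.
    return -margin + math.log1p(math.exp(margin))


# Hypothetical PPM scores for (better step, worse step) pairs from the same prefix.
pairs = [(2.0, -1.0), (0.5, 0.3), (-0.2, 1.0)]
losses = [pairwise_ranking_loss(pos, neg) for pos, neg in pairs]
print(sum(losses) / len(losses))
```

The loss only cares about the score gap within a pair, so the verifier learns to rank steps rather than to hit exact Q-value targets.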

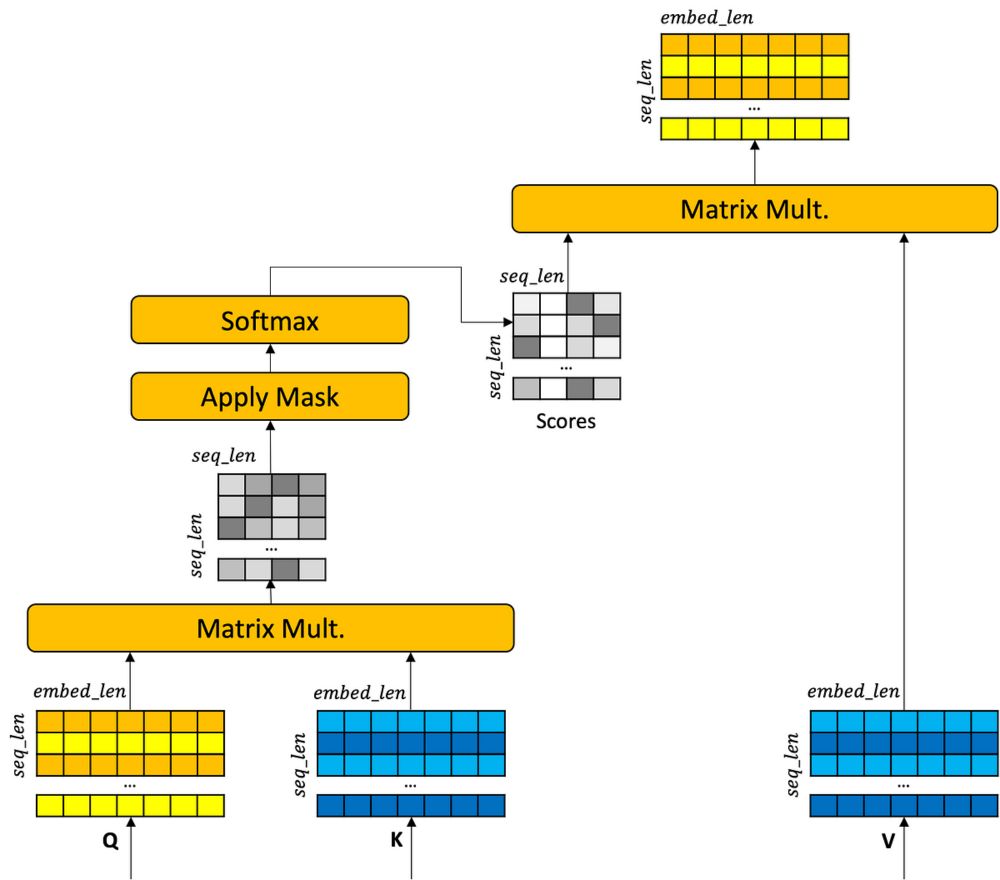

This beautiful blog post by Chris Fleetwood explains the significance of rotary position embeddings (RoPE): rotating Q and K preserves meaning (magnitude) while encoding relative position (angle shift) 🔥🔥
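A tiny 2-D check of both properties, assuming a single rotation frequency `theta` (real RoPE rotates each pair of dimensions at its own frequency; 0.1 here is arbitrary). Rotating q by its position m and k by its position n leaves their norms unchanged, and their dot product depends only on the offset between the positions.

```python
import math


def rotate(vec, pos, theta=0.1):
    """Rotate a 2-D vector by pos * theta radians (one RoPE frequency pair)."""
    x, y = vec
    a = pos * theta
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a))


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def norm(u):
    return math.hypot(*u)


q, k = (1.0, 2.0), (0.5, -1.5)
# Magnitude ("meaning") is unchanged by the rotation.
print(norm(q), norm(rotate(q, 7)))
# Both pairs are 3 positions apart, so the scores match (up to float error).
print(dot(rotate(q, 5), rotate(k, 2)), dot(rotate(q, 105), rotate(k, 102)))
```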

I have tried a bunch of ways and it refuses to!! 😭

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining as my first Bluesky entry!

🧵 A short and hopefully informative thread:

RSS'25 (abs): 49 days.

SIGGRAPH'25 (paper-md5): 55 days.

RSS'25 (paper): 56 days.

ICML'25: 62 days.

RLC'25 (abs): 77 days.

RLC'25 (paper): 84 days.

ICCV'25: 97 days.

It may work 🙏

Trying to find those people and follow them here.

More bitterness.

"Us versus Them" factions: finger-pointing, name-calling and blame-shifting more than ever before.

Still, I am thankful for all those I get to interact with, learn from and share my ideas and happiness with.

I'm thankful for you all🙏

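```python
from atproto import FirehoseSubscribeReposClient, parse_subscribe_repos_message

def on_message(message):
    # Print each frame's header alongside the decoded repo event
    print(message.header, parse_subscribe_repos_message(message))

# Connects to the firehose and streams events until interrupted (blocking call)
FirehoseSubscribeReposClient().start(on_message)
```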

(Unless you're training 'task vectors' for junk, to improve your models.)
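For context, a task vector (from task arithmetic) is just the fine-tuned weights minus the pre-trained weights, and adding a negated task vector steers a model away from that behaviour. A toy sketch with plain Python lists standing in for tensors; the "junk" fine-tune, parameter name, and numbers are hypothetical:

```python
def task_vector(pretrained, finetuned):
    """tau = theta_finetuned - theta_pretrained, parameter by parameter."""
    return {
        name: [ft - pt for ft, pt in zip(finetuned[name], pretrained[name])]
        for name in pretrained
    }


def add_task_vector(weights, tau, scale):
    """theta_new = theta + scale * tau; a negative scale subtracts the behaviour."""
    return {
        name: [w + scale * t for w, t in zip(weights[name], tau[name])]
        for name in weights
    }


base = {"layer.weight": [0.5, -1.0, 2.0]}
junk_finetuned = {"layer.weight": [0.7, -1.5, 2.0]}  # hypothetical fine-tune on junk
tau_junk = task_vector(base, junk_finetuned)
print(add_task_vector(base, tau_junk, scale=-1.0))  # move away from "junk"
```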

Vinyals...

For the next 8-9 years it's going to be fairly easy to predict the NeurIPS Test of Time awards.

Kaiming He 2025 anyone??

Paper: arxiv.org/abs/2411.17698

Webpage: ificl.github.io/MultiFoley/

Led by @czyang.bsky.social!

bsky.app/profile/czya...

DeepSeek's model (its inner-monologue thinking tokens) is super interesting to watch. But the CoT trajectories lead it to 2 incorrect solutions before it runs out of thinking time: it either adds an extra 8 or uses cube roots.

Can't nest like👇

The sense of smell got added to the mix as we see companies like Osmo build a digital nose and a smell printer.

IMHO, as with the other senses (vision, touch), the digital nose (input) will get adopted sooner than the smell printer (output).

Perhaps audio is an exception 🤔

Their new blog post describes Coalescence: using finite-state machines to generate JSON output up to 5x faster during LLM inference! 🔥

Pydantic model -> JSON schema -> regex -> FSM -> Selective sampling! 👏👏

blog.dottxt.co/coalescence....
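The speed-up idea shows up even in a toy. Once a regex is compiled to an FSM, any state with exactly one legal continuation needs no model call at all, so those characters can be appended directly. Below is a minimal character-level sketch under my own assumptions: a hand-built FSM for `{"age": [0-9]+}` and a scripted stand-in for the model. A real implementation works at the token level and is more involved.

```python
import string


def build_fsm(prefix, char_class, suffix):
    """Toy character-level FSM for: prefix, then one or more char_class chars, then suffix."""
    trans = {i: {ch: i + 1} for i, ch in enumerate(prefix)}  # forced literal chars
    first, loop = len(prefix), len(prefix) + 1
    trans[first] = {c: loop for c in char_class}  # at least one class char
    trans[loop] = {c: loop for c in char_class}   # more class chars, or...
    trans[loop][suffix[0]] = loop + 1             # ...start the suffix
    for j, ch in enumerate(suffix[1:], start=1):
        trans[loop + j] = {ch: loop + j + 1}      # forced suffix chars
    return trans, loop + len(suffix)              # (transitions, final state)


def generate(trans, final, choose):
    """Walk the FSM; only call the model (`choose`) where there is a real choice."""
    state, out, model_calls = 0, [], 0
    while state != final:
        allowed = trans[state]
        if len(allowed) == 1:
            ch = next(iter(allowed))  # deterministic: append without a model call
        else:
            model_calls += 1
            ch = choose(sorted(allowed))
        out.append(ch)
        state = allowed[ch]
    return "".join(out), model_calls


fsm, final = build_fsm('{"age": ', string.digits, "}")
picks = iter("42}")  # scripted stand-in for the model's choices
print(generate(fsm, final, lambda allowed: next(picks)))  # ('{"age": 42}', 3)
```

Only 3 of the 11 output characters needed a "model" decision; the 8-character field prefix was free.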

I have moved to a custom domain as well 😊

Directions here for anyone interested in doing the same:

bsky.social/about/blog/4...

I feel the uncertainty is beautifully captured by the final softmax's token logprobs.

Let me see what the authors actually have to say...
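For reference, the simplest way to read uncertainty off the final softmax is to look at the log-probability of the top token and the entropy of the whole next-token distribution. A minimal sketch with made-up logits; this is a common heuristic, not necessarily what the authors propose.

```python
import math


def log_softmax(logits):
    """Numerically stable log-probabilities from raw logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def entropy(logprobs):
    """Shannon entropy (in nats) of a distribution given as log-probabilities."""
    return -sum(math.exp(lp) * lp for lp in logprobs)


# Hypothetical next-token logits over a 4-token vocabulary.
confident = log_softmax([8.0, 1.0, 0.5, 0.0])
unsure = log_softmax([2.0, 1.9, 1.8, 1.7])
print("top-token logprob:", max(confident), max(unsure))
print("entropy (nats):", entropy(confident), entropy(unsure))
```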

Even harder sans tool use and iteration👇

Use any mathematical signs wherever you need:

2 + 2 + 2 = 6

3 3 3 = 6

4 4 4 = 6

5 5 5 = 6

6 6 6 = 6

7 7 7 = 6

8 8 8 = 6

9 9 9 = 6
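With tool use, the puzzle yields to brute force. A minimal sketch, assuming the allowed signs are + - * / and square roots (nested at most twice per subexpression), with the three digits kept in order; factorials and cube roots are deliberately left out. Among other answers, it finds 8 - √√(8 + 8) for the 8 row.

```python
from fractions import Fraction
from math import isqrt


def with_roots(exprs, depth=2):
    """Add up to `depth` nested exact square roots to each (value, text) pair."""
    out, frontier = set(exprs), set(exprs)
    for _ in range(depth):
        frontier = {
            (Fraction(isqrt(v.numerator)), "√" + t)
            for v, t in frontier
            if v >= 0 and v.denominator == 1 and isqrt(v.numerator) ** 2 == v.numerator
        }
        out |= frontier
    return out


def combine(left, right):
    """All a op b for op in + - * /, skipping division by zero."""
    out = set()
    for a, ta in left:
        for b, tb in right:
            out.add((a + b, f"({ta} + {tb})"))
            out.add((a - b, f"({ta} - {tb})"))
            out.add((a * b, f"({ta} * {tb})"))
            if b != 0:
                out.add((a / b, f"({ta} / {tb})"))
    return out


def solve(d, target=6):
    """Expressions using digit d three times (in order) that evaluate to target."""
    leaf = with_roots({(Fraction(d), str(d))})
    pair = with_roots(combine(leaf, leaf))
    # Both groupings: (d op d) op d and d op (d op d).
    results = with_roots(combine(pair, leaf)) | with_roots(combine(leaf, pair))
    return sorted(t for v, t in results if v == target)


for d in range(2, 10):
    print(d, solve(d)[0])
```

Exact `Fraction` arithmetic avoids float noise, so a square root is only taken when the result is exactly rational.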
