👨‍🔬 RS DeepMind

Past:

👨‍🔬 R Midjourney 1y 🧑‍🎓 DPhil AIMS Uni of Oxford 4.5y

🧙‍♂️ RE DeepMind 1y 📺 SWE Google 3y 🎓 TUM

👤 @nwspk

It turns out, Bayesian reasoning has some surprising answers - no cognitive biases needed! Let's explore this fascinating paradox quickly ☺️

The paper is rather critical of reasoning LLMs (LRMs):

x.com/MFarajtabar...

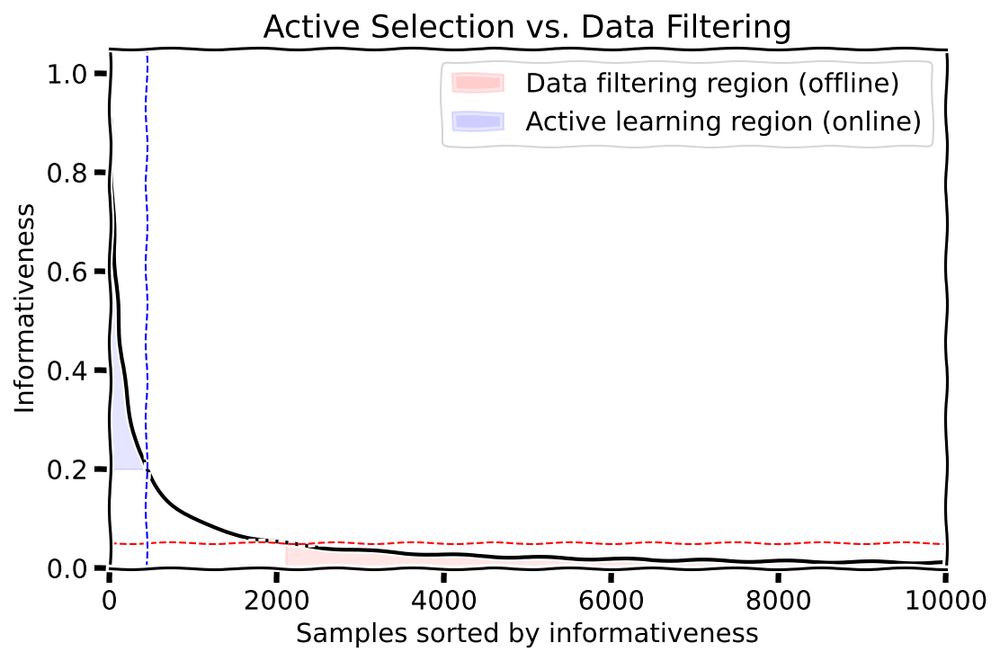

What is the fundamental difference between active learning and data filtering?

Well, obviously, the difference is that:

1/11

From Gen42: "Hive is an award-winning board game with a difference. There is no board. The pieces are added to the playing area thus creating the board. As more and more pieces are added the game becomes a fight to ...

🧵1/5

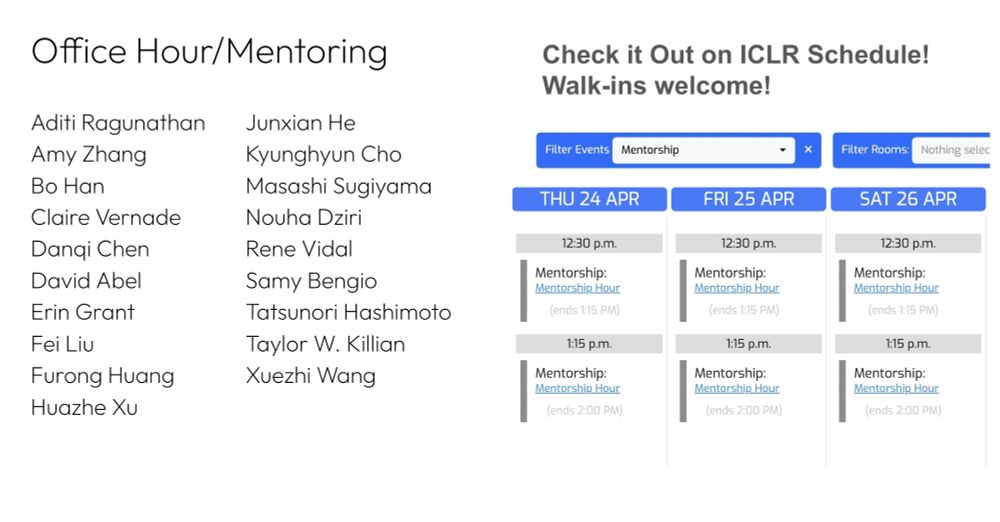

More info here:

blog.iclr.cc/2025/04/23/i...

You can find all the sessions on the ICLR.cc schedule!

With @pokanovic.bsky.social, Jannes Kasper, @thoefler.bsky.social, @arkrause.bsky.social, and @nmervegurel.bsky.social

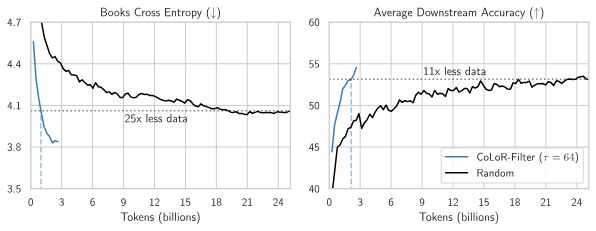

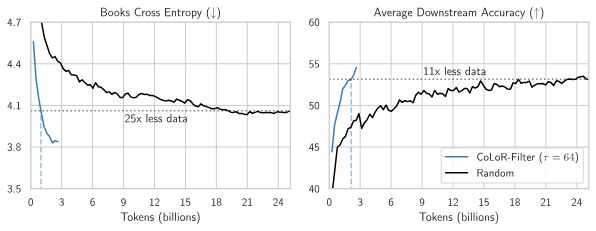

With @hlzhang109.bsky.social @schwarzjn.bsky.social @shamkakade.bsky.social

Slava Ukraini.

Are you bursting with anticipation to see what they are? Check out this blog post, and read down-thread!! 🎉🧵👇 1/n

medium.com/@TmlrOrg/ann...

bsky.app/profile/bla...

blackhc.github.io/balitu/

and I'll try to add proper course notes over time 🤗

By giving models more "time to think," Llama 1B outperforms Llama 8B in math—beating a model 8x its size. The full recipe is open-source!

Join us at East Meeting Room 8, 15, or online!

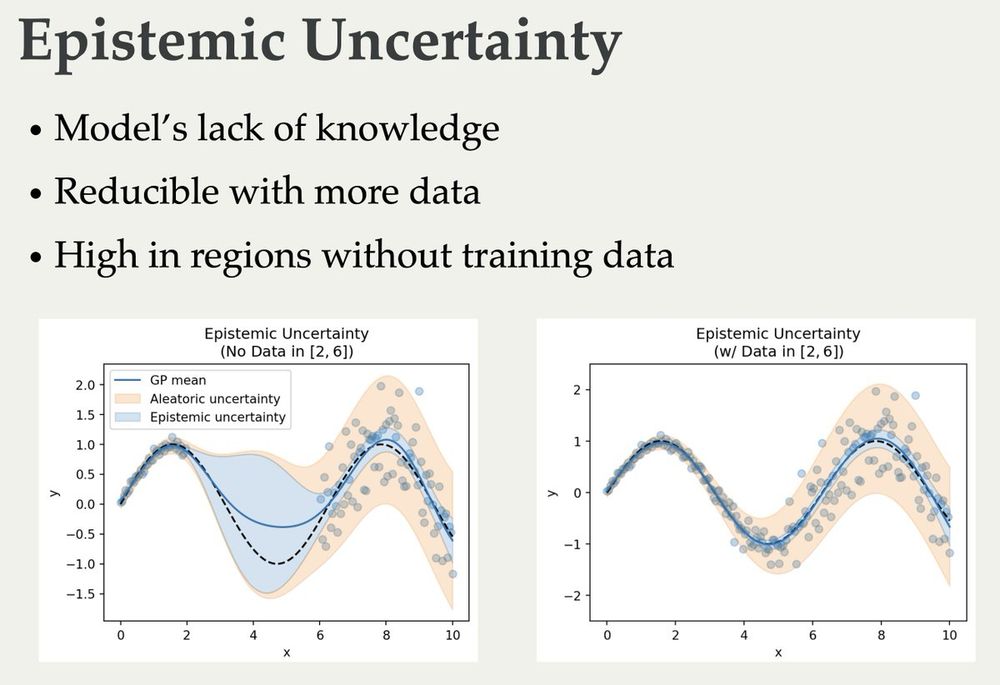

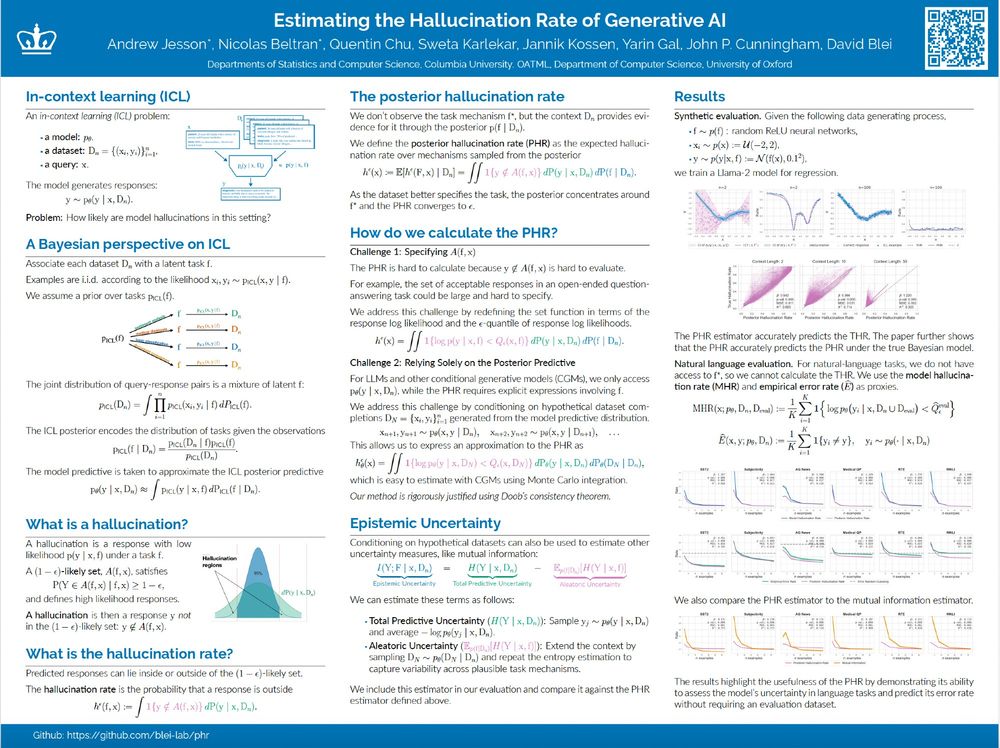

We will be presenting Estimating the Hallucination Rate of Generative AI at NeurIPS. Come if you'd like to chat about epistemic uncertainty for In-Context Learning, or uncertainty more generally. :)

Location: East Exhibit Hall A-C #2703

Time: Friday @ 4:30

Paper: arxiv.org/abs/2406.07457
