📢 The short description of the tasks is now available on the website 👇

dcase.community/challenge2025/

- Give an intuition of how transformers work

- Explain what each section of the paper means and how you can understand and implement it

- Code it up in PyTorch from a beginner's perspective

goyalpramod.github.io/blogs/Transf...

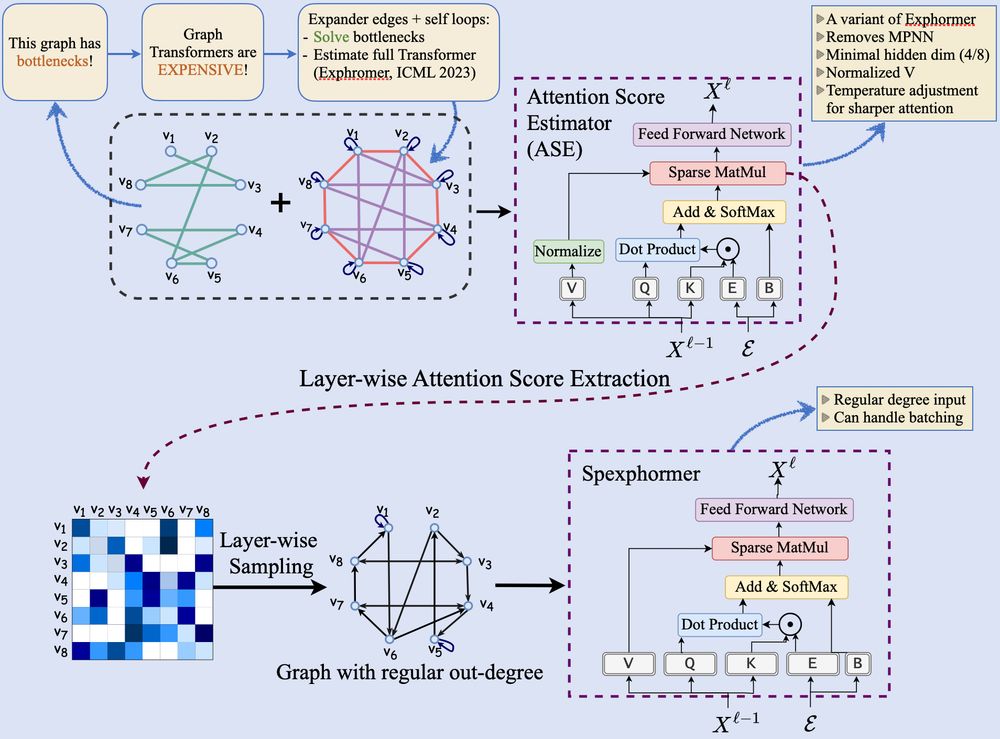

NeurIPS link: neurips.cc/virtual/2024...

Paper: arxiv.org/pdf/2410.05997

🧪📍Poster #3602 (East Hall A-C)

Sketch2Sound can create sounds from sonic imitations (i.e., a vocal imitation or a reference sound) via interpretable, time-varying control signals.

paper: arxiv.org/abs/2412.08550

web: hugofloresgarcia.art/sketch2sound

dcase.community/articles/cha...

Stay tuned for more details.

Intriguing Properties of Robust Classification arxiv.org/abs/2412.04245

Please consider applying if you have expertise in the domain or related areas such as multimodal models, video generation 📹, etc.

boards.greenhouse.io/deepmind/job...

[1/13]

#NeurIPS2024

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining as my first Bluesky entry!

🧵 A short and hopefully informative thread:

It would be good to add lots more people, so do comment and I'll add you!

go.bsky.app/97fAH2N

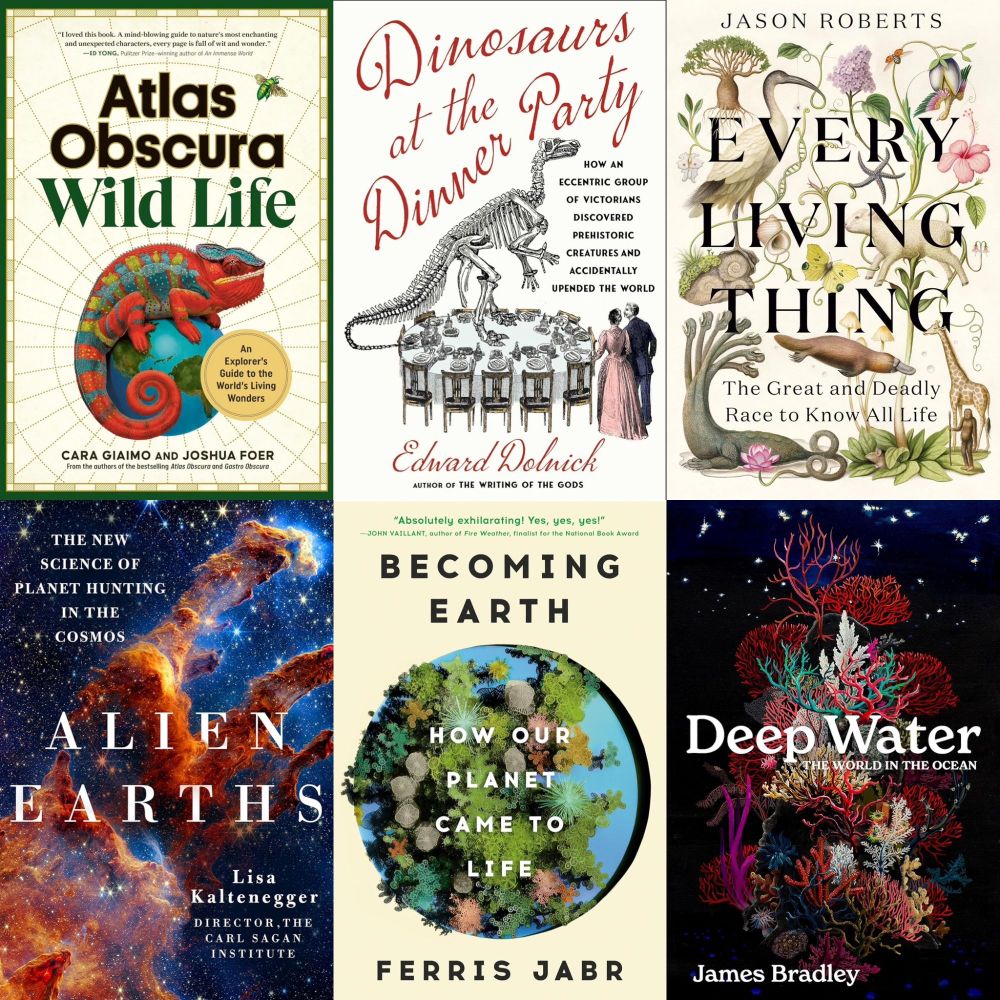

Introducing: ✨🎁📚 The 2024 Holiday Gift Guide to Nature & Science Books ✨🎁📚

Please share: Let's make this go viral in time for Black Friday / holiday shopping!

EPIC-SOUNDS: A Large-scale Dataset of Actions That Sound

+ sound event detection baseline

+ detailed annotations pipeline

+ analysis of visual vs audio events

+ audio-visual models

arxiv.org/abs/2302.006...

In the meantime, if you are curious how Multimodal LLMs work, I recently wrote an article to explain the main & recent approaches: magazine.sebastianraschka.com/p/understand...

Young people have no idea that they live in a golden age w.r.t. access to knowledge.

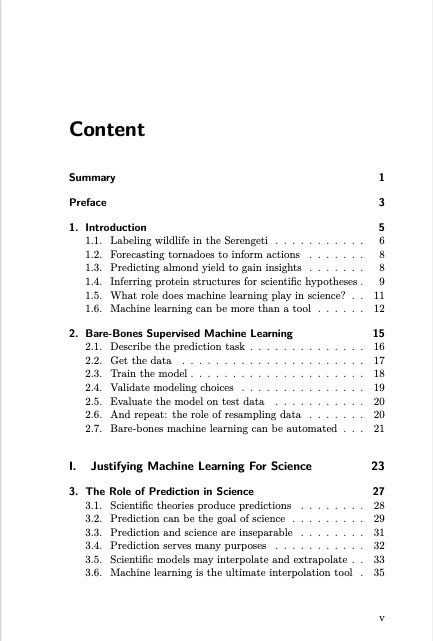

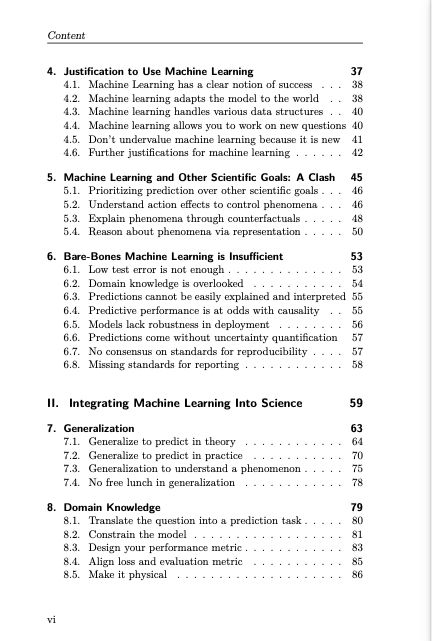

Timo and I recently published a book, and even if you are not a scientist, you'll find useful overviews of topics like causality and robustness.

The best part is that you can read it for free: ml-science-book.com

go.bsky.app/LGmct4z