Funemployed, prev founder at bite.ai (acquired by MyFitnessPal), principal ML eng at MFP, 1st employee at Clarifai, ML at Columbia, NYU Future Labs

I mostly disagree with his essay and think it missed the point

You can read it here: thomwolf.io/blog/deepsee...

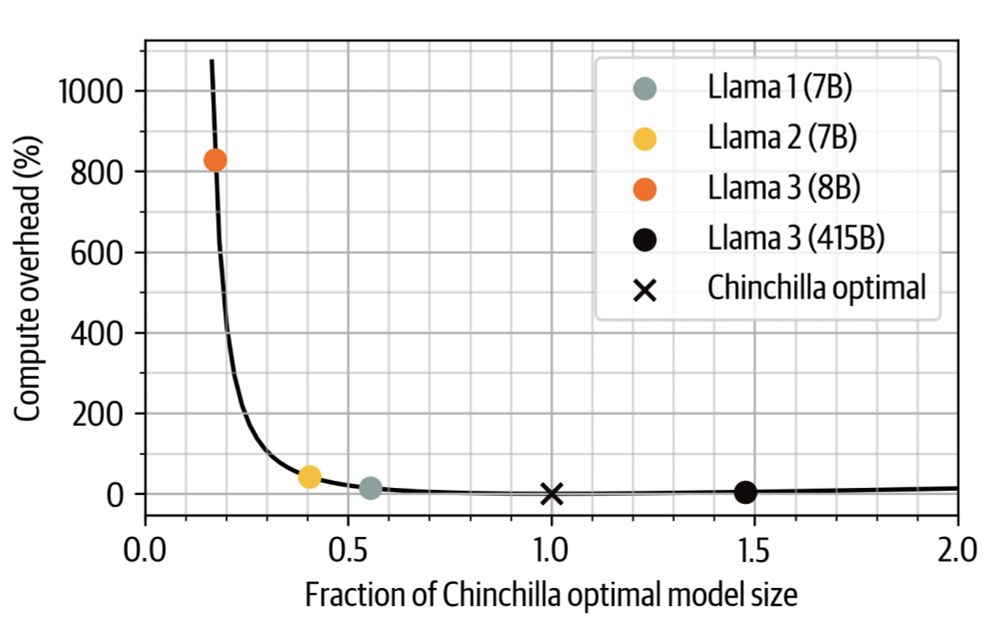

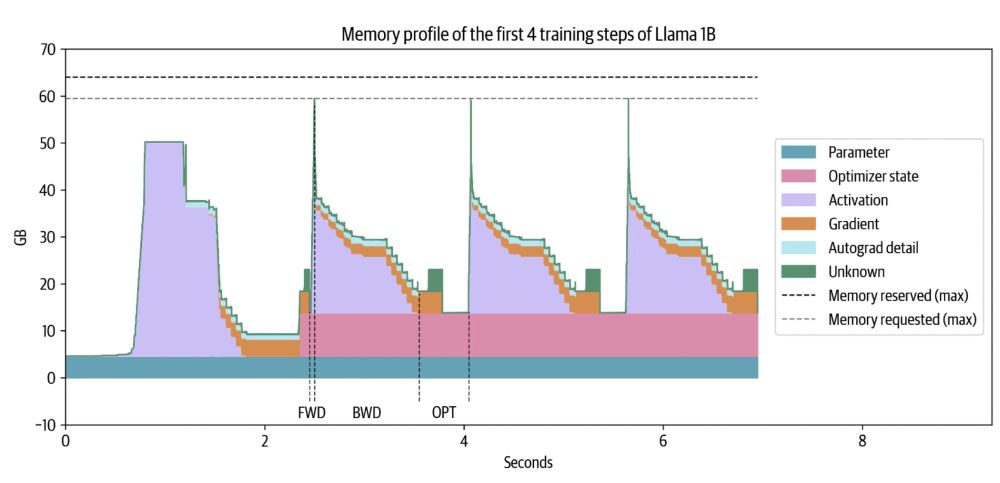

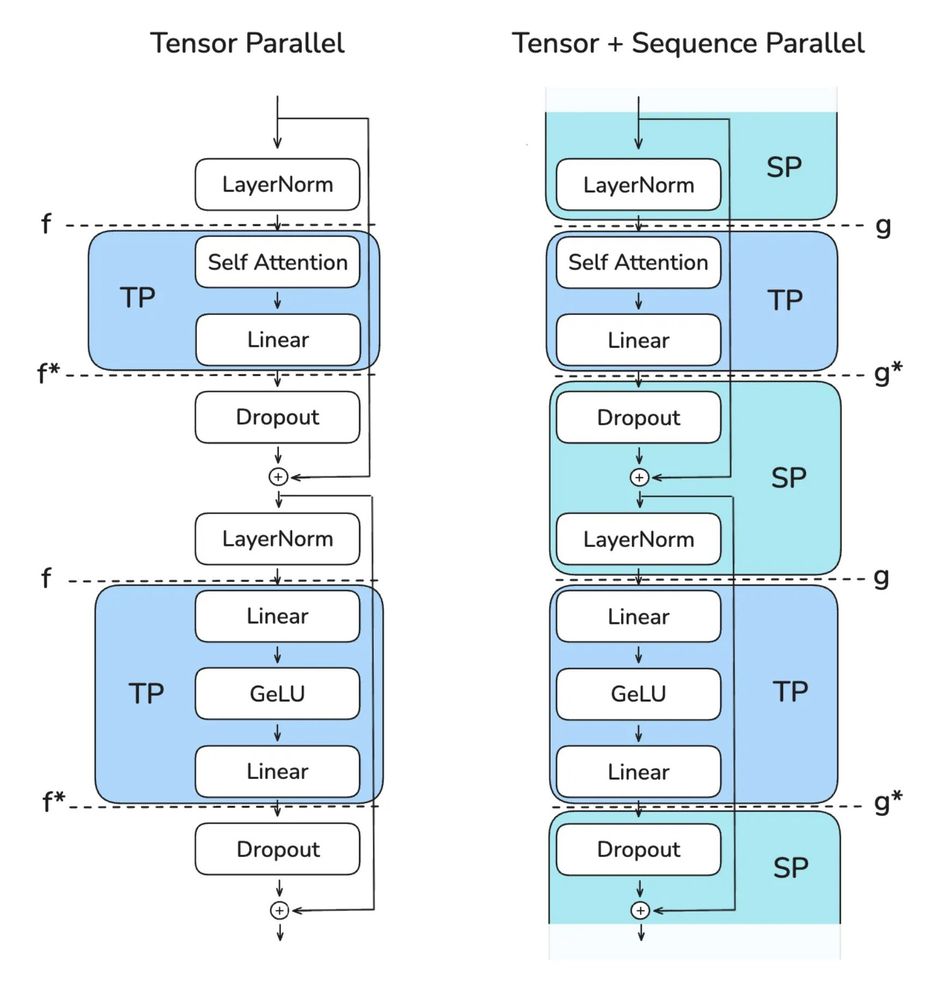

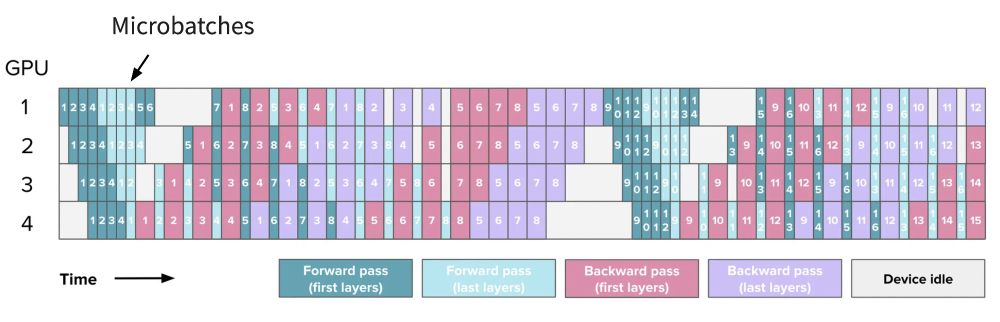

Gave a workshop at Uni Bern: it starts with scaling laws, moves on to web-scale data processing, and finishes with training using 4D parallelism and ZeRO.

*assuming your home includes an H100 cluster
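For a feel of the arithmetic behind two of those topics, here is a back-of-the-envelope sketch. It is not the workshop material; it assumes the ZeRO paper's accounting for mixed-precision Adam (16 bytes of model state per parameter: 2 for fp16 weights, 2 for fp16 gradients, 12 for fp32 master weights, momentum and variance), the common C ≈ 6·N·D estimate of training FLOPs, and the rough Chinchilla rule of thumb of about 20 tokens per parameter. Function names are hypothetical, activations are ignored, and 4D parallelism is not modeled.

```python
# Bytes per parameter for mixed-precision Adam (assumed ZeRO-style accounting).
PARAM_BYTES = 2   # fp16 weights
GRAD_BYTES = 2    # fp16 gradients
OPTIM_BYTES = 12  # fp32 master weights + momentum + variance


def zero_memory_gb(num_params, num_gpus, stage):
    """Per-GPU model-state memory in GB (1e9 bytes); activations are excluded."""
    p, n = num_params, num_gpus
    if stage == 0:    # plain data parallelism: every GPU holds everything
        total = (PARAM_BYTES + GRAD_BYTES + OPTIM_BYTES) * p
    elif stage == 1:  # optimizer states partitioned across GPUs
        total = (PARAM_BYTES + GRAD_BYTES) * p + OPTIM_BYTES * p / n
    elif stage == 2:  # optimizer states and gradients partitioned
        total = PARAM_BYTES * p + (GRAD_BYTES + OPTIM_BYTES) * p / n
    elif stage == 3:  # parameters partitioned as well
        total = (PARAM_BYTES + GRAD_BYTES + OPTIM_BYTES) * p / n
    else:
        raise ValueError("stage must be 0, 1, 2 or 3")
    return total / 1e9


def training_flops(num_params, num_tokens):
    # Rough estimate: ~6 FLOPs per parameter per token (forward + backward).
    return 6 * num_params * num_tokens


def chinchilla_tokens(num_params, tokens_per_param=20):
    # Rule of thumb for compute-optimal training: ~20 tokens per parameter.
    return tokens_per_param * num_params


for stage in range(4):
    print(f"ZeRO stage {stage}: {zero_memory_gb(7.5e9, 64, stage):.2f} GB per GPU")

n_params = 1e9
n_tokens = chinchilla_tokens(n_params)
print(f"{n_params:.0e} params -> {n_tokens:.0e} tokens, {training_flops(n_params, n_tokens):.1e} FLOPs")
```

For a 7.5B-parameter model on 64 GPUs this gives 120 GB per GPU without partitioning, then about 31.4, 16.6 and 1.9 GB for stages 1 to 3, which is why partitioning matters long before activations enter the picture.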

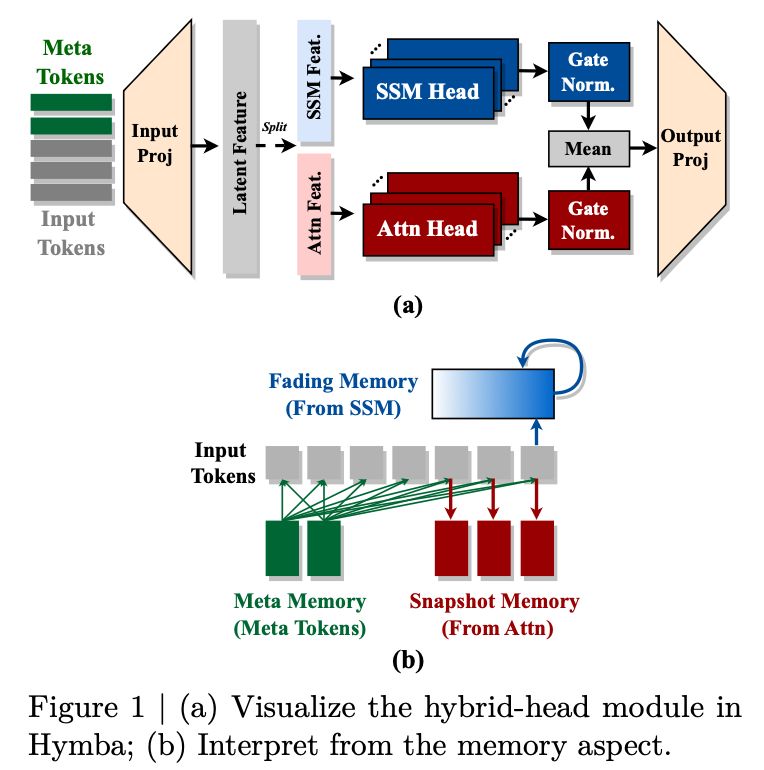

Their architecture consists of Hymba hybrid blocks, with Mamba and Attention connected in parallel. They found this design to be more effective in disentangling attention into linear and non-linear components.
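The idea of running attention and an SSM side by side on the same input can be sketched in NumPy. This is a simplified illustration, not Hymba's implementation: the Mamba branch is replaced by a hypothetical non-selective diagonal linear recurrence, there is a single attention head, and the fusion rule (normalize each branch, scale per channel, sum, project) is an assumption about the general shape of a parallel hybrid block. All function and parameter names are hypothetical.

```python
import numpy as np


def rms_norm(x, eps=1e-6):
    # Scale each token vector to unit RMS so both branches contribute on a similar scale.
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)


def causal_attention(x, wq, wk, wv):
    # Single-head causal softmax attention over a (seq_len, d) input.
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)  # True above the diagonal
    scores = np.where(future, -np.inf, scores)
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def diagonal_ssm(x, a, b, c):
    # Hypothetical stand-in for Mamba: per-channel recurrence
    # h_t = a * h_{t-1} + b * x_t,  y_t = c * h_t  (no input-dependent selection).
    h = np.zeros(x.shape[-1])
    out = np.empty_like(x)
    for t in range(x.shape[0]):
        h = a * h + b * x[t]
        out[t] = c * h
    return out


def parallel_hybrid_block(x, p):
    # Both branches read the same input in parallel rather than being stacked.
    attn_out = rms_norm(causal_attention(x, p["wq"], p["wk"], p["wv"]))
    ssm_out = rms_norm(diagonal_ssm(x, p["a"], p["b"], p["c"]))
    # Per-channel scales (learnable in a real model) fuse the branches before projection.
    fused = p["beta_attn"] * attn_out + p["beta_ssm"] * ssm_out
    return fused @ p["w_out"]


def init_params(d, rng):
    s = 1.0 / np.sqrt(d)
    return {
        "wq": rng.normal(0.0, s, (d, d)),
        "wk": rng.normal(0.0, s, (d, d)),
        "wv": rng.normal(0.0, s, (d, d)),
        "a": rng.uniform(0.5, 0.99, d),  # decay in (0, 1) keeps the recurrence stable
        "b": rng.normal(0.0, 1.0, d),
        "c": rng.normal(0.0, 1.0, d),
        "beta_attn": np.full(d, 0.5),
        "beta_ssm": np.full(d, 0.5),
        "w_out": rng.normal(0.0, s, (d, d)),
    }


rng = np.random.default_rng(0)
d, seq_len = 16, 8
params = init_params(d, rng)
x = rng.normal(size=(seq_len, d))
y = parallel_hybrid_block(x, params)
print(y.shape)
```

Because both branches are causal, perturbing the last token leaves every earlier output unchanged; the attention branch gives precise token-to-token recall while the recurrence carries a compressed running summary of the context.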

Their architecture consists of Hymba hybrid blocks, with Mamba and Attention connected in parallel. They found this design to be more effective in disentangling attention into linear and non-linear components.

Delighted to share AIMv2, a family of strong, scalable, and open vision encoders that excel at multimodal understanding, recognition, and grounding 🧵

paper: arxiv.org/abs/2411.14402

code: github.com/apple/ml-aim

HF: huggingface.co/collections/...

It beats Llama 3B with only 1.5B parameters

arxiv.org/abs/2411.13676

It beats llama 3b with only 1.5b parameters

arxiv.org/abs/2411.13676