http://faculty.washington.edu/maxkw/

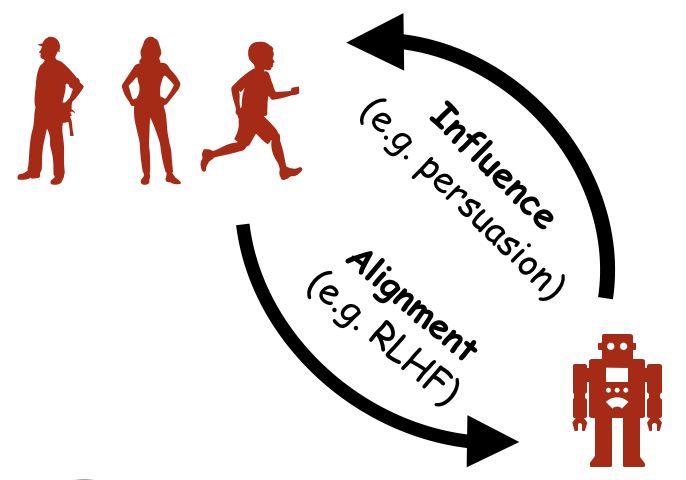

Humans are the ultimate cooperators. We coordinate on a scale and scope no other species (nor AI) can match. What makes this possible? 🧵

www.pnas.org/doi/10.1073/...

Our new paper shows that AI modeling others’ minds as Python code 💻 can quickly and accurately predict human behavior!

shorturl.at/siUYI

(📷 xkcd)

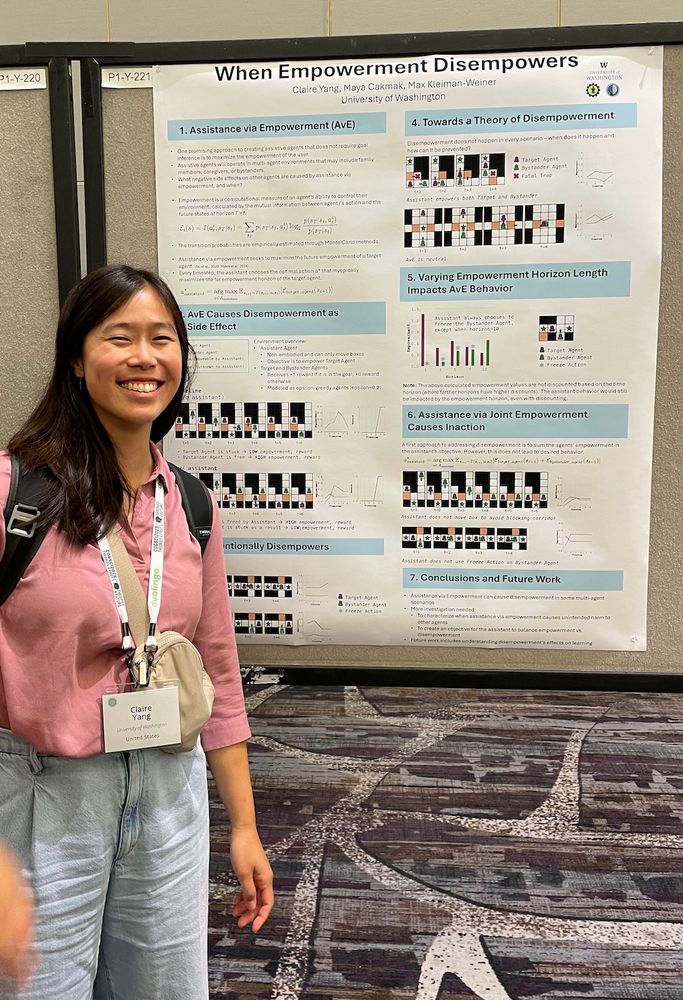

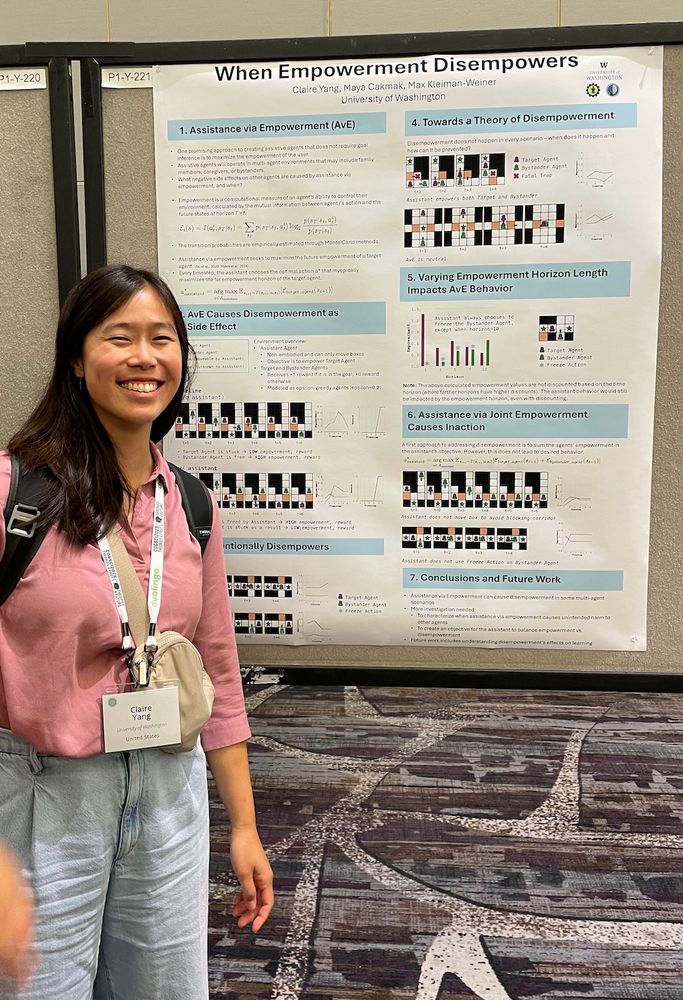

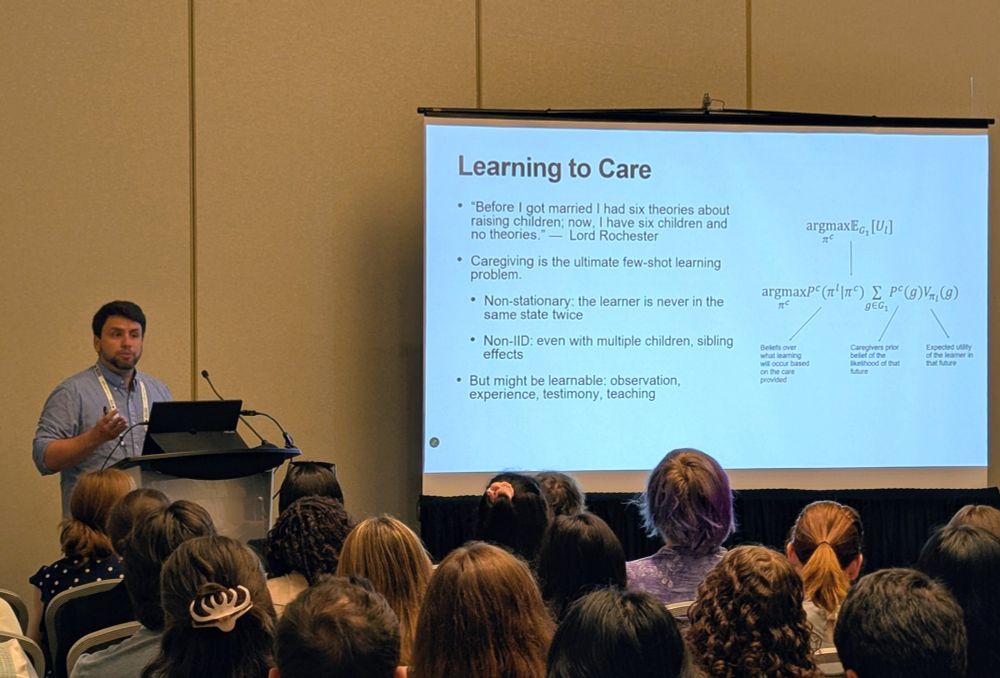

"Before I got married I had six theories about raising children; now I have six kids and no theories"... but here's another theory #cogsci2025

Still deciding which to pick? If you are interested in building computational models of social cognition, I hope you consider joining @maxkw.bsky.social, @dae.bsky.social, and me for a crash course on memo!

Building computational models of social cognition in memo

🗓️ Wednesday, July 30

📍 Pacifica I - 8:30-10:00

🗣️ Kartik Chandra, Sean Dae Houlihan, and Max Kleiman-Weiner

🧑‍💻 underline.io/events/489/s...

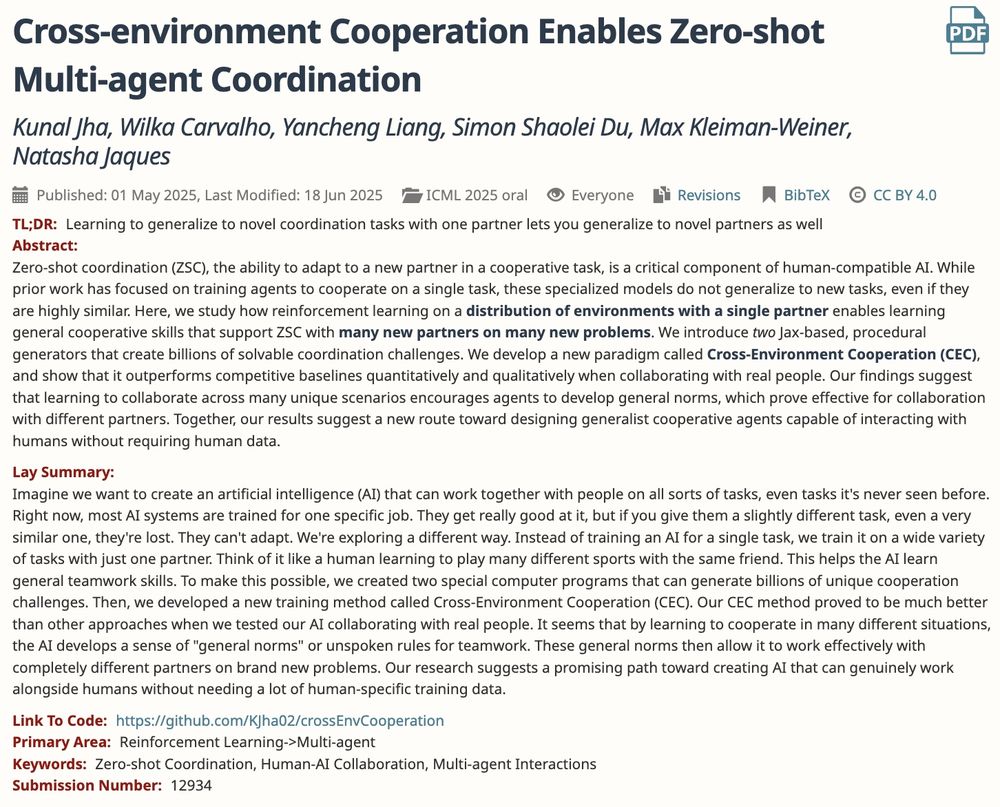

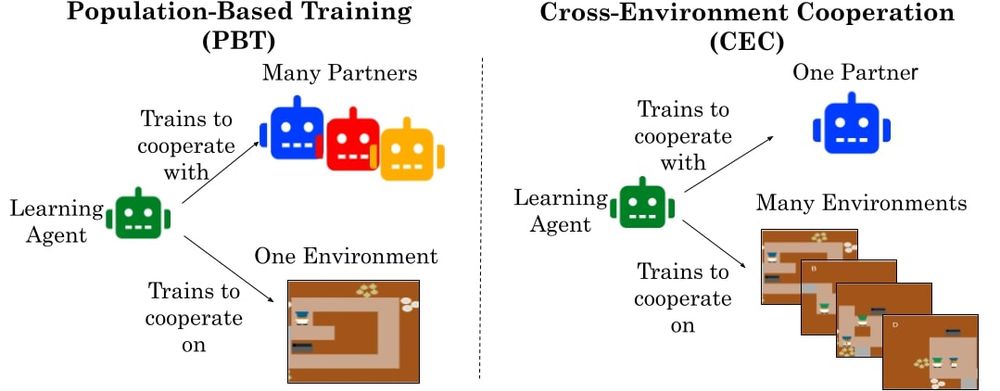

@kjha02.bsky.social · Wilka Carvalho · Yancheng Liang · Simon Du · @maxkw.bsky.social · @natashajaques.bsky.social

doi.org/10.48550/arX...

(3/20)

sites.google.com/view/2nd-evo...

I enjoyed organizing this workshop with Olivia Chu and Alex McAvoy.

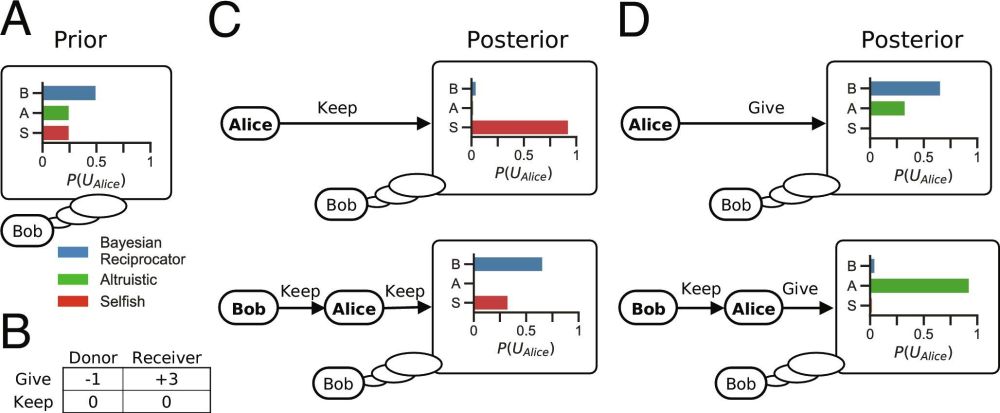

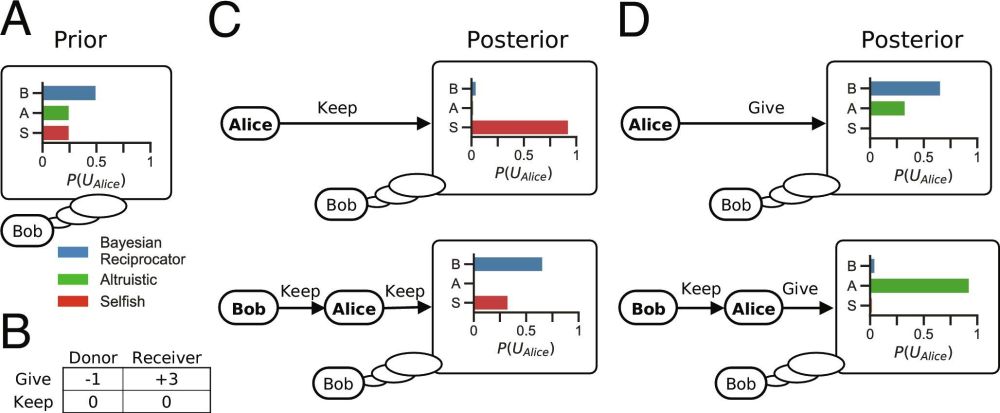

"Inference from social evaluation" with Zach Davis, Kelsey Allen, @maxkw.bsky.social, and @julianje.bsky.social

📃 (paper): psycnet.apa.org/record/2026-...

📜 (preprint): osf.io/preprints/ps...

Agents trained in self-play across many environments learn cooperative norms that transfer to humans on novel tasks.

shorturl.at/fqsNN

We explain why humans and successful AI planners both fail on a certain kind of problem, one we might describe as requiring insight or creativity.

Check out our preprint "Language Model Alignment in Multilingual Trolley Problems" at arxiv.org/pdf/2407.02273!

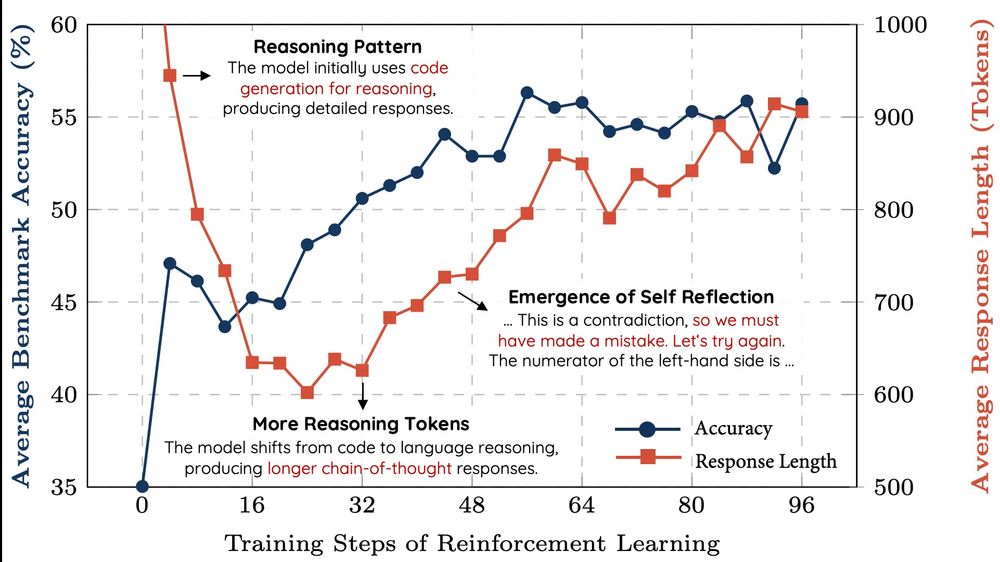

hkust-nlp.notion.site/simplerl-rea...

In "Inference from social evaluation", we explore how people use social evaluations, such as judgments of blame or praise, to figure out what happened.

📜 osf.io/preprints/ps...

📎 github.com/cicl-stanfor...

1/6