http://faculty.washington.edu/maxkw/

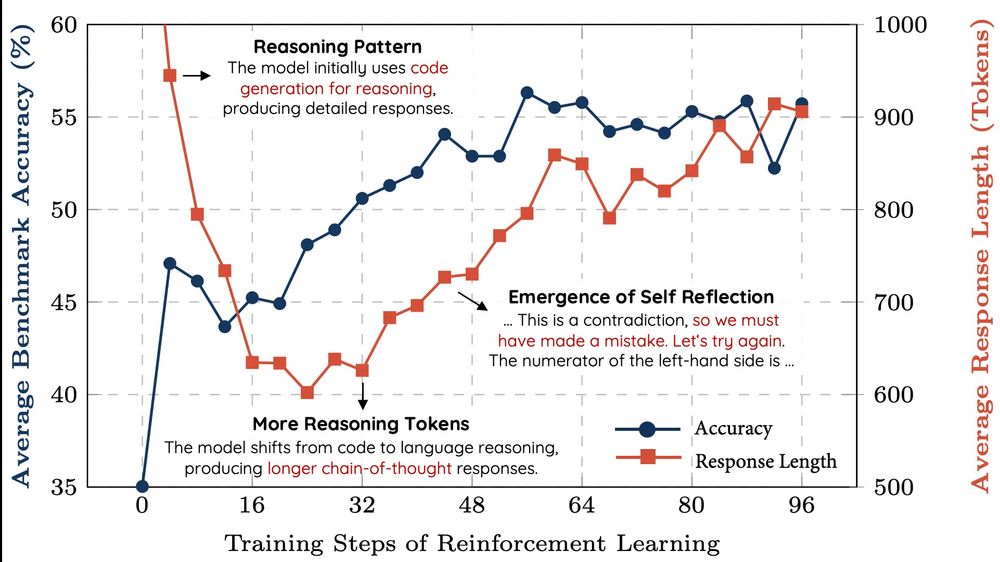

hkust-nlp.notion.site/simplerl-rea...

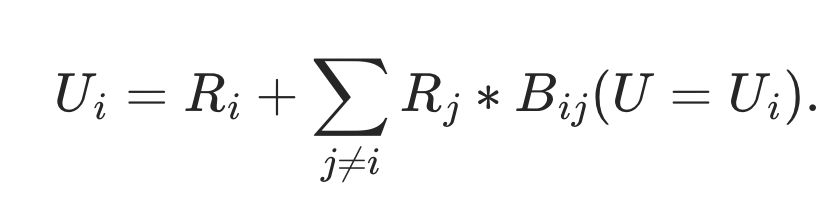

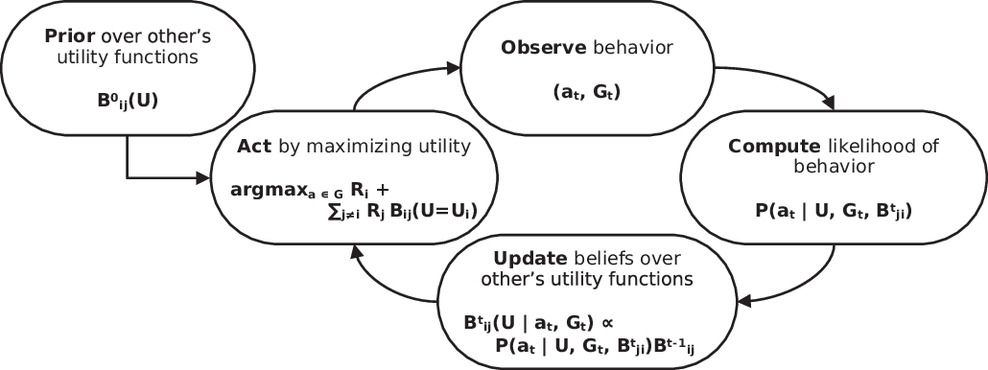

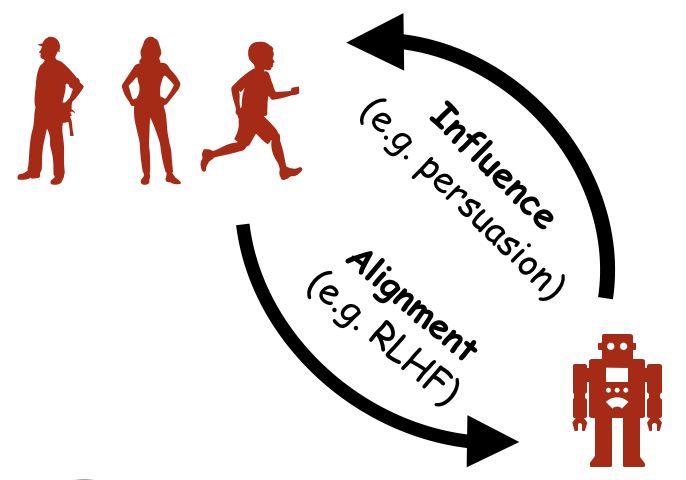

Zhijing Jin and Giorgio Piatti and collaborators Sydney Levine, Jiarui Liu, Fernando Gonzalez, Francesco Ortu, András Strausz, @mrinmaya.bsky.social, Rada Mihalcea, Yejin Choi, Bernhard Schölkopf

In 2007, Sebastian Seung gave the presidential lecture at SFN, mysteriously titled “The Once and Future Science of Neural Networks.”