Research Fellow @imperialcollegeldn.bsky.social and @imperial-ix.bsky.social

Funded by @schmidtsciences.bsky.social

I can support your application @imperialcollegeldn.bsky.social

✅ Check your eligibility (below)

✅ Contact me (DM or email)

UK nationals: www.imperial.ac.uk/life-science...

Otherwise: www.imperial.ac.uk/study/fees-a...

@rdgao.bsky.social draws a nice distinction between the two.

And introduces Gao's second law:

“Any state-of-the-art algorithm for analyzing brain signals is, for some time, how the brain works.”

Part 1: www.rdgao.com/blog/2024/01...

Keep an eye out for our next collaborative effort

⭐ Explores a fundamental question: how does structure sculpt function in artificial and biological networks?

⭐ Provides new models (pRNNs), tasks (Multimodal mazes) and tools, in a pip-installable package:

github.com/ghoshm/Multi...

🧵9/9

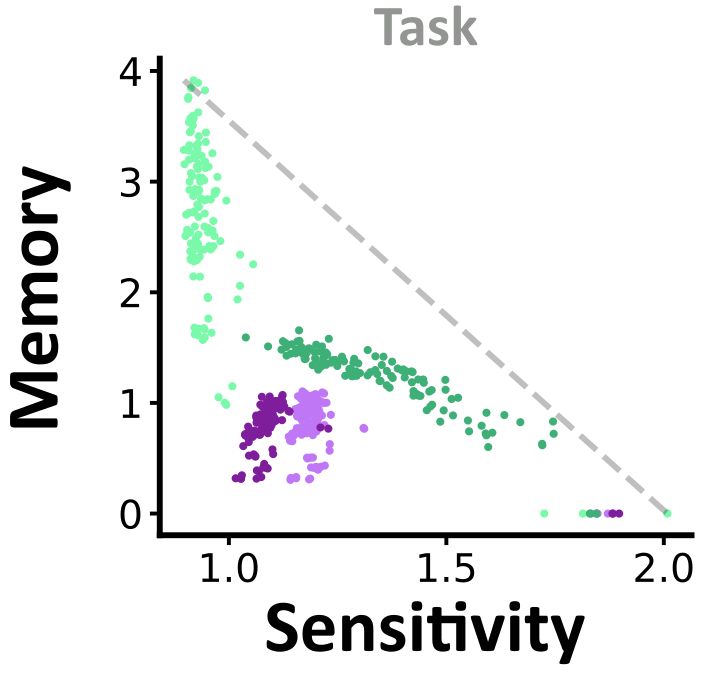

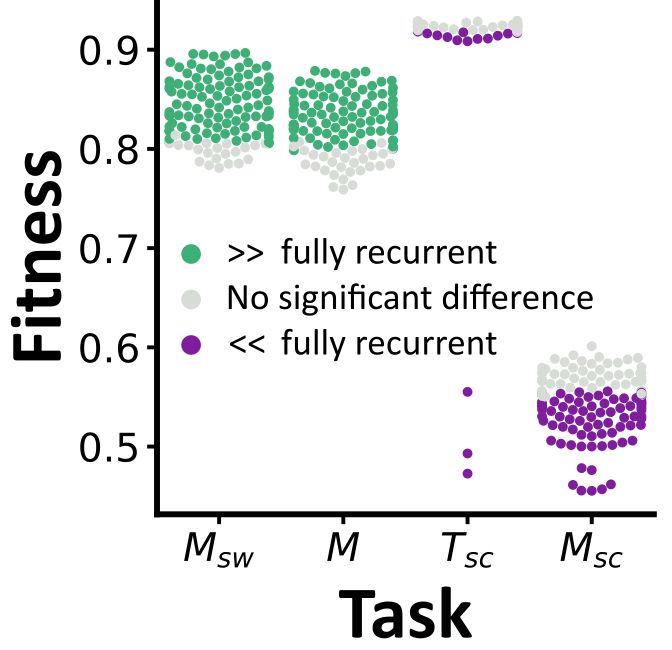

We find that different architectures learn distinct sensitivities and memory dynamics, which shape their function.

E.g. we can predict a network’s robustness to noise from its memory.

🧵8/9

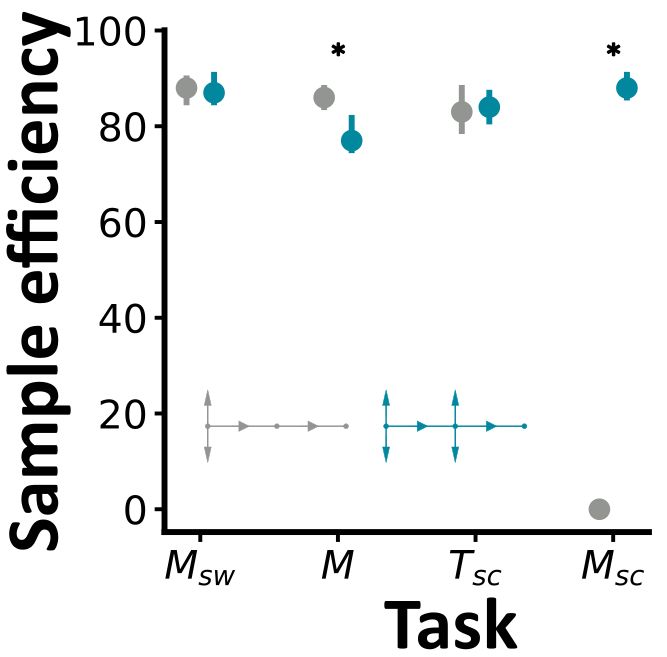

Across pairs, we find that pathways have context-dependent effects.

E.g. here hidden-hidden connections decrease learning speed in one task but accelerate it in another.

🧵7/9

Despite having fewer pathways and as few as ¼ the number of parameters.

This shows that pRNNs are efficient, yet performant.

🧵6/9

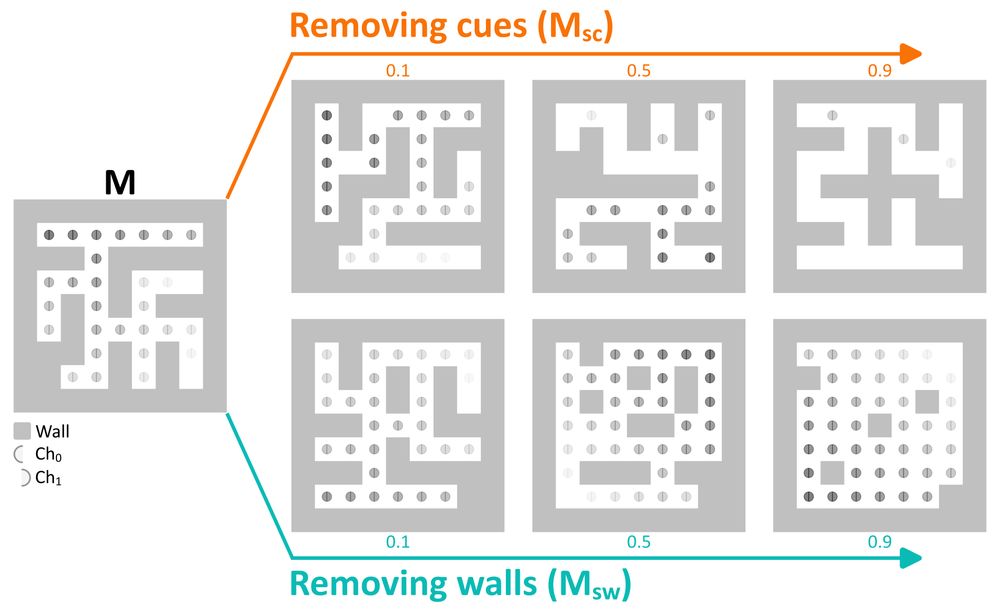

In these tasks, we simulate networks as agents with noisy sensors, which provide local cues about the shortest path through each maze.

We add complexity by removing cues or walls.

🧵4/9

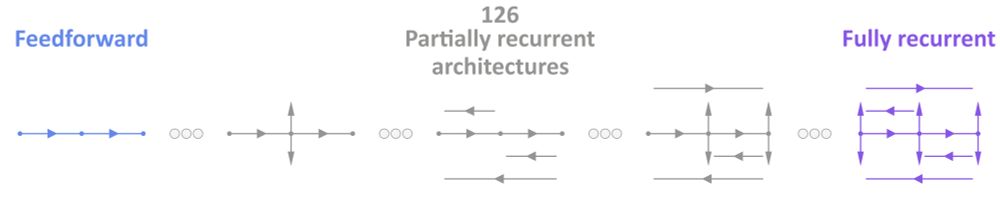

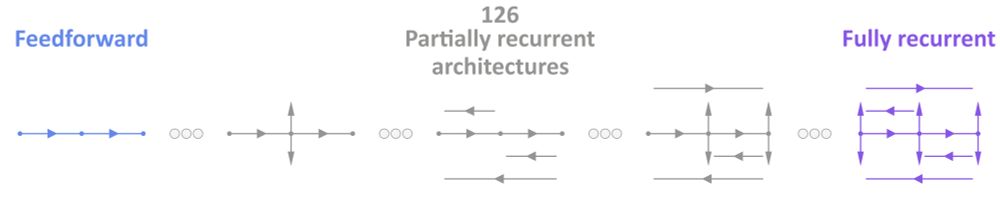

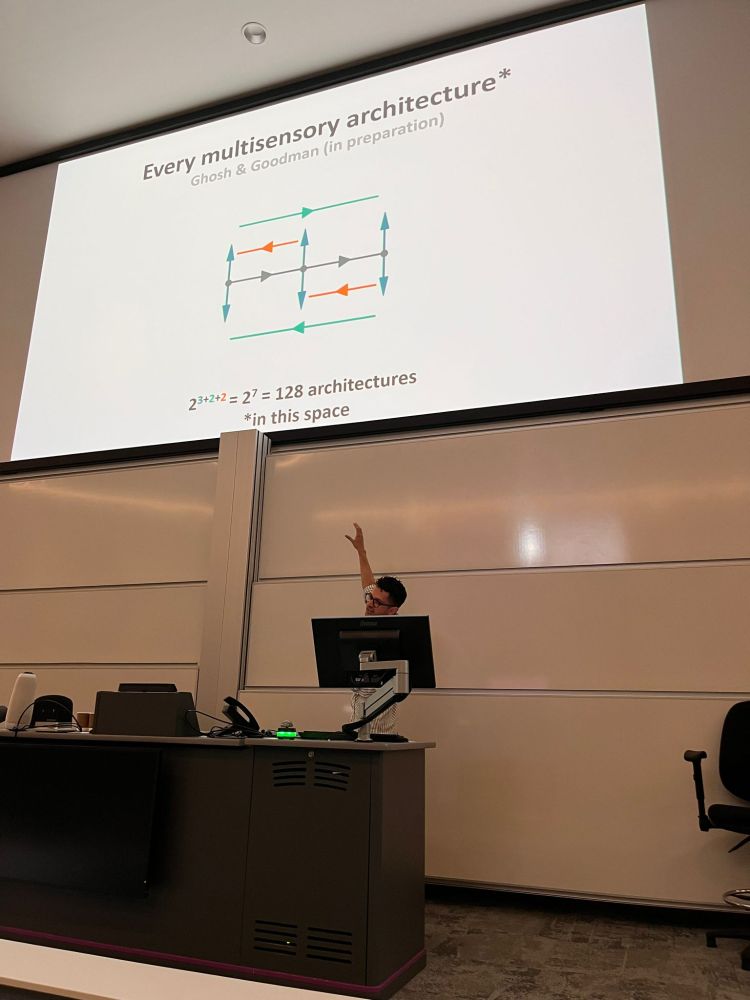

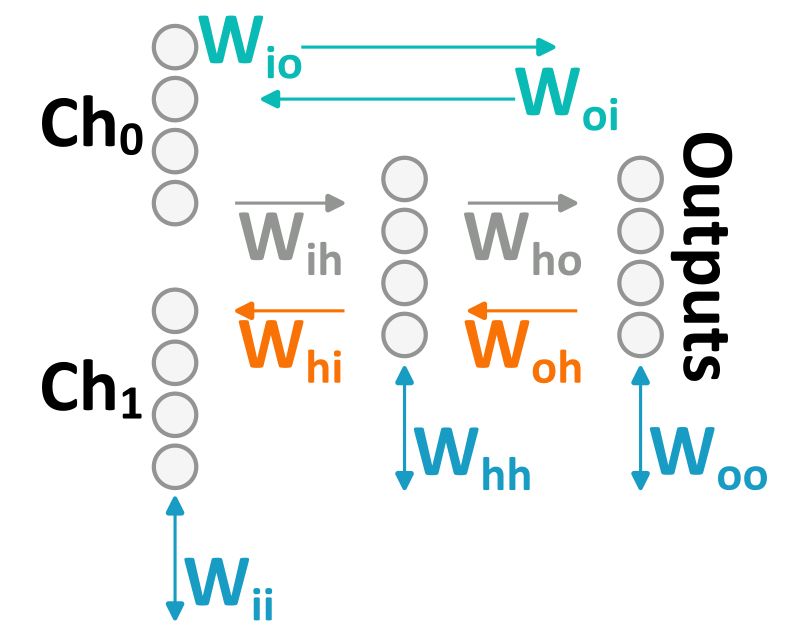

Feedforward - with no additional pathways.

Fully recurrent - with all nine pathways.

We term the 126 architectures between these two extremes *partially recurrent neural networks* (pRNNs), as signal propagation can be bidirectional, yet sparse.

🧵3/9

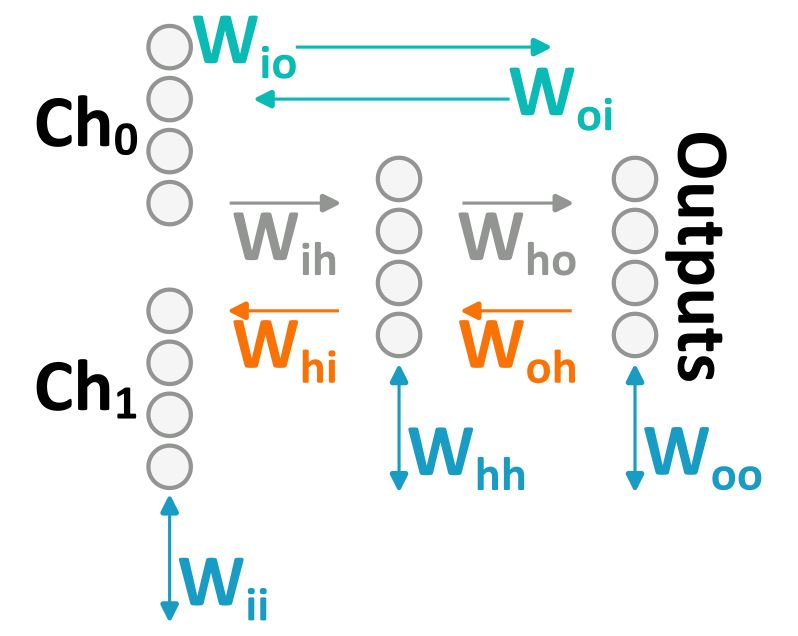

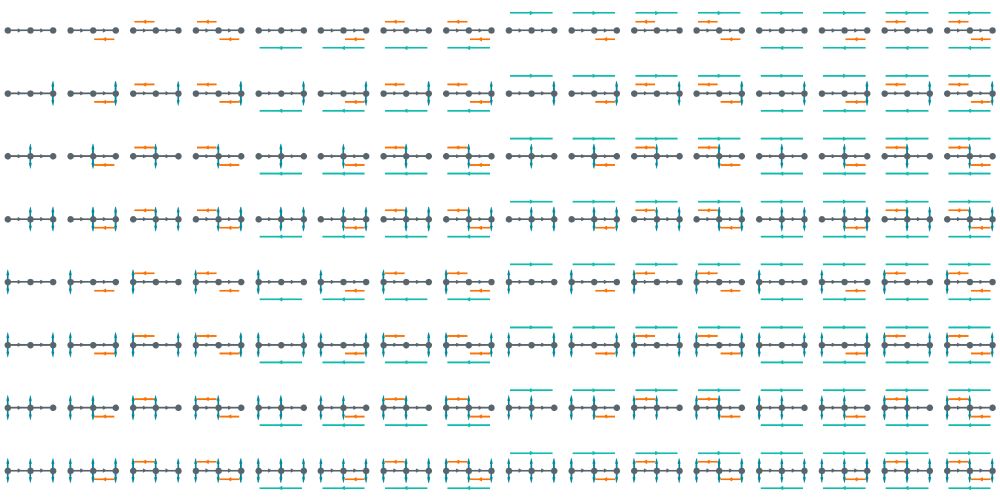

By keeping the two feedforward pathways (W_ih, W_ho) and adding the other 7 in any combination,

we can generate 2^7 distinct architectures.

All 128 are shown in the post above.

🧵2/9
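The counting in this thread (2^7 = 128 architectures, 126 once the feedforward and fully recurrent extremes are dropped) can be checked with a short enumeration. This is a minimal Python sketch: the thread names only W_ih and W_ho, so the labels for the seven optional pathways below are hypothetical placeholders, not the preprint's notation.

```python
from itertools import combinations

# W_ih and W_ho are always kept (named in the thread).
FEEDFORWARD = ("W_ih", "W_ho")
# Hypothetical placeholder names for the seven optional pathways.
OPTIONAL = [f"W_opt{i}" for i in range(1, 8)]


def enumerate_architectures(optional=OPTIONAL):
    """Return every architecture as a tuple of pathway names."""
    archs = []
    # Choose k optional pathways for every k = 0..7, in every combination.
    for k in range(len(optional) + 1):
        for extra in combinations(optional, k):
            archs.append(FEEDFORWARD + extra)
    return archs


archs = enumerate_architectures()
n_total = len(archs)  # 2**7 architectures in total
n_all = len(FEEDFORWARD) + len(OPTIONAL)  # nine pathways when fully recurrent

# Drop the two extremes: feedforward (no extra pathways) and fully recurrent.
prnns = [a for a in archs if len(FEEDFORWARD) < len(a) < n_all]
print(n_total, len(prnns))
```

Each optional pathway is independently present or absent, which is why the total is a power of two.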

@neuralreckoning.bsky.social & I explore this in our new preprint:

doi.org/10.1101/2025...

🤖🧠🧪

🧵1/9

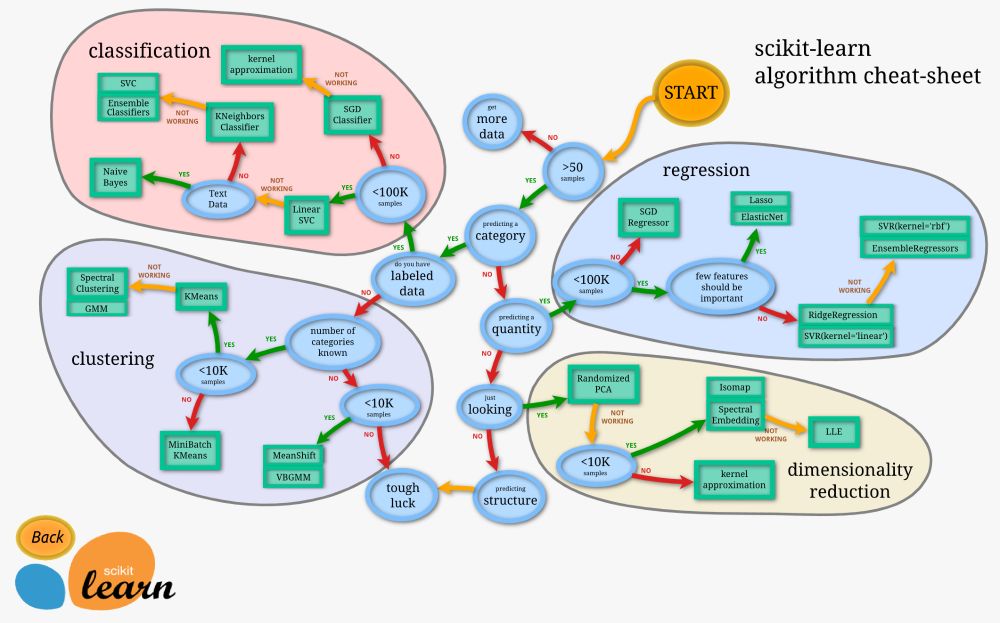

Before diving into research, you need to consider your aim and any data you may have.

This will help you to focus on relevant methods and consider if AI methods will be helpful at all.

@scikit-learn.org provide a great map along these lines!

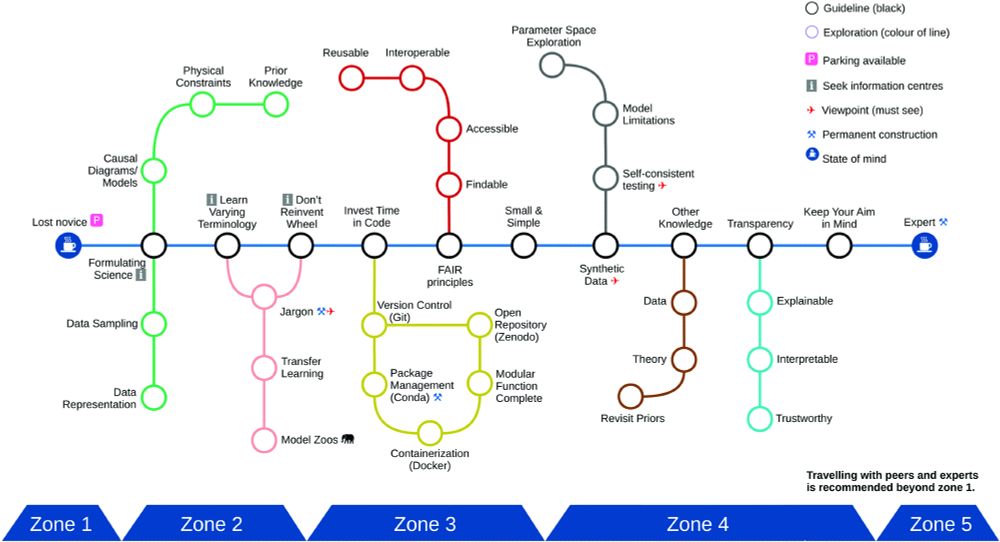

Nine other research fellows from @imperial-ix.bsky.social and I use AI methods in domains from plant biology (🌱) to neuroscience (🧠) and particle physics (🎇).

Together we suggest 10 simple rules @plos.org 🧵

doi.org/10.1371/jour...

And really enjoyed sharing our new work too

I'll be giving a talk on Friday (talk session 9) on multisensory network architectures - new work from me & @neuralreckoning.bsky.social.

But say hello or DM me before then!

Awesome students and super TAs:

@akoumoundourou.bsky.social, @tomnotgeorge.bsky.social, @jsoldadomagraner.bsky.social, @ashvparker.bsky.social, @pollytur.bsky.social, @skuechenhoff.bsky.social

We ran tutorials to show the students how we apply methods from AI to different scientific domains; from particle physics to public health (@ojwatson.bsky.social) and #neuroscience.

In my own work, we found that these perform well, but interpreting the discovered networks (graphs with arbitrary topologies) is very challenging.

🧵9/10

Well, these models:

Fit experimental data (from humans, rats and flies) better than current models. Though, here, this difference is small (< 5%).

Trade off performance (x-axis) against complexity (y-axis).

🧵6/10
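One common way to read a performance/complexity trade-off like this is as a Pareto front: a model is kept only if no other model is at least as good on both axes and strictly better on one. The sketch below assumes higher performance and lower complexity are both preferred; the model names and scores are made up for illustration and are not results from the paper.

```python
from typing import List, Tuple


def pareto_front(models: List[Tuple[str, float, int]]) -> List[str]:
    """Return the names of models not dominated by any other model.

    Each model is (name, performance, complexity). Higher performance and
    lower complexity are both assumed to be better.
    """
    front = []
    for name, perf, cx in models:
        # Another model dominates this one if it is no worse on both axes
        # and strictly better on at least one. A model never dominates itself.
        dominated = any(
            p >= perf and c <= cx and (p > perf or c < cx)
            for _, p, c in models
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical (name, performance, complexity) scores for illustration only.
models = [("A", 0.80, 10), ("B", 0.85, 25), ("C", 0.78, 30), ("D", 0.90, 60)]
print(pareto_front(models))
```

Here model C is dropped because A is both more accurate and simpler; A, B and D each buy extra performance with extra complexity.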

🧵5/10

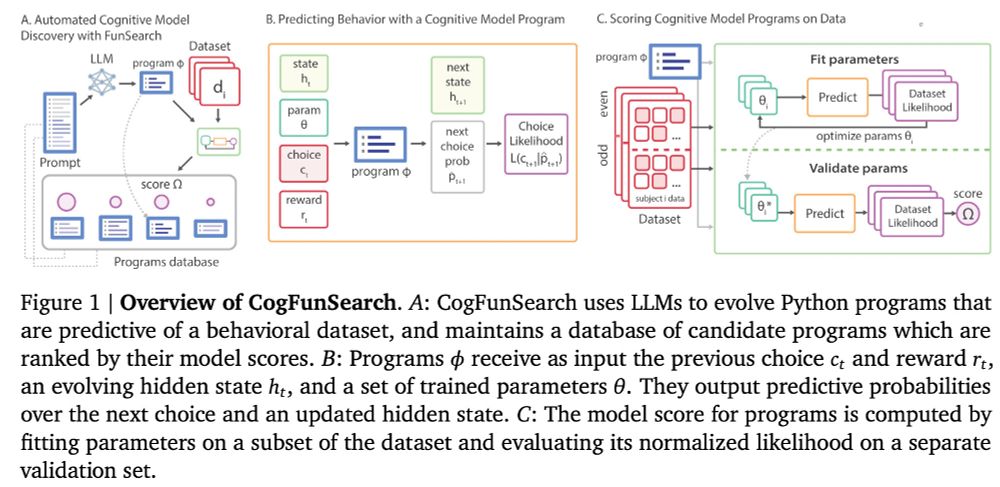

Their algorithm combines an LLM + an evolutionary process + parameter fitting.

So, it (iteratively) tests a set of models, then varies the best ones (by changing their code) to make new ones.

🧵4/10
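The test-then-vary loop described above is, at its core, an elitist evolutionary search. The Python sketch below shows only that outer loop under loudly stated assumptions: a random Gaussian perturbation stands in for the LLM rewriting model code, a made-up quadratic score stands in for the fit to experimental data, and the separate parameter-fitting step is omitted. It is not the authors' implementation.

```python
import random


def fitness(params):
    # Hypothetical stand-in for "fit to experimental data": negative squared
    # distance to a made-up target vector. Higher is better (max is 0).
    target = [0.3, -1.2, 2.0]
    return -sum((p - t) ** 2 for p, t in zip(params, target))


def mutate(params, rng, scale=0.2):
    # Hypothetical stand-in for the LLM editing a model's code:
    # a small Gaussian perturbation of each parameter.
    return [p + rng.gauss(0.0, scale) for p in params]


def evolve(n_generations=50, pop_size=20, n_keep=5, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-3, 3) for _ in range(3)] for _ in range(pop_size)]
    history = []
    for _ in range(n_generations):
        # 1. Test: score every candidate and rank them, best first.
        population.sort(key=fitness, reverse=True)
        elites = population[:n_keep]
        history.append(fitness(elites[0]))
        # 2. Vary: make new candidates from the best ones; keep the elites
        #    unchanged so the best score never gets worse.
        children = [mutate(rng.choice(elites), rng) for _ in range(pop_size - n_keep)]
        population = elites + children
    population.sort(key=fitness, reverse=True)
    return population[0], history


best, history = evolve()
print(round(fitness(best), 4))
```

Keeping the elites unchanged between generations is a design choice that makes the best score monotonically non-decreasing; without it, a lucky candidate could be lost to a bad round of edits.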

This great paper focusses on "empirical design in reinforcement learning", but the ideas are generally applicable!

Here are some of their suggestions with a running example:

arxiv.org/abs/2304.01315

We consider how animals should combine multisensory signals in the naturalistic case where:

✅ Signals are sparse.

✅ Signals arrive in bursts.

✅ Sensory channels are correlated.

To do so, we compare several models (🧠):

📆Applications open until 15.01.

🧠🧪

trendinafrica.org/trend-camina/

Though, you can read the full transcript here:

cyberneticzoo.com/wp-content/u...
