Marcus Ghosh

@marcusghosh.bsky.social

Computational neuroscientist.

Research Fellow @imperialcollegeldn.bsky.social and @imperial-ix.bsky.social

Funded by @schmidtsciences.bsky.social

For physical systems yes (from hydraulics to computers)!

But, I don't remember seeing an algorithm-centric version?

September 29, 2025 at 12:21 PM

Congratulations!

Exciting to see an inversion of NeuroAI too

September 24, 2025 at 6:26 AM

Really neat!

It could be interesting to explore heterogeneous neuromodulation?

In this case, having multiple modulator networks which act at different timescales and exert different effects on the downstream SNN?

This would be a bit closer to neuromodulators in vivo.

September 19, 2025 at 8:57 AM

Congratulations!

September 17, 2025 at 6:31 PM

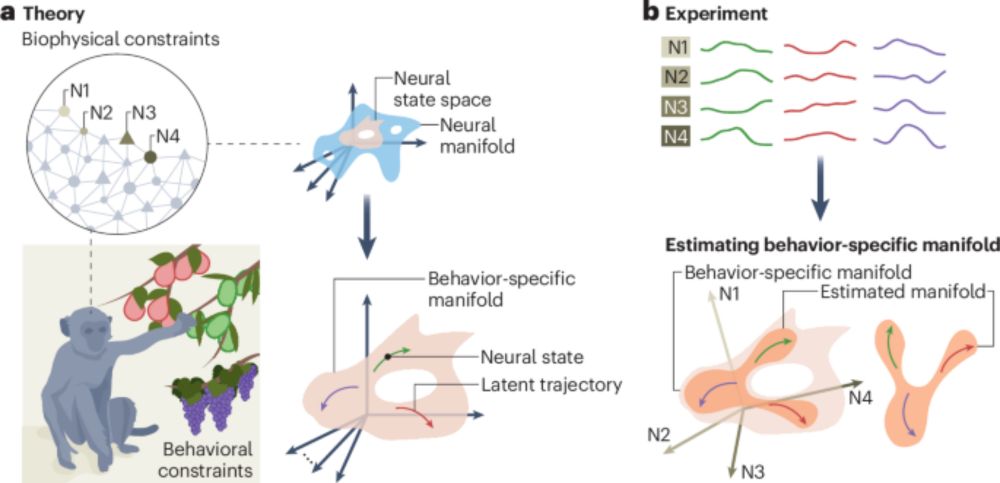

What I was trying to highlight is that it is possible to observe low dimensional structure in population activity which is unrelated to the computation / task.

And it's not obvious that this problem vanishes in more complex circuits?

September 11, 2025 at 8:10 AM

I think by adding a mixture of noise, delays etc, we may not end up at d=1.

But even if we did, many studies would describe these "population dynamics" as a line attractor.

Rather than just a single neuron acting on its own?

September 10, 2025 at 3:39 PM

It's good to see that there are causal studies.

But, these seem to be the exception?

And perhaps the language used in the non-causal studies can be a bit misleading?

For instance, the post that launched this thread claimed that "the brain uses distributed coding" (based solely on recordings).

September 10, 2025 at 3:17 PM

What I was trying to highlight is that it is possible to observe low dimensional structure in population activity which is unrelated to the computation / task.

And it's not obvious that this problem vanishes in more complex circuits?

September 10, 2025 at 3:17 PM

The toy circuit is very simple. But I'd be happy to say it computes?

For example, given LIF dynamics it could perform temporal coincidence detection (only outputting spikes in response to inputs close together in time).

September 10, 2025 at 3:17 PM
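
To make the coincidence detection idea concrete, here is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron. All parameter values (`tau`, `weight`, `threshold`, `dt`) are assumptions chosen for illustration, not values from the thread: each input spike alone is sub-threshold, so the neuron should only fire when two inputs arrive before the membrane potential has leaked away.

```python
import numpy as np

def lif_spike_times(input_times, tau=5.0, weight=0.6, threshold=1.0,
                    dt=0.1, t_max=100.0):
    """Simulate one LIF neuron and return its output spike times (ms).

    All parameters are illustrative assumptions.
    """
    n_steps = int(round(t_max / dt))
    drive = np.zeros(n_steps)
    for t in input_times:
        # Each input spike is modelled as an instantaneous kick to the voltage.
        drive[int(round(t / dt))] += weight

    v, spikes = 0.0, []
    for i in range(n_steps):
        v += dt * (-v / tau) + drive[i]  # leak, then add any input arriving now
        if v >= threshold:
            spikes.append(i * dt)
            v = 0.0  # reset after an output spike
    return spikes

near = lif_spike_times([20.0, 21.0])  # two inputs 1 ms apart
far = lif_spike_times([20.0, 60.0])   # two inputs 40 ms apart
print("near inputs ->", near)
print("far inputs  ->", far)
```

With these assumed values, the closely spaced pair should push the neuron over threshold while the widely spaced pair should not.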

I think by adding a mixture of noise, delays etc, we may not end up at d=1.

But even if we did, many studies would describe these "population dynamics" as a line attractor.

Rather than just a single neuron acting on its own.

September 8, 2025 at 3:12 PM

Causal experiments could help to untangle this.

But, it seems like this remains underexplored?

Keen to hear your thoughts @mattperich.bsky.social, @juangallego.bsky.social and others!

www.nature.com/articles/s41...

🧵5/5

A neural manifold view of the brain - Nature Neuroscience

Recent advances in neuroscience have revealed how neural population activity underlying behavior can be well described by topological objects called neural manifolds. Understanding how nature, nurture...

www.nature.com

September 8, 2025 at 1:44 PM

However,

In this case, from the anatomy (circuit diagram above), we know that only neuron_1 is involved in the computation (transforming the input to the output).

And the manifold we observe is misleading.

🧵4/5

September 8, 2025 at 1:44 PM

A common approach to analysing this data would be to apply PCA (or another dimensionality reduction technique).

This yields a matrix of population activity (d x time), where d < the number of neurons.

A common interpretation of this would be that "the brain uses a low dimensional manifold to link this input-output".

🧵3/5

September 8, 2025 at 1:44 PM
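
A rough sketch of this analysis on the toy circuit from 2/5 of the thread. Everything here is an assumption for illustration: the input is white noise, neuron_1 is a smoothed copy of it, and neurons 2 to n are modelled as scaled copies of neuron_1 plus a little private noise. PCA is done with a plain SVD of the mean-centred (neurons x time) matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
n_neurons, n_time = 50, 1000  # assumed sizes

# Assumed input and neuron_1 response (a smoothed copy of the input).
stimulus = rng.standard_normal(n_time)
kernel = np.exp(-np.arange(20) / 5.0)
neuron_1 = np.convolve(stimulus, kernel)[:n_time]

# Assumed wiring: neurons 2..n copy neuron_1 with random gains and small noise.
gains = rng.uniform(0.5, 1.5, size=n_neurons - 1)
followers = (gains[:, None] * neuron_1[None, :]
             + 0.05 * rng.standard_normal((n_neurons - 1, n_time)))

# In this sketch the output is read from neuron_1 alone.
output = neuron_1.copy()

activity = np.vstack([neuron_1, followers])  # neurons x time

# PCA via SVD of the mean-centred data.
centred = activity - activity.mean(axis=1, keepdims=True)
_, s, _ = np.linalg.svd(centred, full_matrices=False)
explained = s**2 / np.sum(s**2)

# Number of components needed to explain 95% of the variance.
d = int(np.searchsorted(np.cumsum(explained), 0.95) + 1)
print("variance explained by PC1:", round(float(explained[0]), 4))
print("d for 95% variance:", d)
```

Under these assumptions the population looks low dimensional (d much smaller than n), even though only neuron_1 sits on the path from input to output.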

If we start from this circuit:

Input -> neuron_1 -> output

↓

⋮

↓

neuron_n

And record neurons 1 to n simultaneously (where n could be very large).

We can obtain a matrix of neural activity (neurons x time).

🧵2/5

September 8, 2025 at 1:44 PM

Okay, let's say we make the circuit above more realistic: add neurons, separate them into areas, consider multiple tasks etc.

The problem outlined above still persists?

If a method doesn't work in a simple system (e.g. the one above), there is no reason to think it will in a more complex one?

September 8, 2025 at 8:48 AM

But what does the lag tell you about the system?

Taking the circuit above as an example.

September 4, 2025 at 7:54 PM

There may be a difference in the lag between the input signal and neurons 1 and 2.

But this difference could be too small to be detectable?

And without causal experiments the conclusion could be something like "different neurons represent the stimulus at different timescales"?

🧵 (3/3)

September 4, 2025 at 6:15 PM
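
One way to picture the lag measurement is a cross-correlation between the input and each neuron. The sketch below is hypothetical throughout: the delays (2 and 3 samples), the noise level, and the assumption that neuron_2 sits downstream of neuron_1 are all chosen for illustration. `estimate_lag` and `delay` are helper names introduced here, not from any library.

```python
import numpy as np

def delay(x, k):
    """Return x shifted later in time by k samples (zero-padded at the start)."""
    return np.concatenate([np.zeros(k), x[: len(x) - k]])

def estimate_lag(x, y, max_lag=20):
    """Lag (in samples) at which y best matches a delayed copy of x."""
    lags = np.arange(0, max_lag + 1)
    scores = [np.dot(x[: len(x) - k], y[k:]) for k in lags]
    return int(lags[int(np.argmax(scores))])

rng = np.random.default_rng(1)
stimulus = rng.standard_normal(5000)

# Assumed delays: neuron_1 lags the input by 2 samples, neuron_2 by one more.
neuron_1 = delay(stimulus, 2) + 0.5 * rng.standard_normal(5000)
neuron_2 = delay(neuron_1, 1) + 0.5 * rng.standard_normal(5000)

lag_1 = estimate_lag(stimulus, neuron_1)
lag_2 = estimate_lag(stimulus, neuron_2)
print("lag to neuron_1:", lag_1, "| lag to neuron_2:", lag_2)
```

Here the difference is a full sample, so it is recoverable. A difference smaller than the sampling interval would not be resolved by this estimator, and the lags alone say nothing about which neuron the output actually depends on.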

Input -> neuron_1 -> output

↓

neuron_2

In this "circuit":

* We could decode the input from either neuron

* But the circuit is not "using" neuron_2 in the computation (transforming the input to output).

* And ablations would make this clear?

🧵 (2/3)

September 4, 2025 at 6:15 PM
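
The three points above can be sketched in a few lines. This is a toy model under stated assumptions: neuron_1 applies a `tanh` to the input, neuron_2 is a scaled copy of neuron_1 (the diagram does not pin down exactly where the side branch comes from), and the output reads from neuron_1 only. `run_circuit` is a hypothetical helper, and "decoding" is approximated by a simple correlation with the input.

```python
import numpy as np

rng = np.random.default_rng(2)
stimulus = rng.standard_normal(2000)

def run_circuit(stimulus, ablate=None):
    """Toy circuit: input -> neuron_1 -> output, with neuron_2 as a side branch.

    ablate can be None, "neuron_1" or "neuron_2" (silences that neuron).
    """
    if ablate == "neuron_1":
        neuron_1 = np.zeros_like(stimulus)
    else:
        neuron_1 = np.tanh(stimulus)       # assumed input-output function
    if ablate == "neuron_2":
        neuron_2 = np.zeros_like(stimulus)
    else:
        neuron_2 = 0.8 * neuron_1          # assumed: side branch copies neuron_1
    output = 2.0 * neuron_1                # output depends on neuron_1 only
    return neuron_1, neuron_2, output

# Recording only: the input can be "decoded" from either neuron.
n1, n2, out_intact = run_circuit(stimulus)
corr_1 = np.corrcoef(stimulus, n1)[0, 1]
corr_2 = np.corrcoef(stimulus, n2)[0, 1]
print("input correlation with neuron_1:", round(float(corr_1), 3))
print("input correlation with neuron_2:", round(float(corr_2), 3))

# Ablations: only silencing neuron_1 changes the output.
_, _, out_no_n2 = run_circuit(stimulus, ablate="neuron_2")
_, _, out_no_n1 = run_circuit(stimulus, ablate="neuron_1")
print("output unchanged without neuron_2:", np.allclose(out_intact, out_no_n2))
print("output unchanged without neuron_1:", np.allclose(out_intact, out_no_n1))
```

In this sketch the recordings cannot separate the two neurons, while the ablations can.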

I agree that perturbations have their challenges (see doi.org/10.1371/jour... from @kayson.bsky.social for a better approach).

But without them you just have correlations?

Just to give a toy example:

🧵 (1/3)

September 4, 2025 at 6:15 PM
