Maarten van Smeden

@maartenvsmeden.bsky.social

statistician • associate prof • team lead health data science and head methods research program at julius center • director ai methods lab, umc utrecht, netherlands • views and opinions my own

Kind reminder: data-driven variable selection (e.g. forward/stepwise/univariable screening) makes things *worse* for most analytical goals

October 8, 2025 at 1:38 PM

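A minimal simulation sketch of one well-known problem with univariable screening, under purely hypothetical settings: a single weak predictor with true slope 0.1, n = 50, 2000 replicates, and a normal-approximation cutoff |t| > 1.96 standing in for a formal test. Averaged over all replicates the least-squares slope is close to the truth; averaged over only the replicates in which the predictor would have been "selected", it is markedly inflated (the winner's curse). The function name `simulate_screening` and all parameter values are illustrative assumptions.

```python
import random
import statistics


def simulate_screening(true_beta=0.1, n=50, reps=2000, z_crit=1.96, seed=2025):
    """Hypothetical simulation of univariable screening for one weak predictor.

    Each replicate draws x ~ N(0, 1) and y = true_beta * x + N(0, 1), fits a
    simple least-squares slope, and counts the predictor as 'selected' when
    |slope / SE| > z_crit (normal approximation to the usual t-test).
    Returns (mean slope over all replicates, mean slope over selected
    replicates, number of selected replicates).
    """
    rng = random.Random(seed)
    all_slopes, selected_slopes = [], []
    for _ in range(reps):
        x = [rng.gauss(0, 1) for _ in range(n)]
        y = [true_beta * xi + rng.gauss(0, 1) for xi in x]
        mx, my = statistics.fmean(x), statistics.fmean(y)
        sxx = sum((xi - mx) ** 2 for xi in x)
        slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
        intercept = my - slope * mx
        # Residual variance on n - 2 degrees of freedom gives the slope's SE
        rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
        se = (rss / (n - 2) / sxx) ** 0.5
        all_slopes.append(slope)
        if abs(slope / se) > z_crit:
            selected_slopes.append(slope)
    return (statistics.fmean(all_slopes),
            statistics.fmean(selected_slopes),
            len(selected_slopes))


mean_all, mean_selected, n_selected = simulate_screening()
print(f"mean slope, all replicates:   {mean_all:.3f}")
print(f"mean slope, 'selected' only:  {mean_selected:.3f} ({n_selected} replicates)")
```

Raising `true_beta` or `n` makes nearly every replicate pass the screen, and the gap between the two averages shrinks accordingly.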

NEW FULLY FUNDED PHD POSITION

Looking for a motivated PhD candidate to join our team. Together with Danya Muilwijk, Jeffrey Beekman and me, you will explore opportunities and limitations of AI in the context of organoids

For more info and for applying 👉

www.careersatumcutrecht.com/vacancies/sc...

Vacancy — PhD position on AI methodology for prediction of patient outcomes using organoid models

Are you passionate about bringing personalized medicine to the next level and make real impact in healthcare? Join our team and develop novel AI methodology to improve predictions of relevant patient ...

www.careersatumcutrecht.com

September 25, 2025 at 10:57 AM

Reposted by Maarten van Smeden

Interpretable "AI" is just a distraction from safe and useful "AI"

September 22, 2024 at 7:31 PM

No.

5. You should use a precision-recall curve for a binary classifier, not an ROC curve

August 19, 2025 at 3:22 PM

I wonder who those people are who come here dying to know what GenAI has done with some prompt you put in

August 13, 2025 at 9:21 AM

If you think AI is cool, wait until you learn about regression analysis

August 12, 2025 at 11:44 AM

NEW PREPRINT

Explainable AI refers to an extremely popular group of approaches that aim to open "black box" AI models. But what can we see when we open the black AI box? We use Galit Shmueli's framework (to describe, predict or explain) to evaluate

arxiv.org/abs/2508.05753

August 11, 2025 at 6:53 AM

The healthcare literature is filled with "risk factors". This word combination makes research findings sound important by implying causality, while avoiding direct claims of having identified causal associations that are easily critiqued.

July 31, 2025 at 8:32 AM

When forced to make a choice, my choice will be logistic regression model over linear probability model 103% of the time

July 23, 2025 at 8:43 PM

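One commonly cited reason for this preference: a linear probability model fits a 0/1 outcome by ordinary least squares, so its fitted "probabilities" are not constrained to lie between 0 and 1, whereas logistic regression passes the linear predictor through the logistic function. A minimal stdlib sketch on made-up toy data; the data and the helper names `fit_linear_probability_model` and `fit_logistic_regression` are hypothetical, and the logistic fit is plain Newton-Raphson with a fixed number of iterations. For these toy data the linear probability model gives a fitted value slightly below 0 at x = 0 and slightly above 1 at x = 9, while the logistic fit stays inside (0, 1).

```python
import math

# Hypothetical toy data: a 0/1 outcome observed at x = 0, 1, ..., 9
x = list(range(10))
y = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]


def fit_linear_probability_model(x, y):
    """Linear probability model: ordinary least squares of the 0/1 outcome on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - slope * mx, slope


def fit_logistic_regression(x, y, iterations=25):
    """Logistic regression (intercept + slope) by Newton-Raphson maximum likelihood.

    Runs a fixed number of iterations without a convergence check; adequate for
    this small toy data set, whose outcomes are not perfectly separated by x.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        p = [1 / (1 + math.exp(-(b0 + b1 * xi))) for xi in x]
        # Score (gradient of the log-likelihood)
        g0 = sum(yi - pi for yi, pi in zip(y, p))
        g1 = sum((yi - pi) * xi for xi, yi, pi in zip(x, y, p))
        # Information matrix [[h00, h01], [h01, h11]] (negative Hessian)
        w = [pi * (1 - pi) for pi in p]
        h00 = sum(w)
        h01 = sum(wi * xi for wi, xi in zip(w, x))
        h11 = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h00 * h11 - h01 * h01
        # Newton step: add (information matrix)^-1 times score, via the 2x2 inverse
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


lpm_b0, lpm_b1 = fit_linear_probability_model(x, y)
log_b0, log_b1 = fit_logistic_regression(x, y)
for xi in (0, 9):
    lpm_risk = lpm_b0 + lpm_b1 * xi
    logistic_risk = 1 / (1 + math.exp(-(log_b0 + log_b1 * xi)))
    print(f"x = {xi}: linear probability model {lpm_risk:.3f}, logistic {logistic_risk:.3f}")
```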

Reposted by Maarten van Smeden

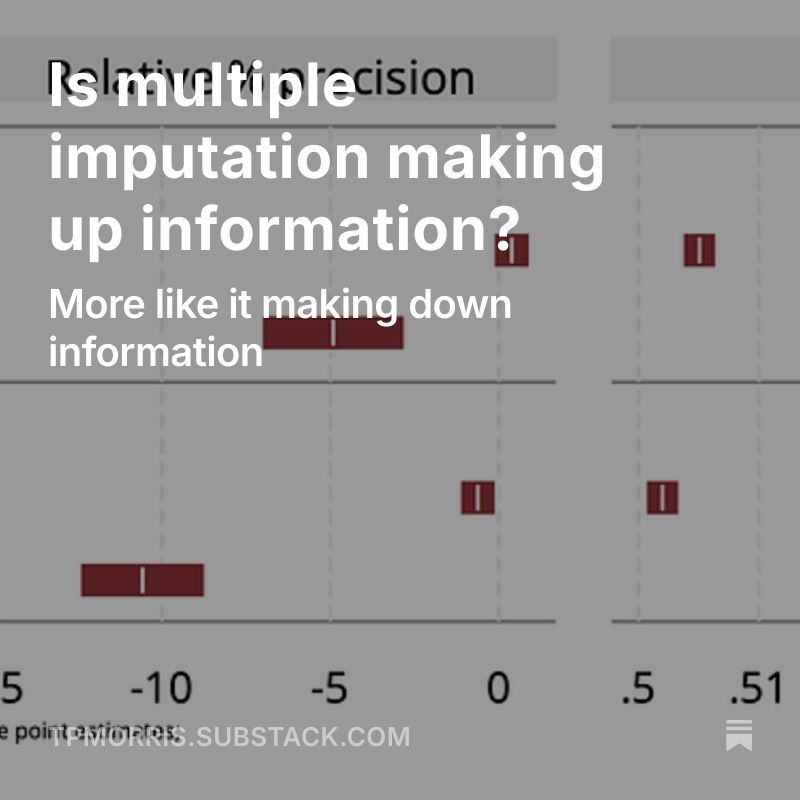

Post just up: Is multiple imputation making up information?

tldr: no.

Includes a cheeky simulation study to demonstrate the point.

open.substack.com/pub/tpmorris...

July 23, 2025 at 3:29 PM

Reposted by Maarten van Smeden

You can have all the omni-omics data in the world and the bestest algorithms, but eventually a predicted probability is produced & it should be evaluated using well-established methods, and correctly implemented in the context of medical decision making.

statsepi.substack.com/i/140315566/...

July 14, 2025 at 9:49 AM

Depending on which methods guru you ask, every analytical task is “essentially” a missing data problem, a causal inference problem, a Bayesian problem, a regression problem or a machine learning problem

July 10, 2025 at 3:05 PM

Reposted by Maarten van Smeden

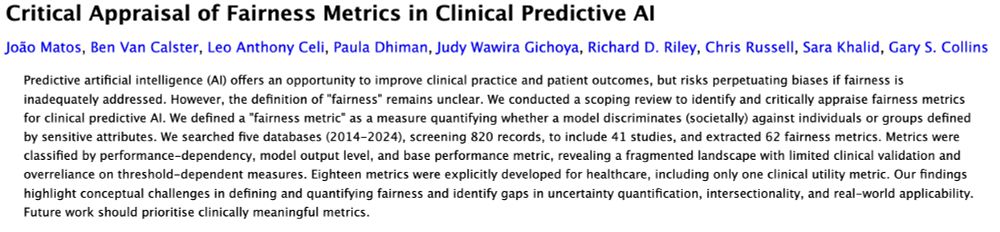

* New preprint led by Joao Matos & @gscollins.bsky.social

"Critical Appraisal of Fairness Metrics in Clinical Predictive AI"

- Important, rapidly growing area

- But confusion exists

- 62 fairness metrics identified so far

- Better standards & metrics needed for healthcare

arxiv.org/abs/2506.17035

June 27, 2025 at 6:57 AM

Surprisingly common thing: comparisons of prediction models developed using, say, Logistic Regression, Random Forest and XGBoost, concluding that XGBoost is "good" because it yields a slightly higher AUC than LR or RF on the same data

The fact that "better" doesn't always mean "good" seems lost

June 27, 2025 at 7:34 AM

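AUC (the c-statistic) only measures how well predictions rank events above non-events; it says nothing about whether the predicted risks are close to the risks actually observed. As a minimal illustration with hypothetical numbers, a strictly increasing distortion of the predictions (here adding 3 to every logit, an arbitrary choice) leaves AUC exactly unchanged at 0.8125, while the mean predicted risk moves far away from the observed event rate of 0.5. A higher AUC alone therefore cannot establish that a model is good. The helper names `auc` and `shift_logit` are illustrative.

```python
import math


def auc(scores, outcomes):
    """AUC / c-statistic: share of (event, non-event) pairs in which the event
    has the higher score, counting ties as one half."""
    events = [s for s, o in zip(scores, outcomes) if o == 1]
    non_events = [s for s, o in zip(scores, outcomes) if o == 0]
    concordant = sum(1.0 if e > ne else 0.5 if e == ne else 0.0
                     for e in events for ne in non_events)
    return concordant / (len(events) * len(non_events))


def shift_logit(p, shift=3.0):
    """Strictly increasing distortion: add `shift` to the logit of p."""
    logit = math.log(p / (1 - p))
    return 1 / (1 + math.exp(-(logit + shift)))


# Hypothetical predicted risks and observed 0/1 outcomes
risks = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
outcomes = [0, 0, 1, 0, 1, 0, 1, 1]
distorted = [shift_logit(p) for p in risks]

print("AUC, original risks: ", auc(risks, outcomes))
print("AUC, distorted risks:", auc(distorted, outcomes))
print("observed event rate: ", sum(outcomes) / len(outcomes))
print("mean predicted risk: ", sum(risks) / len(risks), "vs", sum(distorted) / len(distorted))
```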

Reposted by Maarten van Smeden

Published: the paper 'On the uses and abuses of Regression Models: a Call for Reform of Statistical Practice and Teaching' by John Carlin and Margarita Moreno-Betancur in the latest issue of Statistics in Medicine onlinelibrary.wiley.com/doi/10.1002/... (1/8)

onlinelibrary.wiley.com

June 26, 2025 at 12:23 PM

What is common knowledge in your field, but shocks outsiders?

Validated does not mean it works as intended. It means someone has evaluated it (and may have concluded it doesn’t work at all)

What is common knowledge in your field, but shocks outsiders?

We're not clear on what peer review is, at all.

What is common knowledge in your field, but shocks outsiders?

We’re not clear on what intelligence is, at all

June 17, 2025 at 6:44 AM

Reposted by Maarten van Smeden

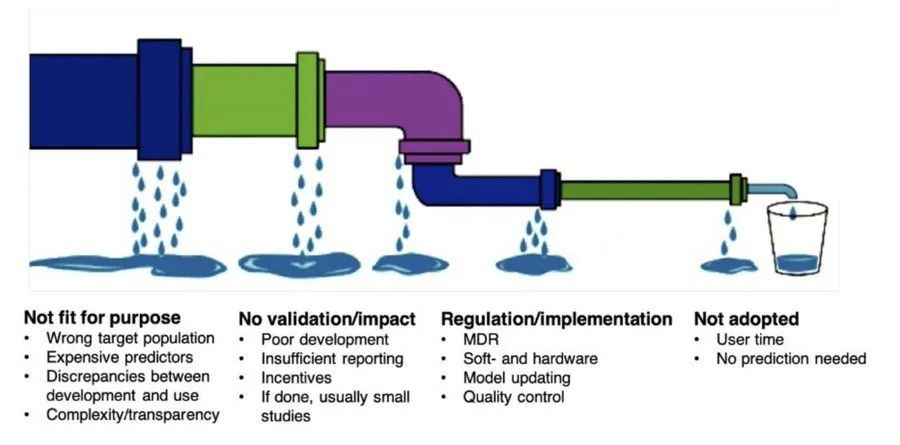

**New Lancet DH paper**

"Importance of sample size on the quality & utility of AI-based prediction models for healthcare"

- for broad audience

- explains why inadequate SS harms #AI model training, evaluation & performance

- pushback to claims SS irrelevant to AI research

👇

tinyurl.com/yrje52fn

Importance of sample size on the quality and utility of AI-based prediction models for healthcare

Rigorous study design and analytical standards are required to generate reliable findings in healthcare from artificial intelligence (AI) research. On…

www.sciencedirect.com

June 2, 2025 at 3:18 PM

Reposted by Maarten van Smeden

People always ask me, “how do I know my manuscript is done?”

There’s only one way, my friends.

If your file name looks something like this:

Manuscript - Final Draft 3.7 FINAL FINAL - FINAL (5).docx

Then, and only then, is it time.

May 31, 2025 at 9:17 PM

Re-proposing the Occam's taser: an automatic electric shock for anyone riding the AI hype train making their models unnecessarily complex

May 27, 2025 at 2:38 PM

Rule of thumb: If your model requires data to look like this (balanced after SMOTE), then maybe you want to use a different model

May 27, 2025 at 1:43 PM

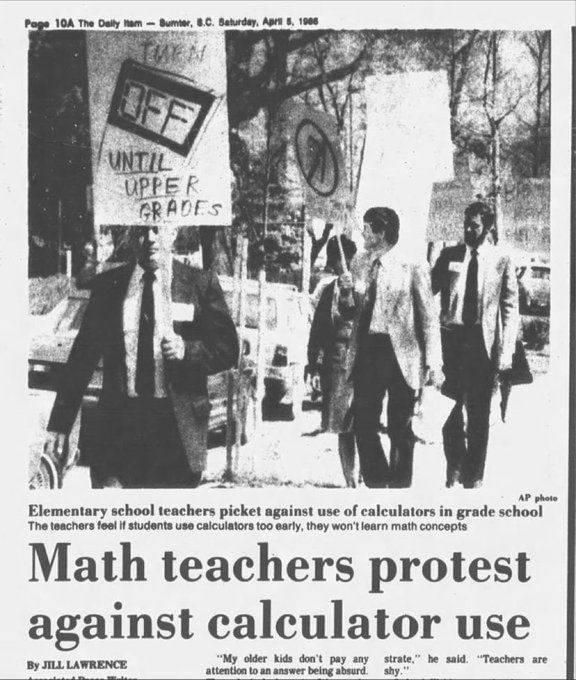
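One commonly cited reason for this advice is that rebalancing the training data distorts predicted risks: a model fitted to artificially balanced data overestimates risk in the real population. A minimal sketch with a hypothetical two-group cohort, using plain random oversampling (copying events) as a simpler stand-in for SMOTE, which synthesises new minority-class records instead. With one binary predictor, a logistic model with an intercept and a group indicator reproduces each group's observed event proportion, so fitted risks can be computed exactly with `fractions.Fraction`. After each event is copied so that it appears seven times, group A's fitted risk rises from 5% to about 27% and group B's from 20% to about 64%, even though the ranking of the groups is unchanged. The cohort counts and helper names are illustrative assumptions.

```python
from fractions import Fraction

# Hypothetical cohort: group -> (number of events, number of non-events)
cohort = {"A": (5, 95), "B": (20, 80)}


def saturated_model_risks(data):
    """Fitted risks of a logistic model with an intercept and a group indicator.

    With a single binary predictor this model is saturated, so its maximum
    likelihood fitted risk in each group equals the observed event proportion.
    """
    return {g: Fraction(ev, ev + non) for g, (ev, non) in data.items()}


def oversample_events(data):
    """Copy every event an integer number of times to balance the classes.

    Plain random oversampling, used here as a simpler stand-in for SMOTE.
    The factor is total non-events // total events, which balances the
    classes exactly only when the division has no remainder (as in this cohort).
    """
    total_events = sum(ev for ev, _ in data.values())
    total_non_events = sum(non for _, non in data.values())
    factor = total_non_events // total_events
    return {g: (ev * factor, non) for g, (ev, non) in data.items()}


before = saturated_model_risks(cohort)
after = saturated_model_risks(oversample_events(cohort))
for group in cohort:
    print(f"group {group}: fitted risk {float(before[group]):.3f} on original data, "
          f"{float(after[group]):.3f} after oversampling")
```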

We should really ban all use of AI from all our education because the use of AI will make our students dumb, and banning innovation has worked really well before

May 19, 2025 at 2:32 PM

As scientists it is difficult to stay in love with the questions once you fall in love with the answers

May 19, 2025 at 9:14 AM

Reposted by Maarten van Smeden

May 14, 2025 at 11:21 AM

So.... about using large language models (e.g. ChatGPT) for writing motivation letters for a job

I get it! And honestly, use all the technology you need to write the best letter you can

But after reading dozens of letters with almost EXACTLY the same intro paragraph I do get a bit tired of it

May 12, 2025 at 10:44 AM
