Working on Predictive Uncertainty in ML

➡️ Outperforms models by Amazon, Google, Datadog, Salesforce, Alibaba

➡️ industrial applications

➡️ limited data

➡️ embedded AI and edge devices

➡️ Europe is leading

Code: lnkd.in/eHXb-XwZ

Paper: lnkd.in/e8e7xnri

shorturl.at/jcQeq

We show how trajectories of spatial dynamical systems can be modeled in latent space by --> leveraging IDENTIFIERS.

📚Paper: arxiv.org/abs/2502.12128

💻Code: github.com/ml-jku/LaM-S...

📝Blog: ml-jku.github.io/LaM-SLidE/

1/n

#CombinatorialOptimization #StatisticalPhysics #DiffusionModels

Our latest research exposes critical security risks in AI assistants. An attacker can hijack them by simply posting an image on social media and waiting for it to be captured. [1/6] 🧵

*LIBERO: “xLSTM shows great potential”

*RoboCasa: “xLSTM models, we achieved success rate of 53.6%, compared to 40.0% of BC-Transformer”

*Point Clouds: “xLSTM model achieves a 60.9% success rate”

It turns out, Bayesian reasoning has some surprising answers - no cognitive biases needed! Let's explore this fascinating paradox quickly ☺️

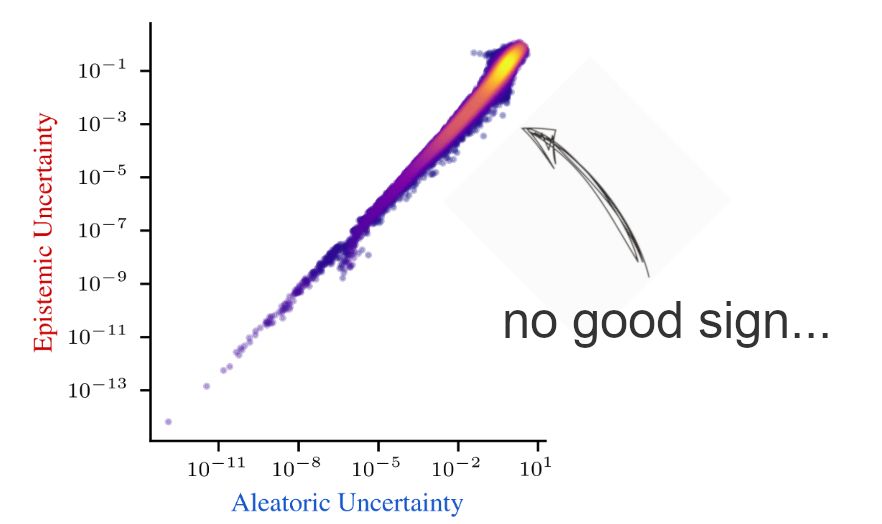

Introducing 𝗚-𝗡𝗟𝗟, a theoretically grounded and highly efficient uncertainty estimate, perfect for scalable LLM applications 🚀

Dive into the paper: arxiv.org/abs/2412.15176 👇

🔗 website: sites.google.com/view/fpiwork...

🔥 Call for papers: sites.google.com/view/fpiwork...

more details in thread below👇 🧵

By giving models more "time to think," Llama 1B outperforms Llama 8B in math—beating a model 8x its size. The full recipe is open-source!

📖 arxiv.org/abs/2402.19460 🧵1/10

📖: arxiv.org/abs/2402.19460

Tickets for in-person participation are "SOLD" OUT.

We still have a few free tickets for online/virtual participation!

Registration link here: moleculediscovery.github.io/workshop2024/
