Kevin M. King

@kevinmking.bsky.social

He/him. Something something Self control and quant methods. Professor of Psychology at University of Washington. All opinions are my own and correct. Co-host of https://thatimplementationsciencepodcast.podbean.com/

https://faculty.washington.edu/kingkm

"Let's just draw the target around whatever we hit"

November 10, 2025 at 8:47 PM

@charlymarie.bsky.social, you were right. I'd messed up the plot code.

October 10, 2025 at 3:04 PM

Reposting to show how I'm a moron, but differently than I thought.

Imputation does change things (estimates, but interestingly not really CIs). And the choice of glmm versus Bayesian doesn't matter tremendously (except that the latter takes orders of magnitude longer to run).

October 10, 2025 at 3:04 PM

That feeling when you spend six months struggling to implement Bayesian GLMMs with imputed data only to find that the observed vs. imputed data analysis results are identical.

Yes, I am a moron.

October 8, 2025 at 11:34 PM

Every time someone wants to decide something on merit alone

October 5, 2025 at 3:55 PM

People have a lot of critiques of grant review, but they never talk about the harassment you can get from other members of the panel.

I was just trying to speak the language of the youth!

June 11, 2025 at 4:03 AM

We kind of wrote this into a grant application where we proposed a specification curve analysis looking at how emotions are related to alcohol use.

The constraint, of course, is that it all has to boil down to the same exact model, so not exactly the same.

June 3, 2025 at 1:32 PM

We were at Deception Pass this weekend! What beautiful weather, right?

May 27, 2025 at 2:58 AM

But of course, does it make a difference?

Comparing brms on the imputed data with a (far faster and) simpler glmm.

No, not really. A simple glmm on the observed data gives nearly the same results as a Bayesian model over the imputed data.

March 15, 2025 at 2:10 PM

Thanks for all the suggestions in this thread!

I moved the imputation over to Blimp and got much better results

(www.appliedmissingdata.com/blimp)

My posterior is lumpy no more!

March 15, 2025 at 2:10 PM

I seem to have fewer issues with other variables (see below for one example). I probably mis-specified the imputation model and will keep plugging away at it.

March 13, 2025 at 3:23 PM

Thanks. The left image is intercept means and CIs across imputations from a glmm run; the right is the posterior from a Bayesian glmm on the 20 imputed datasets.

Below is a plot of raw means/SDs (it's a binary variable so these are really proportions) across imputations.

March 13, 2025 at 3:18 PM
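
To spell out the "these are really proportions" point: for a 0/1 variable the mean is the proportion of ones, and the SD is fully determined by that proportion. A minimal Python sketch, assuming a plain list of 0/1 values; the function name `binary_summary` is hypothetical and uses the population SD (a sample SD with an n - 1 denominator would be slightly larger).

```python
import math

def binary_summary(values):
    """Return (proportion, population SD) for a list of 0/1 values."""
    if not values:
        raise ValueError("need at least one value")
    if any(v not in (0, 1) for v in values):
        raise ValueError("values must be coded 0/1")
    p = sum(values) / len(values)   # mean of a 0/1 variable is a proportion
    sd = math.sqrt(p * (1 - p))     # population SD depends only on p
    return p, sd
```

So a plot of means and SDs across imputations for a binary variable is mostly showing how the imputed proportion moves around.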

The intercepts are pretty consistent across imputations (binary variable).

But they do vary a lot more than the estimates of the predictors. Perhaps that's the issue.

March 11, 2025 at 3:19 PM

Help! I have a lumpy posterior!

Also my models look funny.

We ran imputation with mice in R, and for the two variables involved in this model, everything looked good (psrf < 1.05, chains mixed, etc.)

But the intercept estimates and SEs vary widely across imputations.

Isn't that concerning?

March 11, 2025 at 2:00 PM
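
One way to put a number on "vary widely across imputations" is to pool the intercept with Rubin's rules and look at how much of the total variance comes from between-imputation spread. A minimal Python sketch, assuming you already have one point estimate and one standard error per imputed dataset; the function name `pool_rubin` and the numbers in the usage lines are made up for illustration and are not from the analysis in this thread.

```python
import math
from statistics import mean, variance

def pool_rubin(estimates, std_errors):
    """Pool one parameter across m imputed datasets with Rubin's rules."""
    m = len(estimates)
    if m < 2 or m != len(std_errors):
        raise ValueError("need matching lists from at least two imputations")
    q_bar = mean(estimates)                         # pooled point estimate
    within = mean([se ** 2 for se in std_errors])   # mean within-imputation variance
    between = variance(estimates)                   # between-imputation variance (n - 1)
    total = within + (1 + 1 / m) * between          # Rubin's total variance
    return {
        "estimate": q_bar,
        "se": math.sqrt(total),
        "within": within,
        "between": between,
        # share of total variance due to differences between imputations
        "between_share": (1 + 1 / m) * between / total,
    }

# Hypothetical intercepts and SEs from five imputed datasets
pooled = pool_rubin([-1.2, -0.8, -1.5, -0.9, -1.1], [0.30, 0.28, 0.35, 0.29, 0.31])
print(pooled["estimate"], pooled["se"], pooled["between_share"])
```

A `between_share` close to 1 would mean most of the uncertainty in that parameter is coming from the imputation model rather than from sampling, which is roughly what a lumpy stacked posterior would suggest.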

I'm so damn proud of the RADLab.

Our first-year student @mikelaritter.bsky.social was a lead organizer of @standupforscience.bsky.social in Seattle, and most of the rest of the lab (Connor, Jonas, Diego) were key organizers yesterday, featured in Geekwire!

www.geekwire.com/2025/researc...

March 8, 2025 at 5:40 PM

Seattle showed up to #standupforscience today.

It has been really lonely in science lately. We've been facing flurries of changes, cuts, censorship, and all kinds of bad news and rumors of worse. And no clue what to do with all of it.

1/2

March 7, 2025 at 10:36 PM

Every time I review a paper.

February 6, 2025 at 4:25 PM

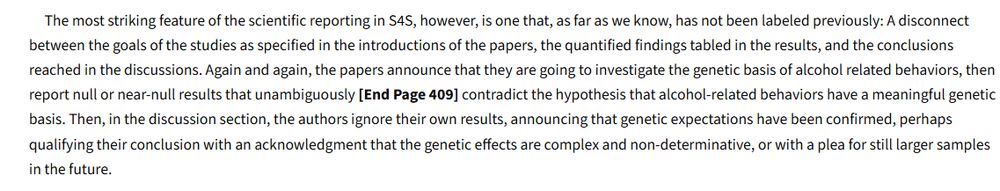

These are some outstanding commentaries and counter-arguments with really important implications for how we do and reward science. Very much worth a read.

My favorite snip (of many). I see this so often.

January 14, 2025 at 11:07 PM

We were there last week, it's so great!

The classics exhibits are so cool. My favorite was this one of a satyr wearing a mask. Carved from a single piece of stone, the artist even carved *inside* the mask.

January 9, 2025 at 8:28 PM
