Joel Z Leibo

@jzleibo.bsky.social

I can be described as a multi-agent artificial general intelligence.

www.jzleibo.com

Pinned

Joel Z Leibo

@jzleibo.bsky.social

· Nov 16

GitHub - google-deepmind/concordia: A library for generative social simulation

A library for generative social simulation. Contribute to google-deepmind/concordia development by creating an account on GitHub.

github.com

Concordia is a library for generative agent-based modeling that works like a table-top role-playing game.

It's open source and model agnostic.

Try it today!

github.com/google-deepm...

Reposted by Joel Z Leibo

dream-logic is more powerful than logic-logic and oral cultures must encode knowledge into powerful meme-spells newsletter.squishy.computer/p/llms-and-h...

November 9, 2025 at 4:42 AM

Reposted by Joel Z Leibo

Like, even before JS Mill gave us a utilitarian ("net upside") argument to justify freedom of speech, there was an older intuition that banning symbols risks doing violence to thought itself, and ought to be approached with "warinesse."

November 7, 2025 at 5:03 AM

Reposted by Joel Z Leibo

I'm hiring a student researcher for next summer at the intersection of MARL x LLM. If you're a phd student with experience in MARL algorithm research, please apply and drop me an email so that I know you've applied! www.google.com/about/career...

Student Researcher, PhD, Winter/Summer 2026 — Google Careers

www.google.com

November 7, 2025 at 4:31 AM

Reposted by Joel Z Leibo

Today my colleagues in the Paradigms of Intelligence team have announced Project Suncatcher:

research.google/blog/explori...

tl;dr: How can we put datacentres in space where solar energy is near limitless? Requires changes to current practices (due to radiation and bandwidth issues).

🧪 #MLSky

Exploring a space-based, scalable AI infrastructure system design

research.google

November 4, 2025 at 10:36 PM

Reposted by Joel Z Leibo

My favorite part of pragmatism is when it’s like “maybe instead of worrying about shit that doesn’t matter we should worry about shit that does.”

“Oh and btw we’ll learn a lot more about the shit that doesn’t in the process anyway.” 🫣

[2/9] We argue that instead of getting stuck on metaphysical debates (is AI conscious?), we should treat personhood as a flexible bundle of obligations (rights & responsibilities) that societies confer.

November 3, 2025 at 12:54 PM

Reposted by Joel Z Leibo

This paper is a great exposition of how "personhood" doesn't need to be, and in fact should not be, all-or-nothing or grounded in abstruse, ill-defined metaphysical properties. As I argued in my recent @theguardian.com essay, we can and should prepare now: www.theguardian.com/commentisfre...

[1/9] Excited to share our new paper "A Pragmatic View of AI Personhood" published today. We feel this topic is timely, and rapidly growing in importance as AI becomes agentic, as AI agents integrate further into the economy, and as more and more users encounter AI.

November 2, 2025 at 3:30 PM

Reposted by Joel Z Leibo

This paper is required reading. A pragmatic approach really clarifies the topic, even if—like me—you are mostly a “no” on the whole idea of artificial autonomous agents.

[1/9] Excited to share our new paper "A Pragmatic View of AI Personhood" published today. We feel this topic is timely, and rapidly growing in importance as AI becomes agentic, as AI agents integrate further into the economy, and as more and more users encounter AI.

November 2, 2025 at 2:10 PM

Reposted by Joel Z Leibo

This sounds cynical—but represents a huge advance over empty “AGI” speculation.

It’s a political question, not a technical one. Without social equality models *cannot do* many kinds of work (eg, negotiate agreements or manage workers). So they will only be human-equivalent if we decide they are.

intelligence is the thing which i have. admitting things are intelligent means considering them morally and socially equal to me. i will never consider a computer morally or socially equal to me. therefore no computer program will ever be intelligent

November 2, 2025 at 11:01 AM

Reposted by Joel Z Leibo

Joel is on a mission to get the EA/rationalist set to embrace Rortyian pragmatism. It's a tough job but someone's gotta do it.

[1/9] Excited to share our new paper "A Pragmatic View of AI Personhood" published today. We feel this topic is timely, and rapidly growing in importance as AI becomes agentic, as AI agents integrate further into the economy, and as more and more users encounter AI.

November 1, 2025 at 8:31 PM

This looks interesting

Interestingly, @simondedeo.bsky.social uses exactly this context of apology as a place where people can use "Mental Proof" to overcome the perception of AI use, by *credibly* communicating intentions -- based on proof of shared knowledge and values.

ojs.aaai.org/index.php/AA...

Undermining Mental Proof: How AI Can Make Cooperation Harder by Making Thinking Easier | Proceedings of the AAAI Conference on Artificial Intelligence

ojs.aaai.org

November 1, 2025 at 8:53 AM

[1/9] Excited to share our new paper "A Pragmatic View of AI Personhood" published today. We feel this topic is timely, and rapidly growing in importance as AI becomes agentic, as AI agents integrate further into the economy, and as more and more users encounter AI.

October 31, 2025 at 12:32 PM

Reposted by Joel Z Leibo

Very excited to be able to talk about something I've been working on for a while now - we're working with Commonwealth Fusion Systems, IMO the leading fusion startup in the world, to take our work on AI and tokamaks and make it work at the frontier of fusion energy. deepmind.google/discover/blo...

Google DeepMind is bringing AI to the next generation of fusion energy

We’re announcing our research partnership with Commonwealth Fusion Systems (CFS) to bring clean, safe, limitless fusion energy closer to reality with our advanced AI systems. This partnership...

deepmind.google

October 16, 2025 at 1:55 PM

Concordia 2.0: www.cooperativeai.com/post/google-...

Google DeepMind Releases Concordia Library v2.0

Google DeepMind has released Concordia v2.0, a platform providing a robust environment for creating multi-agent simulations, games, and AI evaluations.

www.cooperativeai.com

September 19, 2025 at 8:30 PM

One can also explain human behavior this way, of course 😉, we are all role playing.

But I certainly agree with the advice you offer in this thread. Humans harm themselves when they stop playing roles that connect to other humans.

However, a good explanation for much of the sophisticated behaviour we see in today's chatbots is that they are role-playing. 3/4

July 22, 2025 at 10:38 AM

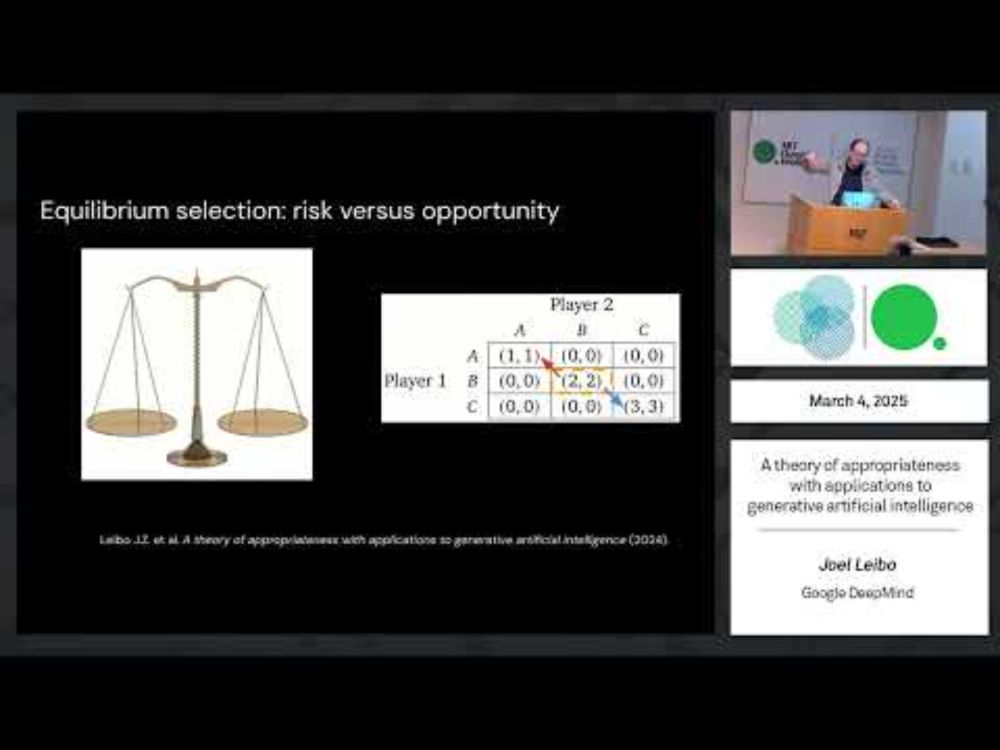

Reposting our paper from a few months ago as recent events again underscore its relevance:

"a theory of appropriateness with applications to generative AI"

arxiv.org/abs/2412.19010

A theory of appropriateness with applications to generative artificial intelligence

What is appropriateness? Humans navigate a multi-scale mosaic of interlocking notions of what is appropriate for different situations. We act one way with our friends, another with our family, and yet...

arxiv.org

July 12, 2025 at 6:47 AM

Reposted by Joel Z Leibo

How to cooperate for a sustainable future? We don't know (yet), but I'm thrilled to share that our new perspective piece has just been published in @pnas.org. Bridging complexity science and multiagent reinforcement learning can lead to a much-needed science of collective, cooperative intelligence.

June 17, 2025 at 10:29 AM

Reposted by Joel Z Leibo

Love the insight that language models have been more widely useful than video models.

For Levine, this is because LLMs kind of sneakily and indirectly model the brain. I would be tempted to say instead that shared languages are more powerful than single brains. But either way, it’s a good read!

Language Models in Plato's Cave

Why language models succeeded where video models failed, and what that teaches us about AI

open.substack.com

June 12, 2025 at 2:47 AM

Reposted by Joel Z Leibo

Bellingcat has followed up its 2023 article on testing LLMs on geolocation with a new review of LLMs' geolocation skills, with dramatically improved results www.bellingcat.com/resources/ho...

Have LLMs Finally Mastered Geolocation? - bellingcat

We tasked LLMs from OpenAI, Google, Anthropic, Mistral and xAI to geolocate our unpublished holiday snaps. Here's how they did.

www.bellingcat.com

June 6, 2025 at 7:51 AM

Reposted by Joel Z Leibo

The idea of "AI alignment" grew out of a community that thought you could solve morals like it was a CS problem set. Nice to see a more nuanced take.

Announcing our latest arxiv paper:

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

arxiv.org/abs/2505.05197

We argue for a view of AI safety centered on preventing disagreement from spiraling into conflict.

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

Artificial Intelligence (AI) systems are increasingly placed in positions where their decisions have real consequences, e.g., moderating online spaces, conducting research, and advising on policy. Ens...

arxiv.org

May 9, 2025 at 2:49 PM

Announcing our latest arxiv paper:

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

arxiv.org/abs/2505.05197

We argue for a view of AI safety centered on preventing disagreement from spiraling into conflict.

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

Artificial Intelligence (AI) systems are increasingly placed in positions where their decisions have real consequences, e.g., moderating online spaces, conducting research, and advising on policy. Ens...

arxiv.org

May 9, 2025 at 11:39 AM

Just posted today, here's a podcast interview where I spoke about multi-agent artificial general intelligence.

www.aipolicyperspectives.com/p/robinson-c...

Robinson Crusoe or Lord of the Flies?

The importance of Multi-Agent AI, with Joel Z. Leibo

www.aipolicyperspectives.com

April 23, 2025 at 11:57 AM

First LessWrong post! Inspired by Richard Rorty, we argue for a different view of AI alignment, where the goal is "more like sewing together a very large, elaborate, polychrome quilt", than it is "like getting a clearer vision of something true and deep"

www.lesswrong.com/posts/S8KYwt...

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt — LessWrong

We can just drop the axiom of rational convergence.

www.lesswrong.com

April 22, 2025 at 3:14 PM

That's nothing, it also improves performance if you tell the worker what they ate for breakfast!

The multiagent LLM people are going to do me in. What do you mean you told one agent it was a manager and the other a worker and it slightly improved performance

April 11, 2025 at 7:35 PM

In case folks are interested, here's a video of a talk I gave at MIT a couple weeks ago: youtu.be/FmN6fRyfcsY?...

A Theory of Appropriateness with Applications to Generative Artificial Intelligence

YouTube video by MITCBMM

youtu.be

April 1, 2025 at 8:50 PM

Reposted by Joel Z Leibo

A silly example of this being unexpected for AI forecasters

March 26, 2025 at 2:37 PM
