Now integrating thinking capabilities, 2.5 Pro Experimental is our most performant Gemini model yet. It’s #1 on the LM Arena leaderboard. 🥇

There was a lot of care and love in this launch.

Check out the video

youtu.be/UU13FN2Xpyw?...

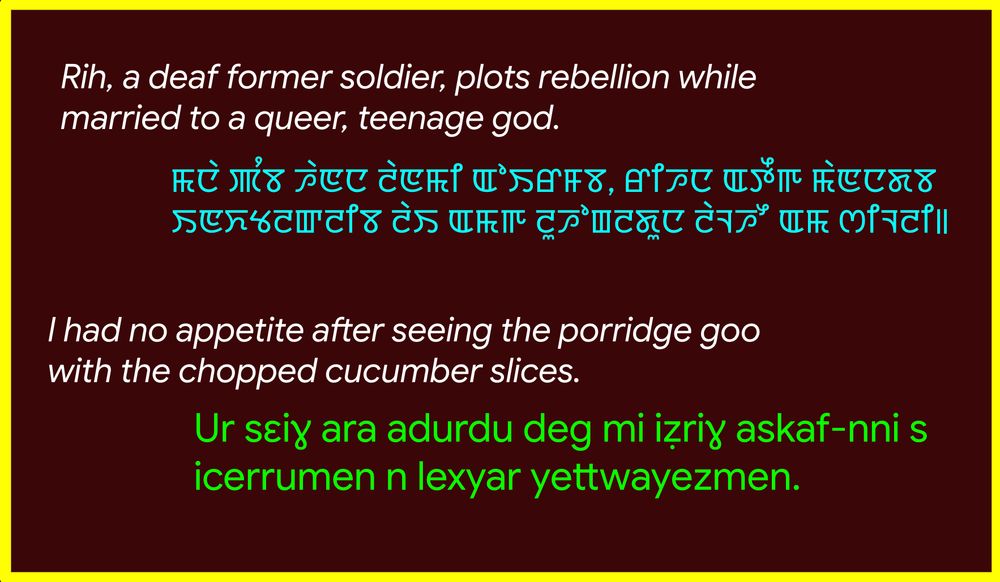

www2.statmt.org/wmt25/multil...

Releasing open weights helps to make breakthroughs in VLMs accessible to the research community.

I really hope it puts the final nail in the coffin of FLORES or WMT14. The field is evolving; legacy test sets can't show your progress.

arxiv.org/abs/2502.124...

Hugging Face: huggingface.co/datasets/goo...
