Natural Language Processing, LLM Reasoning

Actively seeking a PhD position for spring/fall 2025 ✨

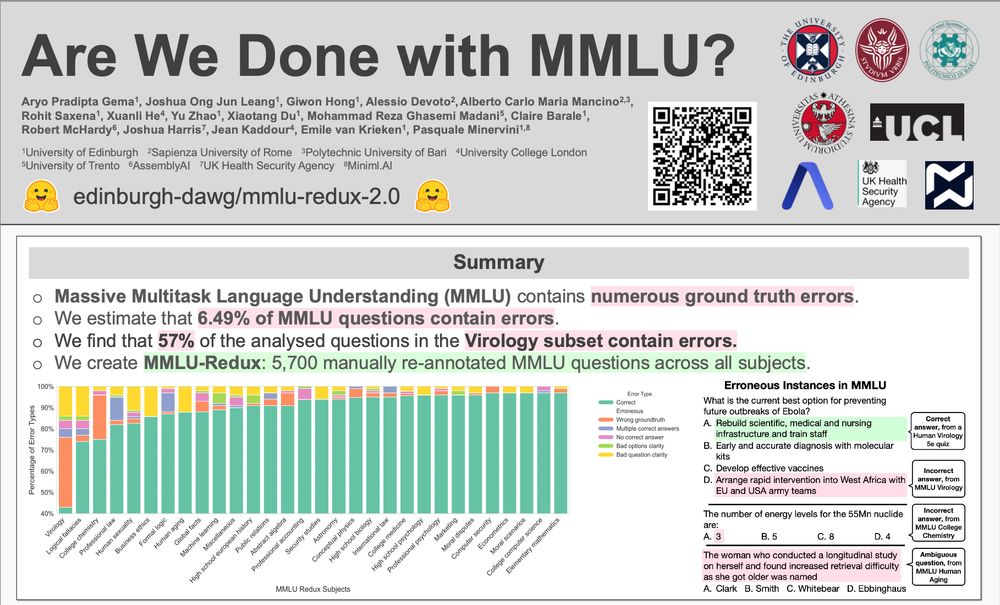

Wish I could be there for our "Are We Done with MMLU?" poster today (9:00-10:30am in Hall 3, Poster Session 7), but visa drama said nope 😅

If anyone's swinging by, give our research some love! Hit me up if you check it out! 👋

We investigated the errors present in the original MMLU in

arxiv.org/abs/2406.04127

@aryopg.bsky.social @neuralnoise.com

As part of a massive cross-institutional collaboration:

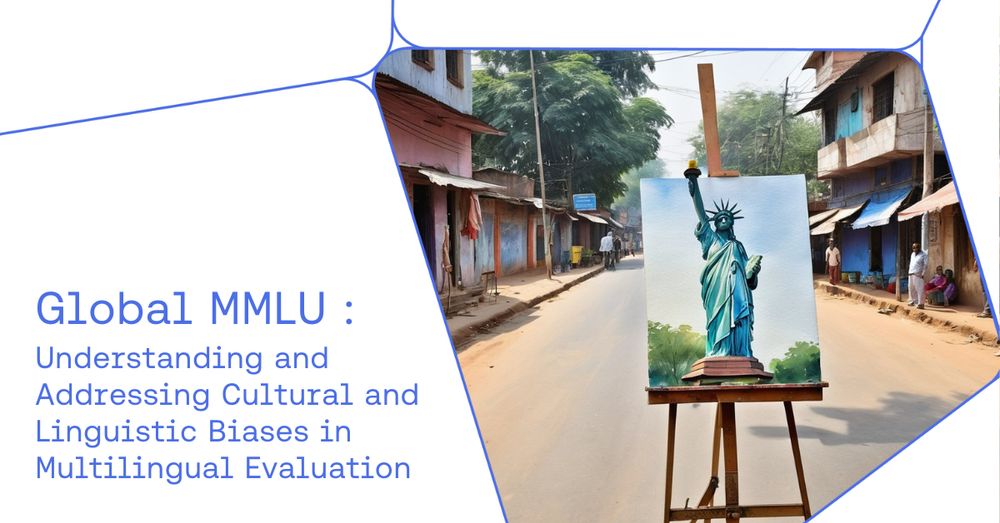

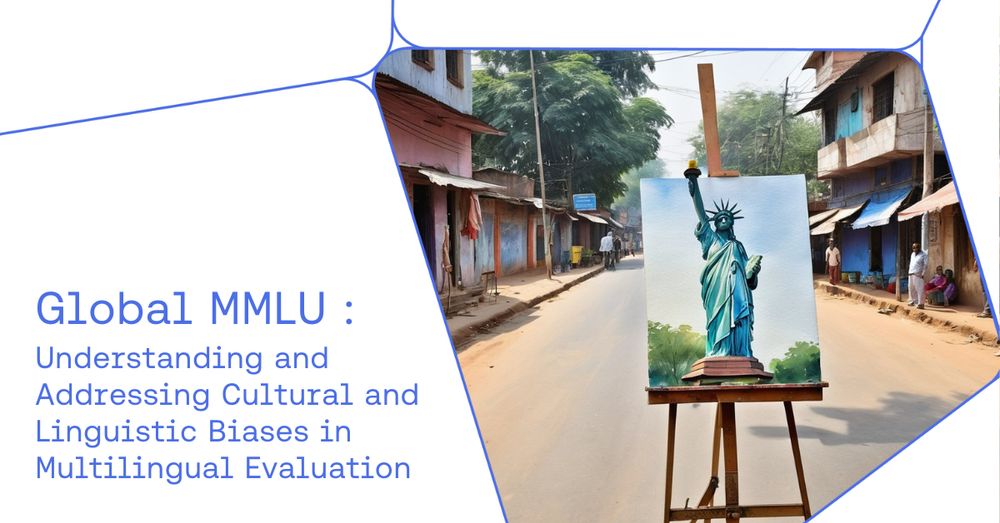

🗽 Found that MMLU is heavily overfit to Western culture

🔍 Professional annotation of cultural sensitivity data

🌍 Released an improved Global-MMLU covering 42 languages

📜 Paper: arxiv.org/pdf/2412.03304

📂 Data: hf.co/datasets/Coh...

The result of months of work with the goal of advancing Multilingual LLM evaluation.

Built together with the community and amazing collaborators at Cohere4AI, MILA, MIT, and many more.

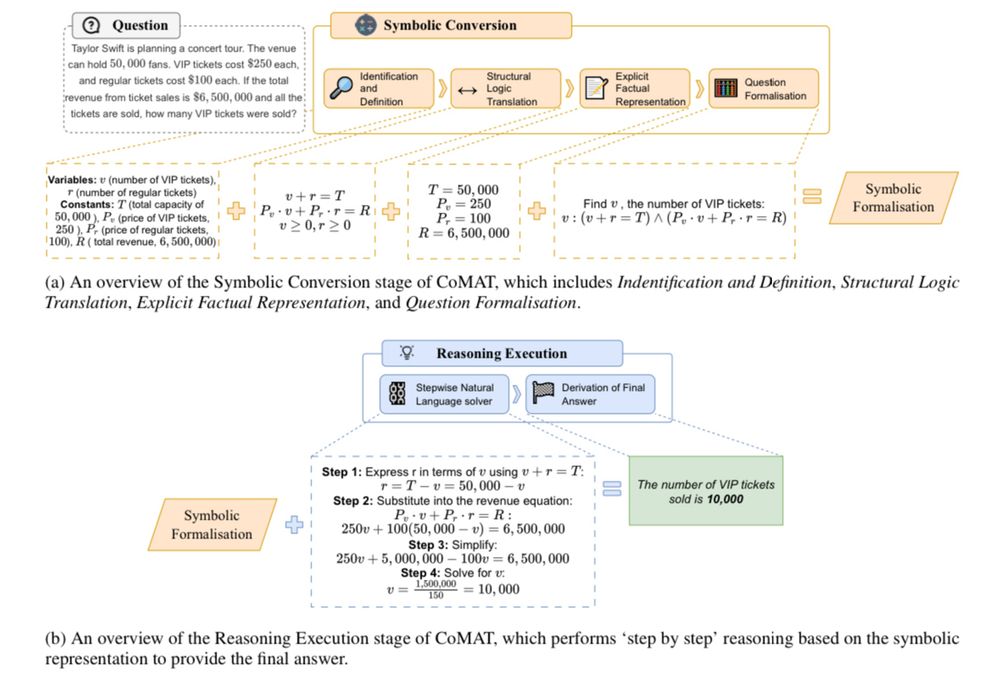

1) NLI remains a difficult task for LLMs

2) Having more few-shot examples is helpful (in my view, helping LLMs better understand class boundaries)

3) Incorrect predictions are often a result of ambiguous labels

It works for me because it lets me visually break down comments across reviewers into common themes, separate the things I can easily address from those I can't, and filter across all of these.

You all've got this!!!

arxiv.org/pdf/2410.103...

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai

I'd love to chat about my recent work (DeCoRe, MMLU-Redux, etc.). DM me if you’re around! 👋

DeCoRe: arxiv.org/abs/2410.18860

MMLU-Redux: arxiv.org/abs/2406.04127

Let me know if I missed you!

go.bsky.app/RMJ8q3i
