We will soon release our materials, human results, LLM results and all the cool images the models produced on our sentences.

arxiv.org/abs/2502.09307

One takeaway from this paper for the psycholinguistics community: run your reading comprehension experiment on an LLM first. You might get a general idea of the human results.

(Last image I swear)

In this image: While the teacher taught the puppies looked at the board.

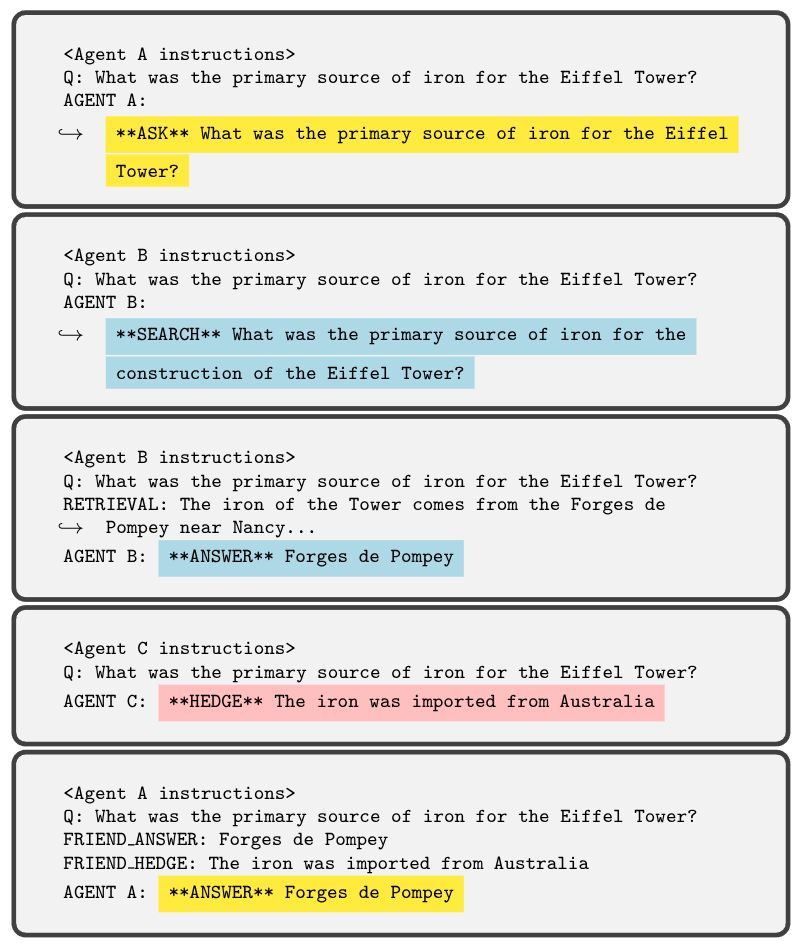

1. We asked the LLM to paraphrase our sentence

2. We asked text-to-image models to draw the sentences

In this image: While the horse pulled the submarine moved silently.

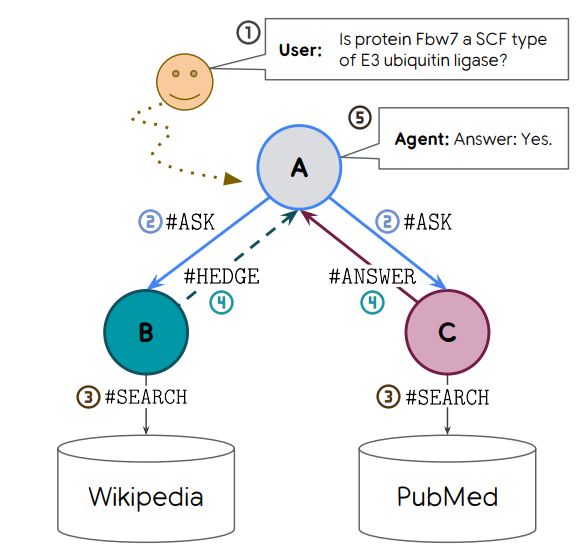

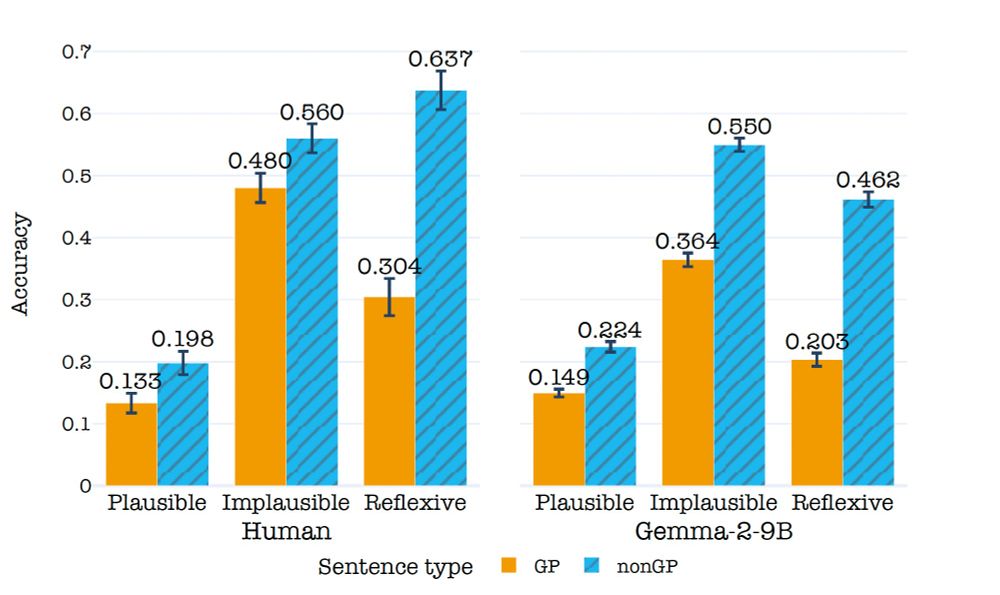

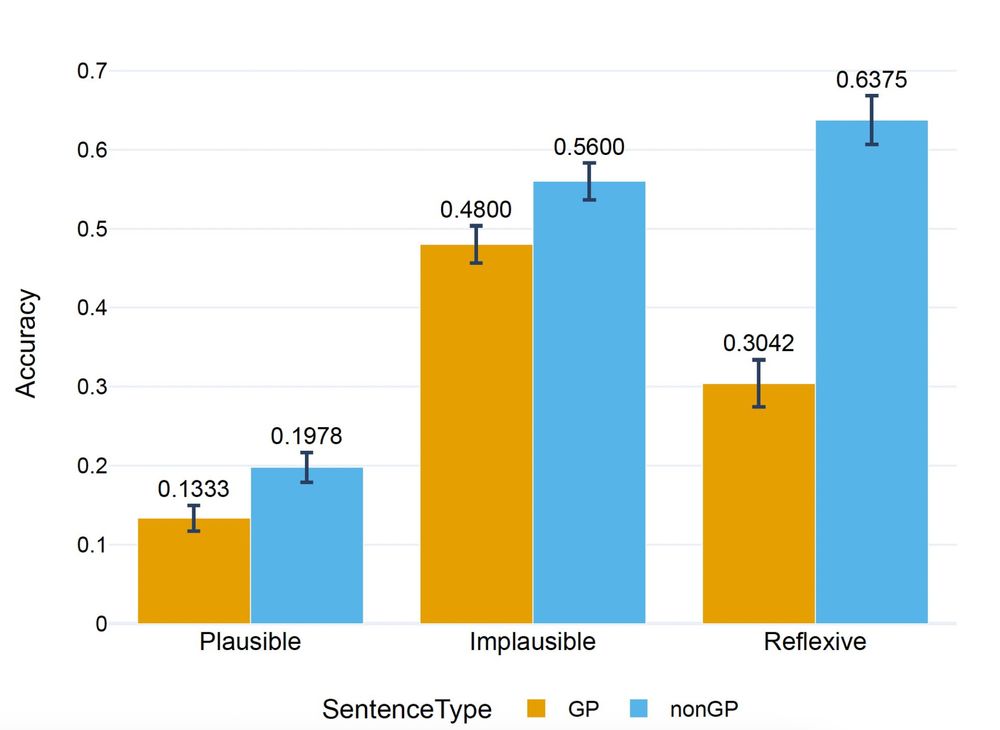

We found that LLMs also struggle with garden-path (GP) sentences and that, interestingly, the manipulations we used to test our hypotheses affected LLMs much as they affected humans.

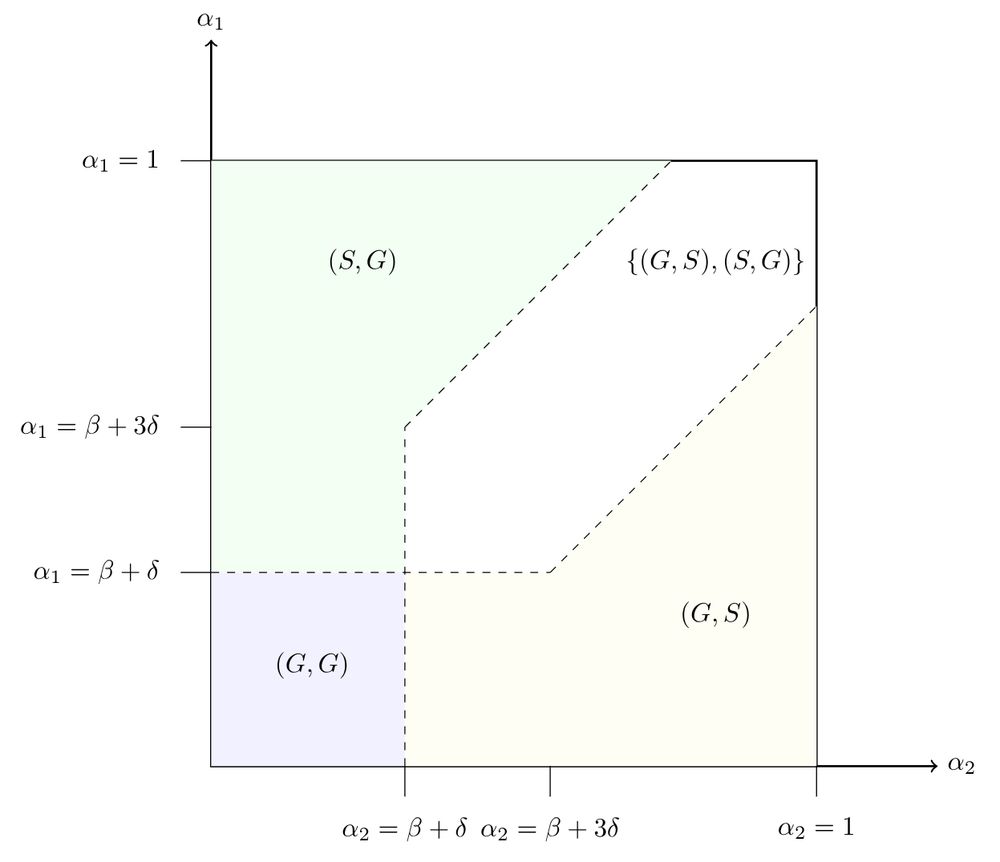

We devise hypotheses explaining why GP sentences are harder to process and test them. Human subjects answered a reading comprehension question about a sentence they read.

arxiv.org/abs/2312.09244

Preference data distribution doesn't explain this btw
